The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Image Sensor Technology interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Image Sensor Technology Interview

Q 1. Explain the difference between CMOS and CCD image sensors.

Both CMOS (Complementary Metal-Oxide-Semiconductor) and CCD (Charge-Coupled Device) image sensors are used to convert light into electrical signals, forming the basis of digital imaging. However, they differ significantly in their architecture and operational principles. Think of it like this: CCD is like a bucket brigade, carefully passing charges along a line, while CMOS is like a team of individual workers, each processing their own portion of the image simultaneously.

In a CCD, photons hitting the photodiodes generate charges that are then physically moved (transferred) across the sensor’s surface to a readout register and converted to a voltage by a small number of output amplifiers. This sequential process keeps pixel-to-pixel uniformity high but can be slow. CMOS, on the other hand, places an amplifier inside each pixel and integrates the analog-to-digital conversion circuitry on the same chip, typically with one ADC per column. Pixels are addressed row by row, and all the columns of a row are converted in parallel, allowing for faster readout speeds and on-chip processing capabilities.

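In essence, CCD has historically excelled in image quality, especially in low light, thanks to its low noise and high uniformity, while CMOS provides speed, power, and processing advantages. Modern CMOS designs (for example, backside-illuminated sensors) have largely closed the image quality gap, which is why CMOS dominates most modern applications.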

Q 2. Describe the key components of a CMOS image sensor.

A CMOS image sensor consists of several key components working together to capture and process light:

- Photodiodes: These are the light-sensitive elements that convert incoming photons into electrons. Imagine them as tiny buckets collecting light.

- Microlenses: These tiny lenses focus light onto the photodiodes, increasing light collection efficiency. Think of them as magnifying glasses for each pixel.

- Color Filter Array (CFA): A pattern of color filters (usually Bayer pattern) placed over the photodiodes, allowing each pixel to sense only one color (red, green, or blue). This is crucial for color image reproduction. It’s like having specialized ‘buckets’ that only collect specific color information.

- Amplification Circuitry: This circuitry amplifies the weak electrical signals generated by the photodiodes, strengthening the image signal before conversion to digital data. Think of this as a signal booster.

- Analog-to-Digital Converter (ADC): This converts the amplified analog signal into a digital signal that can be processed by a computer or other digital device. This is the final step in transforming light into numbers that a computer understands.

- Readout Circuitry: The row and column addressing logic that selects pixels for readout and transfers the digitized data off the sensor to the image processor. It’s like the transport system for all the collected digital information.

Q 3. What are the advantages and disadvantages of CMOS and CCD image sensors?

CMOS Advantages:

- Lower Power Consumption: Generally consumes less power than CCD, making it ideal for mobile devices and battery-powered applications.

- Faster Readout Speed: Simultaneous signal processing leads to higher frame rates.

- On-chip Processing: Allows for image processing directly on the sensor, reducing external processing requirements.

- Lower Manufacturing Cost: CMOS fabrication is generally cheaper and easier than CCD fabrication.

CMOS Disadvantages:

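- Higher Noise Level: Historically suffered from higher noise than CCD, particularly at low light levels, although modern CMOS sensors have largely closed this gap.

- Lower Quantum Efficiency: In traditional front-side-illuminated designs, in-pixel transistors and wiring block part of each pixel, so CMOS sensors were generally less efficient at converting photons to electrons than CCDs, resulting in a less sensitive sensor. Microlenses and backside illumination have greatly reduced this disadvantage.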

CCD Advantages:

- Higher Quantum Efficiency: Excellent light sensitivity, making them suitable for low-light imaging.

- Lower Noise: Generally produces cleaner images with lower noise.

CCD Disadvantages:

- Higher Power Consumption: Consumes significantly more power than CMOS.

- Slower Readout Speed: Sequential readout limits frame rates.

- Higher Manufacturing Cost: More complex and expensive to manufacture.

The best choice depends on the application. Some scientific and specialized imaging systems have traditionally favored CCD for its uniformity and low noise, while consumer electronics, mobile devices, and most modern cameras use CMOS for its speed, low power consumption, and cost-effectiveness.

Q 4. Explain the concept of Quantum Efficiency (QE) in image sensors.

Quantum Efficiency (QE) is a crucial parameter that describes how effectively an image sensor converts incident photons (light particles) into electrons. It’s expressed as a percentage and indicates the probability that a photon hitting the sensor will generate an electron-hole pair, contributing to the signal. A higher QE translates to a more sensitive sensor, capturing more detail in low-light conditions.

For example, a sensor with 80% QE converts, on average, 80% of the photons striking it into collected electrons. The remaining 20% are lost to reflection, absorbed in non-photosensitive layers, or generate electron-hole pairs that recombine before they can be collected. QE varies with wavelength, so the sensor’s efficiency differs for different colors of light.
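
The definition of QE reduces to a ratio of two counts. As a minimal sketch, assuming monochromatic light of known irradiance and an electron count already derived from the sensor output (for example, through a measured conversion gain), the photon count follows from the photon energy E = hc/λ. The function names and the example measurement are illustrative, not taken from a specific test procedure.

```python
# Physical constants (SI units)
PLANCK_H = 6.62607015e-34      # J*s
SPEED_OF_LIGHT = 2.99792458e8  # m/s


def photons_per_pixel(irradiance_w_m2, pixel_area_m2, exposure_s, wavelength_m):
    """Photons reaching one pixel during an exposure under monochromatic light."""
    photon_energy_j = PLANCK_H * SPEED_OF_LIGHT / wavelength_m  # E = h*c/lambda
    return irradiance_w_m2 * pixel_area_m2 * exposure_s / photon_energy_j


def quantum_efficiency(signal_electrons, incident_photons):
    """QE as a fraction: collected electrons per incident photon."""
    return signal_electrons / incident_photons


# Hypothetical measurement: 550 nm light, 0.01 W/m^2, 3 um x 3 um pixel, 10 ms exposure
photons = photons_per_pixel(0.01, (3e-6) ** 2, 0.010, 550e-9)
qe = quantum_efficiency(0.8 * photons, photons)  # pretend 80% of them were collected
print(f"{photons:.0f} photons per pixel, QE = {qe:.0%}")
```

In a real measurement the electron count comes from the sensor, so the same two functions turn a calibrated light source and a captured frame into a QE value per wavelength.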

In practical terms, a higher QE is desirable for applications like astrophotography or surveillance where low-light performance is critical. Manufacturers strive to improve QE through advancements in sensor materials and design.

Q 5. How does pixel size affect image quality?

Pixel size directly impacts image quality. Larger pixels collect more light, resulting in improved signal-to-noise ratio (SNR) and dynamic range. This translates to cleaner images with better detail, particularly in low-light conditions. Think of it as having larger ‘buckets’ to collect light; larger buckets mean better light collection and less noise.

Conversely, smaller pixels collect less light, leading to a lower SNR and dynamic range. Images captured with smaller pixels might appear noisier and lack detail, especially in low light. However, smaller pixels allow more pixels to be packed into the same sensor area, giving higher resolution and the ability to capture finer detail in the scene.

Therefore, the optimal pixel size depends on the application’s needs. High-resolution cameras for detailed images might prioritize smaller pixels, while cameras for low-light performance might focus on larger pixels, even at the cost of lower resolution.
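
The link between pixel size and SNR can be made concrete with a simplified, shot-noise-only model (an assumption: read noise, dark current, and fill factor are ignored). Collected electrons scale with pixel area and shot noise with the square root of the electron count, so in this model SNR scales linearly with pixel pitch. The illumination and QE values are illustrative.

```python
import math


def shot_limited_snr(photons_per_um2, pixel_pitch_um, qe):
    """Per-pixel SNR when photon shot noise is the only noise source."""
    electrons = photons_per_um2 * pixel_pitch_um ** 2 * qe  # signal grows with pixel area
    return electrons / math.sqrt(electrons)                  # noise = sqrt(signal)


# Same scene illumination and QE, two pixel pitches
small = shot_limited_snr(100, 1.0, 0.8)  # 1.0 um pixel
large = shot_limited_snr(100, 2.0, 0.8)  # 2.0 um pixel
print(f"1.0 um: SNR {small:.1f}, 2.0 um: SNR {large:.1f}")
print(f"improvement: {20 * math.log10(large / small):.1f} dB")
```

Doubling the pitch quadruples the pixel area and, in this model, doubles the per-pixel SNR (about 6 dB).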

Q 6. What is the role of the microlens in an image sensor?

Microlenses are tiny lenses positioned on top of each photodiode in an image sensor. Their primary function is to concentrate the incoming light onto the photodiode’s active area, significantly improving light collection efficiency. Think of them as tiny magnifying glasses focusing light onto the ‘buckets’ (photodiodes).

Without microlenses, a significant portion of the incident light would be lost, scattered, or fall outside the photosensitive area of the photodiode, leading to a reduction in image quality and sensitivity. Microlenses ensure that more photons reach the photodiode, maximizing the conversion of light into electrical signals and boosting both image quality and sensitivity, particularly in low-light settings.

Q 7. Explain the concept of fill factor in an image sensor.

The fill factor refers to the proportion of the pixel’s area that is actually photosensitive. It’s expressed as a percentage and represents the ratio of the active photodiode area to the total pixel area. A higher fill factor means a larger portion of the pixel is actively collecting light.

For example, a fill factor of 50% means that only half the area of each pixel is actively capturing light; the rest is occupied by other components like transistors, metal wiring, or gaps between photodiodes. A higher fill factor generally leads to better sensitivity and image quality because more of the incident light is collected. However, increasing the fill factor is challenging from a fabrication perspective, because the in-pixel circuitry still needs space; microlenses are commonly used to compensate for a low fill factor by redirecting light onto the photodiode.

In practice, engineers strive to maximize the fill factor to improve image sensitivity and reduce noise, though design constraints and technological limits often restrict the achievable fill factor.
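
The 50% example above is simple arithmetic. As a sketch, the functions below compute the fill factor from areas and the share of incident light that reaches the photodiode. The `microlens_concentration` parameter is a hypothetical, idealized factor introduced only for illustration; real microlens gain depends on the optics, the angle of incidence, and the pixel geometry.

```python
def fill_factor(photodiode_area_um2, pixel_area_um2):
    """Fraction (0..1) of the pixel area that is photosensitive."""
    if not 0 < photodiode_area_um2 <= pixel_area_um2:
        raise ValueError("photodiode area must be positive and no larger than the pixel")
    return photodiode_area_um2 / pixel_area_um2


def photons_on_photodiode(incident_photons, ff, microlens_concentration=1.0):
    """Photons landing on the photodiode.

    microlens_concentration is a hypothetical idealized factor (1.0 = no microlens).
    The result is capped at the incident light, since a lens cannot add photons.
    """
    return incident_photons * min(1.0, ff * microlens_concentration)


ff = fill_factor(12.5, 25.0)  # 5 um x 5 um pixel with a 12.5 um^2 photodiode
print(ff)
print(photons_on_photodiode(1000, ff))       # no microlens
print(photons_on_photodiode(1000, ff, 1.8))  # idealized microlens
```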

Q 8. What are the different types of noise in image sensors?

Image sensor noise is essentially unwanted signal that degrades image quality. Think of it like static on an old radio – it obscures the actual signal (the image) you want to hear (see).

- Read Noise: This is inherent noise generated by the electronics of the sensor itself during the readout process. It’s like a persistent hum in your radio. It’s independent of light levels.

- Dark Current Noise: Dark current is the generation of electrons in the sensor’s photodiodes even in the absence of light, and its random fluctuation follows shot-noise statistics (it is sometimes called dark shot noise). Imagine the radio picking up stray signals even when it’s not tuned to a station. It increases with temperature and integration time.

- Photon Shot Noise: This is the fundamental limit to image quality, arising from the discrete nature of light. Photons arrive randomly, creating a statistical fluctuation in the signal whose standard deviation equals the square root of the mean number of collected photoelectrons. It’s like the occasional popping sound in a radio transmission: unavoidable, but manageable.

- Fixed Pattern Noise (FPN): This is a non-uniformity in the sensor’s response across its pixels. Some pixels might be consistently brighter or darker than others, creating a pattern in the noise. Imagine if one speaker in your stereo system is always louder than the other.

These noise sources combine to affect the final image. Reducing their impact is a key aspect of image sensor design and processing.
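
Because these sources are independent, their standard deviations add in quadrature rather than linearly. The sketch below, with illustrative parameter values (not from any particular sensor), totals photon shot noise, dark shot noise, read noise, and a PRNU term (the gain-related part of fixed pattern noise) for one pixel, all in electrons.

```python
import math


def total_noise_e(signal_e, read_noise_e, dark_current_e_per_s, exposure_s, prnu_fraction=0.0):
    """Total RMS noise (electrons) for one pixel, assuming independent noise sources."""
    photon_shot = math.sqrt(signal_e)                         # Poisson: sigma = sqrt(mean)
    dark_shot = math.sqrt(dark_current_e_per_s * exposure_s)  # shot noise of the dark charge
    prnu = prnu_fraction * signal_e                           # fixed pattern, scales with signal
    return math.sqrt(photon_shot ** 2 + dark_shot ** 2 + read_noise_e ** 2 + prnu ** 2)


signal = 10_000  # electrons
noise = total_noise_e(signal, read_noise_e=3.0, dark_current_e_per_s=10.0,
                      exposure_s=1.0, prnu_fraction=0.01)
print(f"noise {noise:.1f} e-, SNR {signal / noise:.1f}")
```

With these numbers, a 1% PRNU contributes as much noise as photon shot noise (100 e- each), which is why fixed pattern correction matters most in bright images.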

Q 9. How do you reduce noise in image sensors?

Noise reduction in image sensors involves a multi-pronged approach combining hardware and software techniques.

- Hardware Techniques: These are implemented during sensor design and manufacturing. Examples include low-noise readout circuits (such as correlated double sampling, which subtracts each pixel’s reset level from its signal level) to reduce read noise, cooling the sensor to minimize dark current, and employing pixel architectures that reduce FPN.

- Software Techniques: These are applied during post-processing of the image data. Common methods include:

  - Averaging: Taking multiple images of a static scene and averaging them reduces random (temporal) noise, like photon shot noise; averaging N frames lowers it by roughly a factor of the square root of N. It does not reduce fixed pattern noise, which is identical in every frame.

  - Filtering: Applying digital filters (e.g., Gaussian, median) smooths the image, reducing high-frequency noise components. However, this can blur sharp details.

  - Noise Cancellation Algorithms: More sophisticated algorithms analyze the image to identify and subtract noise patterns, often targeting specific types of noise like FPN.

  - Denoising AI: Advanced machine learning techniques are increasingly used to achieve better denoising results, learning complex noise patterns and separating them from image details.

The optimal noise reduction strategy depends on the specific application and the balance between noise reduction and detail preservation. For example, a medical imaging system might prioritize low noise over perfect sharpness, while a camera for wildlife photography might favor sharp details even at the cost of slightly higher noise.
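
Frame averaging is the easiest of these techniques to check numerically. The simulation below is a sketch under stated assumptions: a static, uniform scene, and Gaussian temporal noise used as a stand-in for shot and read noise. Averaging 16 frames should cut the noise by roughly a factor of 4 (the square root of 16).

```python
import random
import statistics


def noisy_frame(true_value, sigma, num_pixels, rng):
    """Synthetic frame of a uniform scene plus zero-mean Gaussian temporal noise."""
    return [rng.gauss(true_value, sigma) for _ in range(num_pixels)]


def average_frames(frames):
    """Pixel-wise mean of equally sized frames (each a flat list of pixel values)."""
    n = len(frames)
    return [sum(values) / n for values in zip(*frames)]


rng = random.Random(0)
frames = [noisy_frame(100.0, 10.0, 10_000, rng) for _ in range(16)]
single_std = statistics.pstdev(frames[0])
averaged_std = statistics.pstdev(average_frames(frames))
print(f"single frame: {single_std:.2f}, 16-frame average: {averaged_std:.2f}")
```

A fixed pattern added identically to every frame would pass through this averaging unchanged, which is why it needs calibration rather than averaging.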

Q 10. Explain the concept of dark current in image sensors.

Dark current is the generation of electrons in a photodiode even in the absence of light. Imagine a faulty light switch that occasionally flickers on even when it’s supposed to be off. This is due to thermal effects within the sensor; higher temperatures lead to more electrons being generated, resulting in a higher dark current.

This dark current adds an offset to the signal, raising the black level across the image; because it varies slightly from pixel to pixel and fluctuates randomly from frame to frame, it also produces dark current noise. The level of dark current is highly dependent on factors such as temperature, exposure time, and the sensor’s material properties. Reducing dark current is crucial for low-light imaging. It’s often addressed by cooling the sensor, using specialized materials, or employing advanced circuit designs.
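
The temperature dependence can be sketched with a doubling-temperature rule of thumb. The 6.5 °C doubling interval used here is an assumption for illustration (the actual value is sensor-dependent and should come from the datasheet or a measurement), as are the reference values. The second function shows why subtracting the mean dark signal is not enough: the offset can be removed, but its shot noise cannot.

```python
import math


def dark_current_at(temp_c, ref_current_e_per_s, ref_temp_c, doubling_c=6.5):
    """Scale dark current with temperature using an assumed doubling interval."""
    return ref_current_e_per_s * 2 ** ((temp_c - ref_temp_c) / doubling_c)


def dark_signal_and_noise(current_e_per_s, exposure_s):
    """Mean dark charge (removable by subtraction) and its shot noise (not removable)."""
    mean_e = current_e_per_s * exposure_s
    return mean_e, math.sqrt(mean_e)


# Hypothetical sensor: 5 e-/pixel/s at 25 C; cooling by 13 C means two fewer doublings
cooled = dark_current_at(12.0, ref_current_e_per_s=5.0, ref_temp_c=25.0)
print(f"dark current at 12 C: {cooled:.2f} e-/s")
print("20 s exposure at 25 C (mean, noise):", dark_signal_and_noise(5.0, 20.0))
```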

Q 11. What is blooming and how is it mitigated?

Blooming occurs when an extremely bright light source overwhelms a photodiode’s capacity to hold charge. Think of it like overflowing a bucket – the excess charge spills over into neighboring pixels, creating bright streaks or halos around the bright source.

Mitigation strategies include:

- Exposure control: Shortening the exposure time (or reducing the aperture) keeps bright regions below the photodiode’s full-well capacity, so less excess charge is generated in the first place.

- Anti-blooming structures: These are designed into the sensor to provide a drain for excess charge, preventing it from overflowing into neighboring pixels. It’s like adding a drain hole to the bottom of the bucket.

- Software correction: Although not ideal, sophisticated algorithms can sometimes identify and correct blooming artifacts in the image post-processing.

The choice of mitigation strategy depends on factors such as the sensor’s design, the targeted application, and the cost constraints.
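
The bucket analogy can be turned into a deliberately crude one-dimensional model, loosely like a single CCD column, in which charge above full-well capacity spills into neighboring pixels. With `anti_blooming=True` the excess is drained away instead, so the bright pixel simply clips. The model, its even left/right split, and the values are illustrative assumptions, not a device simulation.

```python
def expose_row(photo_electrons, full_well, anti_blooming=False, max_iter=1000):
    """Final charge per pixel for one row/column of pixels (crude blooming model)."""
    if anti_blooming:
        # Overflow drain: charge above full well is removed, so pixels simply clip.
        return [min(q, full_well) for q in photo_electrons]
    charge = list(photo_electrons)
    for _ in range(max_iter):
        spilled = False
        for i, q in enumerate(charge):
            excess = q - full_well
            if excess > 0:
                spilled = True
                charge[i] = full_well
                # Split the excess between the two neighbors; charge pushed past
                # either end of the row is assumed to be lost.
                if i > 0:
                    charge[i - 1] += excess / 2
                if i < len(charge) - 1:
                    charge[i + 1] += excess / 2
        if not spilled:
            break
    return charge


row = [0, 0, 1000, 0, 0]  # one very bright pixel, in electrons
print(expose_row(row, full_well=400))
print(expose_row(row, full_well=400, anti_blooming=True))
```

Without the drain, the two neighbors report 300 e- of charge they never received from the scene; with it, only the saturated pixel is affected.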

Q 12. Describe the process of image sensor calibration.

Image sensor calibration involves correcting systematic errors and inconsistencies in the sensor’s response. It ensures that the sensor accurately captures the scene’s light. Think of it like calibrating a scale to ensure it accurately measures weight.

Calibration usually involves several steps:

- Dark Current Correction: Subtracting a dark frame (an image captured with no light at the same exposure time and temperature) from the measured image data to remove the offset caused by thermally generated electrons and by pixel-to-pixel offset variations.

- Fixed Pattern Noise (FPN) Correction: Identifying and correcting for non-uniformities in pixel response. Offset variations are removed with the dark frame, while gain variations (PRNU) are corrected using a flat-field image (an image of a uniformly illuminated surface). This compensates for the inconsistencies among the pixels.

- Gain and Offset Correction: Adjusting the sensor’s gain (its output per unit of light) and offset (its baseline signal with no light) so that the output maps consistently to light intensity. Any remaining non-linearity is corrected separately.

- Color Correction (for color sensors): Adjusting the response of different color filters to ensure accurate color reproduction.

Calibration methods can range from simple offset and gain adjustments to complex algorithms utilizing machine learning and multiple calibration images. Accurate calibration is essential for producing high-quality, reliable images.
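
The dark-frame and flat-field steps combine into one per-pixel formula: subtract the dark frame, then divide by each pixel’s relative gain measured from the flat. The sketch below assumes master dark and flat frames that have already been averaged from many exposures (to suppress temporal noise), that no pixel is dead in the flat, and that frames are flat Python lists; the three-pixel sensor is synthetic.

```python
def flat_field_correct(raw, dark, flat, flat_dark=None):
    """Dark-subtract and flat-field correct a frame (all inputs are equal-length lists).

    dark:      master dark frame at the same exposure and temperature as raw
    flat:      master flat frame of a uniformly illuminated target
    flat_dark: dark frame matching the flat's exposure (defaults to dark)
    """
    flat_dark = dark if flat_dark is None else flat_dark
    flat_signal = [f - d for f, d in zip(flat, flat_dark)]
    mean_flat = sum(flat_signal) / len(flat_signal)
    gains = [s / mean_flat for s in flat_signal]  # relative response of each pixel (PRNU map)
    return [(r - d) / g for r, d, g in zip(raw, dark, gains)]


# Synthetic sensor: per-pixel offsets and gains, true scene value 100 everywhere
offsets, pixel_gains = [10, 12, 8], [1.0, 1.1, 0.9]
raw = [o + g * 100 for o, g in zip(offsets, pixel_gains)]
flat = [o + g * 200 for o, g in zip(offsets, pixel_gains)]
result = flat_field_correct(raw, dark=offsets, flat=flat)
print(result)
```

The raw values differ from pixel to pixel even though the scene is uniform; after correction all three pixels agree.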

Q 13. What are the different types of image sensor outputs?

Image sensor outputs vary depending on the sensor’s design and intended use. The most common types include:

- Analog voltage: The sensor produces an analog voltage signal proportional to the light intensity for each pixel. This requires an analog-to-digital converter (ADC) to convert the signal into digital data for processing.

- Digital data: The sensor directly outputs digital data representing the light intensity for each pixel. This simplifies the signal processing chain.

- Raw data (Bayer pattern): For color sensors, the raw output is a Bayer pattern, where each pixel only senses one color (red, green, or blue). This pattern requires demosaicing to create a full-color image.

The choice of output type affects the sensor’s cost, power consumption, and the complexity of the subsequent image processing.

Q 14. Explain the concept of image signal processing (ISP).

Image Signal Processing (ISP) is a crucial step in transforming raw sensor data into a visually appealing and usable image. It’s like a post-production studio for your sensor’s raw output. It takes the noisy, raw data from the sensor and applies various algorithms to enhance and refine it.

Key functions of an ISP include:

- Demosaicing: Converting the raw Bayer pattern (for color sensors) into a full-color image by interpolating the missing color information.

- Noise Reduction: Implementing various algorithms (as discussed earlier) to minimize noise and improve image quality.

- White Balance: Correcting for color casts caused by different light sources.

- Color Correction: Adjusting colors to match a standard color space.

- Sharpening: Enhancing edges and details in the image.

- Gamma Correction: Adjusting the image’s brightness and contrast for better visual appearance.

- Auto Exposure and Auto White Balance (AE/AWB): Automatically adjusting the exposure and white balance to produce well-exposed and properly colored images.

ISPs are often implemented in dedicated hardware or software, and their sophistication directly impacts the quality of the final image. Advancements in ISP algorithms are constantly pushing the boundaries of image quality and computational photography.

Q 15. What are some common ISP algorithms?

Image Signal Processors (ISPs) contain a suite of algorithms crucial for transforming raw sensor data into high-quality images. These algorithms are complex and highly optimized for speed and efficiency. Here are some common examples:

Demosaicing: This algorithm interpolates color information from the sensor’s Bayer pattern (or other color filter array) to create a full-color image. Think of it like filling in the gaps in a coloring book to reveal the full picture. Different algorithms, like bilinear interpolation, bicubic interpolation, or more advanced methods using edge detection, offer varying degrees of accuracy and computational complexity.
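
A minimal sketch of bilinear demosaicing, assuming an RGGB Bayer layout (red at the top-left; real sensors may start the pattern at a different corner) and an image of at least 2×2 pixels stored as a list of rows. For each missing channel it averages the same-color samples in the surrounding 3×3 neighborhood, which for a Bayer mosaic reproduces bilinear interpolation. Edge-aware methods improve on this near sharp edges, where bilinear interpolation produces color fringes.

```python
def bayer_color(row, col):
    """Filter color at (row, col), assuming an RGGB Bayer layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic_bilinear(mosaic):
    """Convert an RGGB mosaic (list of rows) into rows of (R, G, B) tuples."""
    h, w = len(mosaic), len(mosaic[0])
    image = []
    for r in range(h):
        out_row = []
        for c in range(w):
            rgb = []
            for channel in "RGB":
                if bayer_color(r, c) == channel:
                    rgb.append(float(mosaic[r][c]))  # measured directly at this pixel
                    continue
                # Missing channel: average same-color samples in the 3x3 neighborhood
                samples = [
                    mosaic[rr][cc]
                    for rr in range(max(r - 1, 0), min(r + 2, h))
                    for cc in range(max(c - 1, 0), min(c + 2, w))
                    if bayer_color(rr, cc) == channel
                ]
                rgb.append(sum(samples) / len(samples))
            out_row.append(tuple(rgb))
        image.append(out_row)
    return image


# A uniformly colored scene (R=100, G=50, B=20) sampled through the mosaic
scene = {"R": 100, "G": 50, "B": 20}
mosaic = [[scene[bayer_color(r, c)] for c in range(4)] for r in range(4)]
rgb_image = demosaic_bilinear(mosaic)
print(rgb_image[1][1])
```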

White Balance: This corrects color casts caused by different light sources (incandescent, fluorescent, daylight). It ensures that white objects appear white in the final image, regardless of the lighting conditions. This involves adjusting the color channels to achieve a neutral white point.

Auto Exposure (AE): This algorithm determines the optimal exposure time and gain to capture a properly exposed image. It analyzes the scene brightness and adjusts the sensor settings accordingly. Think of it like your camera automatically adjusting its settings when you move from a dark room into bright sunlight outdoors.

Auto White Balance (AWB): This automatically adjusts the white balance based on the scene’s color temperature. It’s a more sophisticated version of white balance and uses scene analysis to determine the most appropriate white balance setting.
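
One simple AWB heuristic is the gray-world assumption: the average color of a scene is taken to be neutral gray, so per-channel gains are chosen to equalize the channel means. This is a sketch of that heuristic only (production AWB algorithms are more elaborate); it assumes linear RGB values and normalizes the gains so that green is unchanged.

```python
def gray_world_gains(pixels):
    """White-balance gains (R, G, B) under the gray-world assumption.

    pixels: list of (R, G, B) tuples in linear (not gamma-encoded) units.
    """
    n = len(pixels)
    mean_r = sum(p[0] for p in pixels) / n
    mean_g = sum(p[1] for p in pixels) / n
    mean_b = sum(p[2] for p in pixels) / n
    return mean_g / mean_r, 1.0, mean_g / mean_b  # green is the reference channel


def apply_gains(pixels, gains):
    """Multiply each channel by its white-balance gain."""
    gr, gg, gb = gains
    return [(r * gr, g * gg, b * gb) for r, g, b in pixels]


warm_scene = [(120, 100, 60), (240, 200, 120)]  # hypothetical pixels with a warm cast
gains = gray_world_gains(warm_scene)
balanced = apply_gains(warm_scene, gains)
print(gains)
print(balanced)
```

The heuristic fails on scenes dominated by one color (a green lawn, a blue sky), which is one reason real AWB uses richer scene analysis.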

Noise Reduction: This reduces noise artifacts, like graininess, that are inherent in digital images. Different techniques, including spatial filtering (averaging pixels) and temporal filtering (comparing frames), are used to improve image clarity. Advanced techniques utilize machine learning to intelligently remove noise without blurring important details.

Sharpening: This enhances the edges and details in an image to make them appear sharper and more defined. Edge detection algorithms are used to identify and emphasize sharp transitions in brightness.

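Gamma Correction: This applies a non-linear tone curve to the image data. The sensor’s raw response is approximately linear in light intensity, while human perception of brightness is not; gamma encoding allocates more code values to darker tones, making the image appear more natural and pleasing on standard displays and using the available bit depth more efficiently.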
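
As a concrete example of a gamma curve, the sketch below implements the sRGB transfer function from the IEC 61966-2-1 standard (a power curve with a short linear segment near black) and quantizes the result to 8 bits. A linear mid-gray of 18% maps to 118 out of 255, showing how much of the code range is assigned to darker tones.

```python
def srgb_encode(linear):
    """sRGB transfer function: linear value in [0, 1] -> encoded value in [0, 1]."""
    linear = min(max(linear, 0.0), 1.0)  # clip out-of-range input
    if linear <= 0.0031308:
        return 12.92 * linear              # linear segment near black
    return 1.055 * linear ** (1 / 2.4) - 0.055


def to_8bit(linear):
    """Gamma-encode and quantize to an 8-bit code value."""
    return round(srgb_encode(linear) * 255)


for value in (0.0, 0.01, 0.18, 0.5, 1.0):
    print(value, to_8bit(value))
```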

Q 16. How does sensor architecture impact image quality?

Sensor architecture significantly impacts image quality. Different architectures offer trade-offs in terms of resolution, speed, sensitivity, and cost. Key architectural aspects include:

Pixel Size: Larger pixels generally capture more light, leading to improved low-light performance and dynamic range. Smaller pixels allow for higher resolution but might suffer from reduced light sensitivity and increased noise.

Microlens Design: The microlenses focus light onto the photodiodes. Efficient microlens design improves light collection efficiency and reduces crosstalk between pixels.

On-chip Processing: Integrating processing elements directly onto the sensor allows for faster processing and reduced data transfer requirements. This can enable features like on-chip defect correction or noise reduction.

Sensor Type (CMOS vs. CCD): CMOS sensors are more common in modern cameras due to their lower power consumption, on-chip processing capabilities, and higher integration density. CCD sensors traditionally offered superior image quality but are more power-hungry.

For example, a larger sensor with larger pixels will generally result in better image quality in low-light conditions compared to a smaller sensor with smaller pixels, even if the smaller sensor has a higher resolution. The choice of sensor architecture depends heavily on the specific application and priorities.

Q 17. Describe different color filter array patterns (e.g., Bayer, CMYG).

Color Filter Arrays (CFAs) are patterns of color filters placed over the sensor’s photodiodes to capture color information. Each photodiode only measures the intensity of light, not its color, so the CFA gives each pixel a single color channel; the missing channels at each pixel are later estimated from neighboring pixels during demosaicing. Here are some common patterns:

Bayer Pattern: This is the most widely used CFA, consisting of a repeating 2×2 tile of one red, two green, and one blue filter. Green is sampled twice as frequently as red and blue to match human vision’s greater sensitivity to green.

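CMYG Pattern: This complementary-color pattern uses cyan, magenta, yellow, and green filters. Because each complementary filter passes two of the three primary colors, more light reaches the photodiodes, which can improve sensitivity compared to the Bayer pattern. It is less common because converting the signals to RGB is more complex and can reduce color accuracy.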

Other Patterns: Other less common patterns exist, each with potential advantages and disadvantages concerning color reproduction and demosaicing complexity. Research continues to explore new CFA designs to optimize image quality.

The choice of CFA affects the accuracy and efficiency of the demosaicing algorithm, which is a critical step in image processing. The Bayer pattern, despite its limitations, has become a de facto standard due to its simplicity and effectiveness.

Q 18. Explain the concept of rolling shutter and global shutter.

Rolling shutter and global shutter are two different methods for reading out data from the image sensor. The difference lies in how the sensor captures the image over time.

Rolling Shutter: In a rolling shutter, the sensor exposes and reads out the image row by row, with each row’s exposure starting slightly later than the one above it. Imagine a scanner reading a document. This method is faster and simpler to implement but can lead to image distortions, particularly with fast-moving objects (e.g., the ‘jello effect’ in video). The top of the image is captured before the bottom, so if the scene is moving, parts of the image will be slightly offset in time.

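Global Shutter: In a global shutter, all pixels start and stop their exposure at the same moment; the collected charge is then held in an in-pixel storage element and read out row by row. Imagine taking a snapshot with a traditional camera. This eliminates rolling-shutter motion distortion, but the extra in-pixel circuitry makes the design more complex, and global shutter sensors are often slower than comparable rolling shutter sensors, making them less suitable for some high-frame-rate applications.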
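
The size of rolling-shutter distortion can be estimated from the line time (the delay between the exposure start of consecutive rows). The sketch below uses hypothetical values: 1080 rows, a 15 µs line time, and an object moving horizontally at 1000 pixels per second. A global shutter, by contrast, has no row-to-row delay and therefore no skew.

```python
def rolling_shutter_skew(rows, line_time_us, speed_px_per_s):
    """Delay between the first and last row (ms) and resulting horizontal skew (pixels)."""
    readout_span_s = (rows - 1) * line_time_us * 1e-6  # last row starts this much later
    return readout_span_s * 1e3, speed_px_per_s * readout_span_s


delay_ms, skew_px = rolling_shutter_skew(rows=1080, line_time_us=15.0, speed_px_per_s=1000.0)
print(f"top-to-bottom delay {delay_ms:.2f} ms, vertical edge leans by {skew_px:.1f} px")
```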

Q 19. What are the advantages and disadvantages of rolling shutter vs. global shutter?

The choice between rolling and global shutter depends on the application’s requirements:

Rolling Shutter Advantages: Higher frame rates, lower cost, simpler implementation.

Rolling Shutter Disadvantages: Motion skew and distortion (the ‘jello effect’) with fast movements, and banding artifacts under flash or flickering light.

Global Shutter Advantages: No motion distortion, accurate representation of fast-moving scenes.

Global Shutter Disadvantages: Lower frame rates, higher cost, more complex implementation.

For example, many consumer cameras and smartphones use rolling shutters to achieve high frame rates at low cost. In applications where accurate representation of motion is critical (e.g., robotics, industrial inspection), a global shutter is preferred, despite its cost and complexity.

Q 20. How do you measure the performance of an image sensor?

Measuring image sensor performance involves a variety of metrics, each targeting specific aspects of image quality. Here are some key measurements:

Quantum Efficiency (QE): Measures the percentage of incident photons that are converted into electrons. A higher QE indicates better light sensitivity.

Dynamic Range: The ratio between the maximum and minimum detectable signal levels. A wider dynamic range allows for capturing details in both bright and dark regions of a scene.

Signal-to-Noise Ratio (SNR): Measures the ratio of signal strength to noise level. A higher SNR indicates a cleaner, less noisy image.

Dark Current: The current generated by the sensor in the absence of light. High dark current contributes to noise.

Full Well Capacity (FWC): The maximum number of electrons a pixel can hold before saturation occurs.

Read Noise: Noise introduced during the readout process.

Linearity: How closely the sensor’s output signal is proportional to the input light intensity. Non-linearity can lead to inaccuracies in color reproduction and exposure.

Specialized equipment, like photometers and integrating spheres, is often used to objectively measure these parameters.
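
Several of these metrics are linked by simple formulas. Using the common convention that dynamic range is full-well capacity divided by the read-noise floor, and adding noise sources in quadrature for SNR, the sketch below converts illustrative (hypothetical) datasheet values into ratios, decibels (20·log10), and photographic stops (log2).

```python
import math


def dynamic_range(full_well_e, read_noise_e):
    """Dynamic range as (ratio, dB, stops), using read noise as the noise floor."""
    ratio = full_well_e / read_noise_e
    return ratio, 20 * math.log10(ratio), math.log2(ratio)


def snr(signal_e, read_noise_e, dark_noise_e=0.0):
    """SNR with photon shot, read, and dark noise added in quadrature."""
    return signal_e / math.sqrt(signal_e + read_noise_e ** 2 + dark_noise_e ** 2)


ratio, dr_db, dr_stops = dynamic_range(full_well_e=10_000, read_noise_e=2.5)
print(f"DR: {ratio:.0f}:1 = {dr_db:.1f} dB = {dr_stops:.1f} stops")
print(f"max SNR: {20 * math.log10(snr(10_000, 2.5)):.1f} dB")
```

Note that maximum SNR is limited by shot noise at full well (about the square root of the full-well capacity), so a large dynamic range does not imply an equally large SNR.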

Q 21. What are some common image sensor specifications (e.g., dynamic range, SNR)?

Image sensor specifications are crucial for understanding the capabilities and limitations of a sensor. Here are some common specifications:

Resolution: Measured in megapixels (MP), indicating the number of pixels in the sensor array. Higher resolution allows for capturing more detail but may require more processing power.

Pixel Size: The physical size of individual pixels on the sensor. Larger pixels usually lead to better low-light performance.

Dynamic Range: Expressed in dB (decibels), indicating the range of light intensities the sensor can capture. A wider dynamic range allows for greater detail in both shadows and highlights.

Signal-to-Noise Ratio (SNR): Typically measured in dB, indicating the image’s clarity and freedom from noise. A higher SNR means less noise.

Sensitivity: Often expressed as ISO, indicating the sensor’s responsiveness to light. A higher ISO means greater sensitivity but potentially increased noise.

Frame Rate: The number of frames captured per second (fps). Important for video applications.

Shutter Type: Indicates whether the sensor uses a rolling shutter or a global shutter.

These specifications are crucial for selecting the right sensor for a specific application. For instance, a high-dynamic-range sensor would be preferred for landscape photography, while a high-frame-rate sensor would be better suited for capturing fast-moving objects.

Q 22. Explain the concept of temporal noise and spatial noise.

Noise in image sensors is broadly categorized into temporal and spatial noise. Think of it like this: temporal noise is noise that changes over time, while spatial noise is noise that’s fixed in location across the sensor.

Temporal Noise: This type of noise varies from frame to frame. A primary source is photon shot noise, which arises from the random arrival of photons. The more photons you collect (brighter scenes), the higher the signal-to-noise ratio, but shot noise is always present. Another contributor is dark current noise, stemming from thermally generated electrons in the sensor even without light; it increases significantly with temperature. Read noise, introduced by the readout and amplification electronics, is also a temporal component because it changes with each readout.

Spatial Noise: This noise is fixed in location on the sensor, meaning it remains consistent across frames. Fixed pattern noise (FPN) is a classic example: manufacturing variations in the pixels and readout circuits cause some pixels, rows, or columns to consistently output different values than others, even in the dark (this offset component is also called dark signal non-uniformity, or DSNU). PRNU (Photo Response Non-Uniformity) is the gain-related counterpart: each pixel has a slightly different sensitivity to light, so this pattern grows with the signal level. Imagine a slightly smudged paintbrush that leaves the same blotch in the same place on every canvas: the blotch is the spatial noise pattern, repeated in every frame.

Understanding these noise types is crucial for image processing and sensor design. For instance, algorithms like dark current subtraction or sophisticated noise filtering techniques need to be tailored to the specific noise characteristics.
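
The two categories can be separated experimentally from a stack of frames captured under identical, uniform conditions: temporal noise is the variation of each pixel over time, while spatial noise is the variation of the time-averaged frame across pixels. The sketch below uses synthetic data with assumed noise levels (temporal 5, spatial 2) and also removes the residual temporal noise that remains in the averaged frame.

```python
import math
import random
import statistics


def temporal_and_spatial_noise(frames):
    """Return (temporal, spatial) noise from frames of a static, uniform scene.

    frames: list of frames, each a flat list of pixel values (same length).
    """
    n_frames = len(frames)
    per_pixel = list(zip(*frames))  # each pixel's values over time
    temporal_var = statistics.fmean(statistics.variance(v) for v in per_pixel)
    mean_frame = [statistics.fmean(v) for v in per_pixel]
    # Averaging leaves temporal variance / n_frames in the mean frame; subtract it.
    spatial_var = max(statistics.variance(mean_frame) - temporal_var / n_frames, 0.0)
    return math.sqrt(temporal_var), math.sqrt(spatial_var)


rng = random.Random(1)
fixed_pattern = [rng.gauss(0.0, 2.0) for _ in range(1000)]  # same in every frame
frames = [[100.0 + fp + rng.gauss(0.0, 5.0) for fp in fixed_pattern] for _ in range(32)]
temporal, spatial = temporal_and_spatial_noise(frames)
print(f"temporal {temporal:.2f}, spatial {spatial:.2f}")
```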

Q 23. What are some common image sensor defects and how are they corrected?

Image sensor defects are unfortunately common. They can dramatically impact image quality. Let’s explore a few:

- Dead Pixels: Pixels that consistently output no signal (black). These can be identified during sensor testing and software can usually interpolate their values based on neighboring pixels.

- Stuck Pixels: Pixels that consistently output a maximum signal (bright white). Similar to dead pixels, they’re identified and corrected using interpolation or algorithms that recognize this saturation pattern.

- Hot Pixels: Pixels with abnormally high dark current, so they appear bright in long exposures or at high temperatures. These can be mapped (for example, from dark frames) and compensated for during processing.

- Column/Row Defects: Entire columns or rows of pixels may malfunction, resulting in vertical or horizontal bands of noise. These are harder to compensate for entirely, potentially requiring advanced algorithms and sometimes even discarding affected regions.

Correction techniques vary in complexity. Simple methods involve interpolation, substituting the defective pixel value with an average (or median) of its neighbors. More advanced techniques may use a statistical model of the defect pattern to improve accuracy and reduce artifacts, and these techniques continue to improve as algorithms and computing power advance. Furthermore, calibration procedures during sensor manufacturing or initial use aim to identify and compensate for many of these defects.
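
A minimal sketch of defect mapping and correction, assuming a monochrome sensor (for raw Bayer data, neighbors would have to come from the same color plane, two pixels apart) and a hypothetical fixed threshold. A dark frame reveals hot pixels; the same detection applied to a flat-field frame would reveal dead or stuck pixels. Each flagged pixel is then replaced by the median of its non-defective neighbors, which is less sensitive to outliers than a plain average.

```python
import statistics


def find_defects(frame, threshold):
    """Return {(row, col)} of pixels deviating from the frame median by more than threshold."""
    median = statistics.median(v for row in frame for v in row)
    return {
        (r, c)
        for r, row in enumerate(frame)
        for c, v in enumerate(row)
        if abs(v - median) > threshold
    }


def correct_defects(image, defects):
    """Replace each defective pixel with the median of its non-defective 3x3 neighbors."""
    h, w = len(image), len(image[0])
    corrected = [list(row) for row in image]  # leave the input untouched
    for r, c in defects:
        neighbors = [
            image[rr][cc]
            for rr in range(max(r - 1, 0), min(r + 2, h))
            for cc in range(max(c - 1, 0), min(c + 2, w))
            if (rr, cc) != (r, c) and (rr, cc) not in defects
        ]
        if neighbors:  # if every neighbor is also defective, keep the original value
            corrected[r][c] = statistics.median(neighbors)
    return corrected


dark = [[10] * 4 for _ in range(4)]
dark[1][2] = 250                      # one hot pixel in the dark frame
defects = find_defects(dark, threshold=50)

image = [[100] * 4 for _ in range(4)]
image[1][2] = 340                     # the same pixel reads too bright in a real image
fixed = correct_defects(image, defects)
print(defects, fixed[1][2])
```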

Q 24. Describe your experience with different image sensor interfaces (e.g., MIPI, LVDS).

My experience spans various image sensor interfaces, including MIPI (Mobile Industry Processor Interface) and LVDS (Low Voltage Differential Signaling). MIPI is dominant in mobile applications due to its low power consumption and high data rates, allowing for efficient transmission of image data from the sensor to the image processor. I’ve worked extensively with MIPI CSI-2 (Camera Serial Interface-2), optimizing its configuration to achieve desired frame rates and resolutions for high-speed camera systems.

LVDS, while offering robust transmission, tends to consume more power than MIPI. I’ve encountered it more in industrial applications where high-bandwidth and noise immunity are prioritized over power efficiency. This interface requires careful impedance matching and signal integrity management to avoid data corruption. For example, in a project involving a high-resolution machine vision system, we carefully designed the LVDS cabling and circuit boards to meet the stringent specifications required for stable operation.

I’m also familiar with parallel camera interfaces (sometimes called DVP, Digital Video Port) and their various implementations, though their use has become less prevalent due to the advantages offered by serial interfaces like MIPI and LVDS.
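
Configuring a link for a target frame rate and resolution starts with a bandwidth budget. The sketch below estimates the raw pixel data rate and the number of lanes required; the 20% overhead for blanking and protocol framing and the 1.5 Gb/s per-lane rate are placeholder assumptions, since the real figures depend on the sensor timing, the PHY, and the link configuration.

```python
import math


def link_budget(width, height, bits_per_pixel, fps, lane_rate_gbps, overhead=0.20):
    """Return (payload Gb/s, total Gb/s with assumed overhead, lanes needed)."""
    payload_gbps = width * height * bits_per_pixel * fps / 1e9
    total_gbps = payload_gbps * (1 + overhead)
    return payload_gbps, total_gbps, math.ceil(total_gbps / lane_rate_gbps)


# Hypothetical 4K sensor streaming 10-bit raw data at 60 fps
payload, total, lanes = link_budget(3840, 2160, 10, 60, lane_rate_gbps=1.5)
print(f"payload {payload:.2f} Gb/s, with overhead {total:.2f} Gb/s -> {lanes} lanes")
```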

Q 25. Discuss your experience with image sensor testing and characterization.

Image sensor testing and characterization is a core part of my work, essential for verifying sensor performance and ensuring product quality. The process typically involves several steps:

- Dark Current Measurement: Measuring the signal output of the sensor in complete darkness to quantify dark current noise at different temperatures.

- Fixed Pattern Noise (FPN) Measurement: Identifying and quantifying spatially fixed noise patterns.

- Photo Response Non-Uniformity (PRNU) Measurement: Assessing variations in pixel response to uniform illumination.

- Linearity Measurement: Evaluating the sensor’s response to varying light intensities to ensure a linear relationship between input and output.

- Quantum Efficiency (QE) Measurement: Determining the fraction of incident photons that the sensor converts into collected electrons, typically as a function of wavelength.

- Spatial Resolution Measurement: Assessing the sensor’s ability to resolve fine details.
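The dark-signal and PRNU measurements above reduce to simple statistics over frame stacks. The sketch below is a simplified illustration. It assumes frames are 2D lists of raw digital numbers (DN) captured at a fixed gain, and it averages many frames so that the remaining spread is mostly spatial rather than temporal. Standards such as EMVA 1288 define these metrics more rigorously, for example by correcting for residual temporal noise. The function names here are hypothetical.

```python
from statistics import mean, pstdev


def average_frames(frames):
    """Pixel-wise mean of a stack of frames (2D lists) to suppress temporal noise."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)] for r in range(rows)]


def flatten(image):
    return [value for row in image for value in row]


def dsnu_dn(dark_frames):
    """Dark-signal non-uniformity: spatial std of the averaged dark frame, in DN."""
    return pstdev(flatten(average_frames(dark_frames)))


def prnu_percent(flat_frames, dark_frames):
    """PRNU: spatial std / mean of the dark-subtracted averaged flat field, in %."""
    flat = flatten(average_frames(flat_frames))
    dark = flatten(average_frames(dark_frames))
    signal = [f - d for f, d in zip(flat, dark)]
    return 100.0 * pstdev(signal) / mean(signal)


def dark_current_dn_per_s(mean_dark_short, mean_dark_long, t_short, t_long):
    """Dark current as the slope of mean dark level versus exposure time."""
    return (mean_dark_long - mean_dark_short) / (t_long - t_short)


darks = [[[10, 10], [10, 10]]] * 2
flats = [[[110, 112], [108, 110]]] * 2
print(dsnu_dn(darks), round(prnu_percent(flats, darks), 3))
```

Repeating the dark measurement at several sensor temperatures gives the temperature dependence of dark current mentioned above.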

Characterization relies on specialized equipment, such as integrating spheres and calibrated light sources, together with software that automates the tests and the data analysis. I’ve used such tools to generate comprehensive performance reports that support sensor selection and system optimization. We always cross-check the acquired data with multiple measurement methods to minimize systematic errors.
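Quantum efficiency can be estimated by comparing the electrons collected in a pixel with the number of photons delivered to it. This sketch assumes monochromatic light of known wavelength, irradiance measured at the sensor plane (for example with a calibrated photodiode), square pixels, and a conversion gain in electrons per DN that has already been determined, commonly from a photon transfer curve. All function and parameter names are illustrative.

```python
PLANCK_J_S = 6.62607015e-34        # Planck constant (exact SI value)
SPEED_OF_LIGHT_M_S = 299_792_458   # speed of light (exact SI value)


def photons_per_pixel(irradiance_w_m2, pixel_pitch_m, exposure_s, wavelength_m):
    """Mean photons reaching one square pixel during the exposure."""
    energy_j = irradiance_w_m2 * pixel_pitch_m ** 2 * exposure_s
    photon_energy_j = PLANCK_J_S * SPEED_OF_LIGHT_M_S / wavelength_m
    return energy_j / photon_energy_j


def quantum_efficiency(mean_signal_dn, mean_dark_dn, gain_e_per_dn, photons):
    """Collected photoelectrons divided by incident photons."""
    electrons = (mean_signal_dn - mean_dark_dn) * gain_e_per_dn
    return electrons / photons


n_photons = photons_per_pixel(0.01, 3e-6, 0.010, 550e-9)
print(round(quantum_efficiency(600, 100, 3.0, n_photons), 3))
```

With 550 nm light at 0.01 W/m², a 3 µm pixel, a 10 ms exposure, and 500 DN above dark at 3 e-/DN, this gives a QE of roughly 0.6. Sweeping the wavelength produces the QE curve.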

Q 26. How do you choose the right image sensor for a specific application?

Choosing the right image sensor requires careful consideration of several factors, tailored to the specific application. It’s like choosing the right tool for a job: a hammer isn’t ideal for driving a screw!

- Resolution: The number of pixels dictates the level of detail captured. Higher resolution is necessary for applications needing fine details, while lower resolution can suffice for simpler tasks.

- Sensitivity: How well the sensor performs in low-light conditions. Low-light applications need high-sensitivity sensors.

- Dynamic Range: The sensor’s ability to capture both bright and dark areas in a scene simultaneously. High dynamic range is beneficial in scenes with significant contrast variation.

- Frame Rate: The number of images captured per second. High frame rates are needed for applications like high-speed imaging or video recording.

- Interface: The appropriate interface (MIPI, LVDS, etc.) depends on the power budget, data bandwidth requirements, and overall system architecture.

- Size and Form Factor: The physical dimensions of the sensor must be compatible with the intended system.

- Cost and Power Consumption: Balancing performance with budget and power limitations.

Detailed datasheets are essential for comparing candidates. For example, when selecting a sensor for a robotic vision application, we prioritized high speed and robust low-light performance, which led us to a specific CMOS sensor with a high frame rate and excellent sensitivity.
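Some of these criteria can be screened numerically before a detailed evaluation. The sketch below computes single-exposure dynamic range as 20·log10(full-well capacity / read noise) and filters a list of candidates against hard requirements. The `SensorSpec` fields and the candidate values are hypothetical and do not describe real parts; resolution, optics, interface, size, and cost would still be weighed alongside them.

```python
import math
from dataclasses import dataclass


@dataclass
class SensorSpec:
    name: str
    full_well_e: float     # full-well capacity, electrons
    read_noise_e: float    # temporal read noise, electrons rms
    max_fps: float         # maximum frame rate at the required resolution
    power_mw: float        # typical power consumption, milliwatts

    @property
    def dynamic_range_db(self) -> float:
        """Single-exposure dynamic range: full well over the read-noise floor."""
        return 20.0 * math.log10(self.full_well_e / self.read_noise_e)


def shortlist(candidates, min_fps, min_dr_db, max_power_mw):
    """Keep sensors meeting every hard requirement, best dynamic range first."""
    passing = [
        s for s in candidates
        if s.max_fps >= min_fps and s.dynamic_range_db >= min_dr_db and s.power_mw <= max_power_mw
    ]
    return sorted(passing, key=lambda s: s.dynamic_range_db, reverse=True)


candidates = [  # hypothetical parts, not real datasheet values
    SensorSpec("A", full_well_e=10_000, read_noise_e=2.0, max_fps=120, power_mw=300),
    SensorSpec("B", full_well_e=6_000, read_noise_e=1.5, max_fps=60, power_mw=200),
    SensorSpec("C", full_well_e=20_000, read_noise_e=2.0, max_fps=240, power_mw=600),
]
for s in shortlist(candidates, min_fps=100, min_dr_db=70, max_power_mw=400):
    print(s.name, round(s.dynamic_range_db, 1))
```

Here sensor C has the best dynamic range but exceeds the power budget, and B misses the frame-rate requirement, so only A survives the screen.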

Q 27. Explain your experience with image sensor modeling and simulation.

I have extensive experience in image sensor modeling and simulation, using tools like MATLAB and specialized software packages. Modeling helps us predict sensor performance before physical prototyping, reducing development time and costs. This involves creating detailed models that simulate the various physical processes within the sensor, including photon absorption, photoelectron generation and collection, charge transfer, and readout noise.

For example, I once used sensor models to simulate the impact of different pixel architectures on the sensor’s performance under varying illumination conditions. The simulations provided invaluable insights into optimizing pixel design parameters to achieve a specific tradeoff between sensitivity and noise. These simulations help predict the sensor’s output under different conditions, including various lighting scenarios, lens effects, and even sensor defects. This allows us to perform virtual experiments and optimize system design parameters before physical tests, making the overall development more efficient.
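Before running detailed device simulations, a first-order analytical noise model is often enough to compare pixel design options. This sketch combines photon shot noise, dark-current shot noise, read noise, and ADC quantization noise in quadrature. The two `PixelDesign` parameter sets are hypothetical, chosen only to show a sensitivity versus noise trade-off, and the model ignores effects such as fixed-pattern noise and crosstalk.

```python
import math
from dataclasses import dataclass


@dataclass
class PixelDesign:
    qe: float              # quantum efficiency at the wavelength of interest
    dark_e_per_s: float    # dark current, electrons per second
    read_noise_e: float    # read noise, electrons rms
    e_per_dn: float        # readout conversion, electrons per ADC step


def snr(design, photons, exposure_s):
    """Signal-to-noise ratio with independent noise sources added in quadrature."""
    signal_e = design.qe * photons
    variance = (
        signal_e                                # photon shot noise (Poisson)
        + design.dark_e_per_s * exposure_s      # dark-current shot noise
        + design.read_noise_e ** 2              # readout noise
        + design.e_per_dn ** 2 / 12.0           # ADC quantization noise (uniform over one step)
    )
    return signal_e / math.sqrt(variance)


large_pd = PixelDesign(qe=0.70, dark_e_per_s=50.0, read_noise_e=2.0, e_per_dn=0.5)
low_noise = PixelDesign(qe=0.55, dark_e_per_s=10.0, read_noise_e=1.2, e_per_dn=0.5)
for photons in (5, 50, 500, 10_000):
    print(photons, round(snr(large_pd, photons, 0.03), 2), round(snr(low_noise, photons, 0.03), 2))
```

With these illustrative numbers, the low-noise design wins at a few photons per pixel, where read noise dominates, while the higher-QE design wins in bright light, where shot noise dominates.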

Furthermore, simulations enable comparative analysis of different sensor technologies and architectures. For example, we might compare a global shutter sensor against a rolling shutter sensor to choose the best technology for avoiding motion artifacts, such as rolling-shutter skew, in a fast-paced application.
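The difference between the two shutter types can be quantified with a simple kinematic model. In a rolling shutter, rows start exposing one line time apart, so an object moving sideways appears sheared; in a global shutter all rows expose together, so this skew vanishes, although blur from motion during the exposure itself remains for both. The line time, velocity, and exposure below are assumed values, and the function names are hypothetical.

```python
def rolling_shutter_skew_px(velocity_px_per_s, rows, line_time_s):
    """Horizontal offset between the first and last row for an object moving sideways.

    Rows start exposing one line time apart, so the last row samples the scene
    (rows - 1) * line_time_s later than the first. A global shutter exposes all
    rows together, which makes this term zero.
    """
    return velocity_px_per_s * (rows - 1) * line_time_s


def motion_blur_px(velocity_px_per_s, exposure_s):
    """Smear within one row during its own exposure; affects both shutter types."""
    return velocity_px_per_s * exposure_s


print(round(rolling_shutter_skew_px(2000, rows=1080, line_time_s=10e-6), 2))
print(round(motion_blur_px(2000, exposure_s=1e-3), 2))
```

For example, with 1080 rows, a 10 µs line time, and an object moving at 2000 px/s, the skew is about 21.6 px, while a 1 ms exposure contributes only about 2 px of blur.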

Q 28. Describe a challenging problem you solved related to image sensor technology.

One challenging problem involved a high-speed imaging system where the sensor was exhibiting significant image artifacts due to unforeseen crosstalk between adjacent pixels. Initially, we suspected faulty sensor manufacturing, but thorough testing ruled that out. The issue manifested only under specific high-frequency illumination conditions.

Our investigation revealed a previously undocumented issue related to the sensor’s analog signal processing circuitry. Through detailed analysis of the electrical characteristics and careful simulation using SPICE modeling, we pinpointed the source of the crosstalk to a specific capacitor value within the sensor’s design.

The solution involved a multi-faceted approach. First, we modified the image processing algorithm to perform signal deconvolution using the derived crosstalk model, recovering signal fidelity. Second, we collaborated with the sensor manufacturer to change the capacitor’s value in future sensor revisions. This resolution significantly improved image quality and prevented further delays in the project. It underscores the importance of combining experimental validation with model-based analysis when troubleshooting complex problems in image sensor technology.
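The deconvolution step can be illustrated with a deliberately simple model. The sketch assumes hypothetical, symmetric nearest-neighbor crosstalk along a row, described by one leakage coefficient `alpha` that would be calibrated from measurements. The project's actual crosstalk model is not described here, and real crosstalk is two-dimensional and may depend on signal level. Because this model is a tridiagonal linear system, it can be inverted exactly with the Thomas algorithm.

```python
def apply_crosstalk(row, alpha):
    """Forward model: each pixel keeps (1 - 2*alpha) of its signal and leaks alpha
    to each horizontal neighbor; signal leaking past the row ends is lost."""
    n = len(row)
    out = []
    for i in range(n):
        left = row[i - 1] if i > 0 else 0.0
        right = row[i + 1] if i < n - 1 else 0.0
        out.append((1 - 2 * alpha) * row[i] + alpha * (left + right))
    return out


def remove_crosstalk(observed, alpha):
    """Invert the forward model by solving the tridiagonal system (Thomas algorithm)."""
    if not 0 <= alpha < 0.25:
        raise ValueError("alpha must be in [0, 0.25) for a diagonally dominant system")
    n = len(observed)
    b = 1 - 2 * alpha          # main diagonal
    a = c = alpha              # sub- and super-diagonals
    c_prime = [0.0] * n
    d_prime = [0.0] * n
    c_prime[0] = c / b
    d_prime[0] = observed[0] / b
    for i in range(1, n):      # forward elimination
        denom = b - a * c_prime[i - 1]
        c_prime[i] = c / denom if i < n - 1 else 0.0
        d_prime[i] = (observed[i] - a * d_prime[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d_prime[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        x[i] = d_prime[i] - c_prime[i] * x[i + 1]
    return x


blurred = apply_crosstalk([0.0, 0.0, 100.0, 0.0, 0.0], alpha=0.05)
print([round(v, 6) for v in remove_crosstalk(blurred, alpha=0.05)])
```

In practice the inversion also amplifies noise somewhat, so a regularized solution may be preferable when the leakage coefficient is large.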

Key Topics to Learn for Image Sensor Technology Interview

- Image Sensor Physics: Understand the fundamental principles behind photoelectric conversion, including the operation of various sensor types (CMOS, CCD) and their respective advantages and disadvantages. Explore concepts like quantum efficiency, fill factor, and dark current.

- Sensor Architectures: Familiarize yourself with different sensor architectures and structures, such as the Bayer color filter array, FSI (Front Side Illuminated), and BSI (Back Side Illuminated), and their impact on image quality and performance. Consider the trade-offs between resolution, sensitivity, and speed.

- Image Signal Processing (ISP): Grasp the basics of image signal processing pipelines within the sensor and beyond. Understand concepts like analog-to-digital conversion (ADC), noise reduction techniques, color correction, and demosaicing algorithms.

- Practical Applications: Be prepared to discuss applications of image sensor technology across various industries, such as automotive (ADAS, autonomous driving), mobile devices, medical imaging, machine vision, and security systems. Consider specific use cases and the unique challenges they present.

- Sensor Calibration and Characterization: Understand the process of calibrating and characterizing image sensors to ensure accurate and reliable performance. Explore techniques for correcting lens distortion, vignetting, and other optical aberrations.

- Data Acquisition and Transfer: Familiarize yourself with different data acquisition and transfer protocols and interfaces used with image sensors. This includes understanding data rates, bandwidth requirements, and interface standards (e.g., MIPI CSI-2).

- Advanced Topics (for Senior Roles): Explore advanced concepts such as 3D image sensors, event-based vision, spectral imaging, and high-dynamic-range (HDR) imaging.

- Problem-Solving Approach: Practice applying your theoretical knowledge to solve practical problems. Consider how you would troubleshoot common image sensor issues and design solutions to improve image quality or performance.
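As a starting point for the demosaicing topic, the sketch below performs bilinear interpolation of the green plane from a raw mosaic, assuming an RGGB Bayer layout (red at the top-left pixel). In this layout every red or blue site has only green pixels as its horizontal and vertical neighbors, so averaging them gives a green estimate. The function names are hypothetical; red and blue planes are interpolated in a similar way, and production ISPs use edge-aware methods to avoid color artifacts.

```python
def bayer_color(r, c):
    """Filter color at (r, c) for an RGGB pattern with red at the top-left."""
    if r % 2 == 0:
        return "R" if c % 2 == 0 else "G"
    return "G" if c % 2 == 0 else "B"


def interpolate_green(raw):
    """Bilinear green plane: keep measured greens, and at R/B sites average
    the available up, down, left, and right neighbors (all green in RGGB)."""
    rows, cols = len(raw), len(raw[0])
    green = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if bayer_color(r, c) == "G":
                green[r][c] = float(raw[r][c])
                continue
            neighbors = [
                raw[nr][nc]
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= nr < rows and 0 <= nc < cols
            ]
            green[r][c] = sum(neighbors) / len(neighbors)
    return green


# A flat scene whose green response is 50 DN, with different red and blue levels.
raw = [[{"R": 200, "G": 50, "B": 10}[bayer_color(r, c)] for c in range(4)] for r in range(4)]
green = interpolate_green(raw)
print(green[0][0], green[1][1])
```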

Next Steps

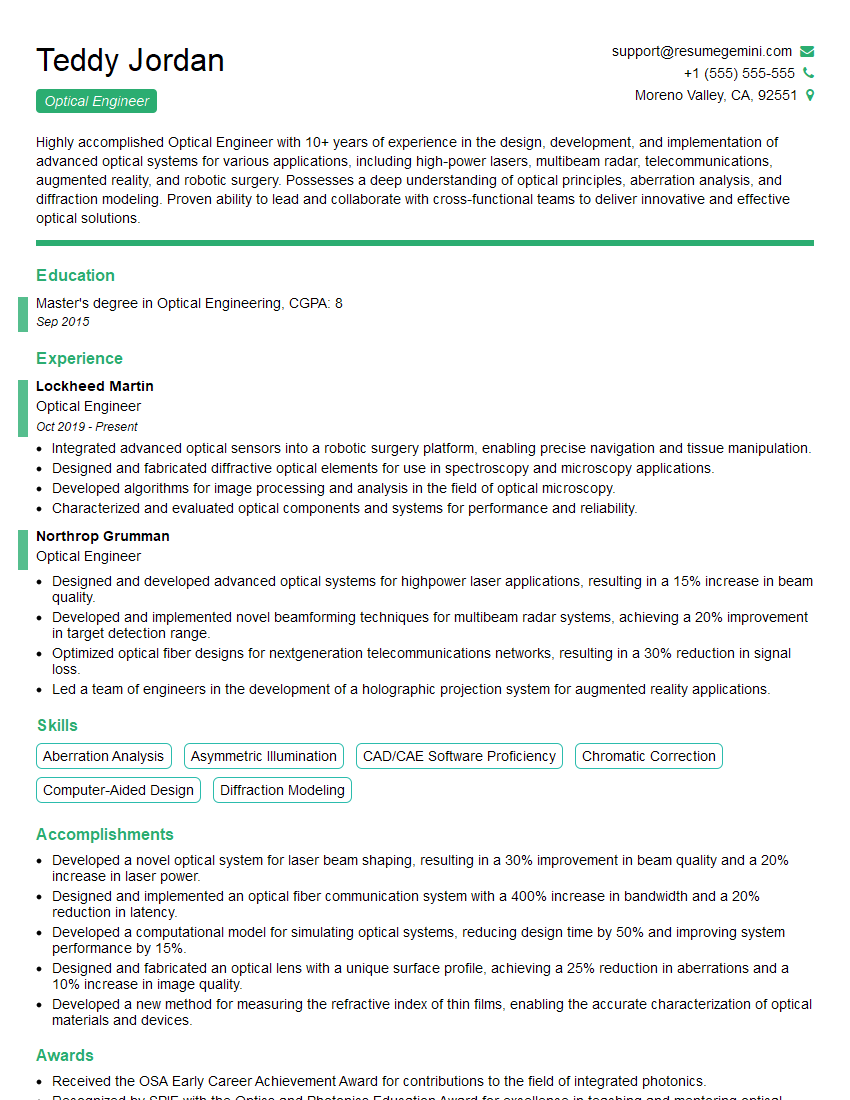

Mastering Image Sensor Technology opens doors to exciting and rewarding careers in a rapidly evolving field. Demonstrating a strong understanding of these concepts is crucial for securing your dream role. To maximize your chances of success, focus on building a compelling and ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource that can help you create a professional resume tailored to the specific requirements of Image Sensor Technology roles. Examples of resumes optimized for this field are available to guide you.
