Unlock your full potential by mastering the most common Processor Architecture interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Processor Architecture Interview

Q 1. Explain the difference between a microarchitecture and a macroarchitecture.

Imagine building a house. The macroarchitecture, more commonly called the instruction set architecture (ISA), is the blueprint: it defines the overall structure, including the registers (the rooms), the instruction set (the layout), and the memory model that programs rely on. It’s the high-level view that programmers and compilers interact with directly. The microarchitecture, on the other hand, is how the blueprint is actually built: how each component is implemented (pipelining, caching), how the workers (functional units) cooperate, and which techniques are used to optimize speed and efficiency. These low-level implementation details are largely hidden from software. Different microarchitectures can implement the same macroarchitecture, just as multiple construction crews can build the same house design, each with its own methods.

For example, the x86 instruction set architecture (ISA) is a macroarchitecture. Intel’s Core i7 and AMD’s Ryzen processors both implement the x86 ISA (same macroarchitecture), but they employ vastly different internal designs (different microarchitectures) to achieve performance.

Q 2. Describe the different stages of a typical CPU pipeline.

A typical CPU pipeline is like an assembly line for instructions. Each stage performs a specific task. A common pipeline includes:

- Instruction Fetch (IF): Retrieves the next instruction from memory.

- Instruction Decode (ID): Decodes the fetched instruction, determining its type and operands.

- Execute (EX): Performs the actual operation, such as arithmetic or logical operations.

- Memory Access (MEM): Accesses memory if the instruction requires it (e.g., load or store).

- Write Back (WB): Writes the result of the operation back to a register.

Think of it like making a sandwich: IF gets the bread and fillings, ID checks what type of sandwich it is, EX assembles it, MEM gets extra ingredients (if needed) from the fridge, and WB puts the finished sandwich on the plate.
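
The overlap between stages can be sketched in a few lines of Python. This is a minimal model that assumes an ideal pipeline with no hazards or stalls; the function names are illustrative only.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]

def pipeline_schedule(num_instructions):
    """For each instruction, map clock cycle -> stage (ideal pipeline, no stalls)."""
    schedule = []
    for i in range(num_instructions):
        # Instruction i enters IF in cycle i + 1 and advances one stage per cycle.
        schedule.append({i + s + 1: stage for s, stage in enumerate(STAGES)})
    return schedule

def total_cycles(num_instructions, num_stages=len(STAGES)):
    """Cycles needed to finish all instructions in an ideal pipeline."""
    if num_instructions == 0:
        return 0
    # The first instruction needs num_stages cycles; each later one finishes
    # exactly one cycle after its predecessor.
    return num_stages + num_instructions - 1

# Four instructions finish in 8 cycles instead of 4 * 5 = 20 without overlap.
print(total_cycles(4))
```

Once the pipeline is full, one instruction completes per cycle, which is where the throughput gain comes from.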

Q 3. What are the trade-offs between different cache memory organizations?

Cache memory organization involves trade-offs between speed, size, and cost. Different organizations exist, such as direct-mapped, set-associative, and fully associative caches.

- Direct-mapped: Simplest and cheapest. Each memory block has only one possible cache location. Lookups are fast, but conflict misses are frequent when multiple active blocks map to the same location.

- Set-associative: A compromise between direct-mapped and fully associative. Each memory block can map to a set of cache locations (e.g., 2-way, 4-way). Offers better miss rate than direct-mapped but is more complex and expensive.

- Fully associative: Any memory block can be placed in any cache location. Best miss rate but most complex and expensive due to the need for a complex comparison process to find a block.

The choice depends on the application. For cost-sensitive applications, a direct-mapped cache might suffice. For performance-critical applications, a set-associative or even fully associative cache may be necessary despite the higher cost.
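
To make the mapping concrete, here is a small sketch of how an address might be split into tag, set index, and block offset. The cache size, block size, and function name are assumptions chosen for illustration; setting `ways` to 1 models a direct-mapped cache, and setting it to the total number of blocks models a fully associative one.

```python
def split_address(addr, cache_bytes, block_bytes, ways):
    """Return (tag, set_index, offset) for a cache with the given geometry."""
    num_blocks = cache_bytes // block_bytes
    num_sets = num_blocks // ways          # 1 set when fully associative
    offset = addr % block_bytes            # byte within the block
    block_number = addr // block_bytes
    set_index = block_number % num_sets    # which set the block must go to
    tag = block_number // num_sets         # identifies the block within the set
    return tag, set_index, offset

# Hypothetical 1 KiB cache with 64-byte blocks (16 blocks in total).
for ways in (1, 2, 16):
    a = split_address(0x0000, 1024, 64, ways)
    b = split_address(0x0400, 1024, 64, ways)
    print(ways, "way(s):", a, b)
```

In all three configurations the two addresses land in the same set with different tags. With one way they evict each other (a conflict miss); with two or more ways they can reside in the cache together, at the cost of comparing more tags per lookup.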

Q 4. Explain the concept of branch prediction and its importance.

Branch prediction is a technique that tries to guess which branch will be taken in a conditional statement (e.g., if, else) before the condition is evaluated. This allows the CPU to start fetching instructions from the predicted branch, minimizing pipeline stalls. It’s like predicting the outcome of a coin toss before it lands to avoid wasting time waiting.

Its importance lies in improving performance, particularly in programs with many conditional branches. Accurate branch prediction significantly reduces the number of pipeline stalls and wasted cycles, leading to faster execution. However, inaccurate predictions lead to performance penalties as the CPU must discard fetched instructions and fetch the correct ones. Advanced branch prediction algorithms use historical data and various heuristics to improve accuracy.
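
One classic way to use branch history is a two-bit saturating counter per branch. The sketch below is a textbook-style illustration, not the predictor of any particular processor; the class name, the starting state, and the branch address are assumptions.

```python
class TwoBitPredictor:
    """Per-branch 2-bit saturating counter: states 0-1 predict not taken, 2-3 taken."""

    def __init__(self):
        self.counters = {}  # branch address -> counter state (0..3)

    def predict(self, pc):
        # Assumption: unseen branches start in state 1 (weakly not taken).
        return self.counters.get(pc, 1) >= 2

    def update(self, pc, taken):
        state = self.counters.get(pc, 1)
        self.counters[pc] = min(3, state + 1) if taken else max(0, state - 1)

def accuracy(outcomes, pc=0x40):
    """Fraction of correct predictions for one branch over a list of outcomes."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict(pc) == taken:
            correct += 1
        predictor.update(pc, taken)
    return correct / len(outcomes)

# A loop branch taken nine times and then falling through once.
print(accuracy([True] * 9 + [False]))
```

For this loop pattern the predictor misses only the first iteration and the final exit, which is why loop-heavy code tends to predict well.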

Q 5. How does out-of-order execution improve performance?

Out-of-order execution allows the CPU to execute instructions in an order different from the program’s sequential order. It exploits instruction-level parallelism (ILP) by executing instructions as soon as their operands are available, regardless of their position in the instruction stream. It’s like preparing multiple parts of a meal concurrently instead of strictly one step at a time.

For instance, if instruction 2 depends on the result of instruction 1, but instruction 3 is independent, an in-order pipeline holds instruction 3 behind the stalled instruction 2, whereas out-of-order execution can run instruction 3 while instruction 2 waits for instruction 1 to complete. This avoids idle time and improves performance, especially when long-latency operations such as cache misses are followed by independent work.
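
The effect can be estimated with a toy timing model. This sketch assumes one instruction is fetched per cycle, unlimited execution units, and made-up latencies; the instruction names and both functions are hypothetical.

```python
def in_order_finish(instrs):
    """instrs: list of (name, latency, deps). Instructions start strictly in program order."""
    done = {}
    next_start = 0
    for name, latency, deps in instrs:
        ready = max([done[d] for d in deps], default=0)
        start = max(next_start, ready)
        done[name] = start + latency
        next_start = start + 1  # younger instructions cannot start earlier
    return max(done.values())

def out_of_order_finish(instrs):
    """Each instruction starts as soon as it is fetched and its operands are ready."""
    done = {}
    for fetch_cycle, (name, latency, deps) in enumerate(instrs):
        ready = max([done[d] for d in deps], default=0)
        start = max(fetch_cycle, ready)
        done[name] = start + latency
    return max(done.values())

program = [
    ("load", 10, []),       # slow load, e.g. a cache miss (latency is an assumption)
    ("use", 1, ["load"]),   # must wait for the load
    ("add", 1, []),         # independent of the load
    ("mul", 1, ["add"]),    # depends only on add
]
print(in_order_finish(program), out_of_order_finish(program))
```

In the in-order model the independent `add` and `mul` queue up behind the stalled `use`; in the out-of-order model they complete while the load is still outstanding.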

Q 6. What are the advantages and disadvantages of superscalar processors?

Superscalar processors can execute multiple instructions simultaneously using multiple execution units. This increases instruction throughput, leading to higher performance.

- Advantages: Higher performance, better utilization of resources, improved throughput.

- Disadvantages: Increased complexity in design and manufacturing, higher power consumption, potential for increased complexity in instruction scheduling and resource allocation, potentially more expensive.

The effectiveness of superscalar processors is highly dependent on the ability to find and exploit instruction-level parallelism (ILP) in the program. Complex instruction scheduling and resource allocation are crucial for efficient utilization of multiple execution units.

Q 7. Explain the concept of Instruction Level Parallelism (ILP).

Instruction-level parallelism (ILP) refers to the ability to execute multiple instructions simultaneously or concurrently. This parallelism can be exploited by techniques like pipelining, superscalar execution, and out-of-order execution. It’s like having multiple cooks work on different parts of a meal at the same time to finish it quicker.

High ILP in a program means that there are many opportunities for parallel execution. Compilers and CPU architectures employ various techniques to detect and exploit ILP, significantly improving performance. However, the degree of ILP varies depending on the program and its structure. Programs with many data dependencies often have lower ILP than those with many independent instructions.

Q 8. Describe different types of memory hierarchies and their access times.

Memory hierarchy is a layered structure of storage devices in a computer system, organized by speed and cost. Faster, more expensive memory resides closer to the CPU, while slower, cheaper memory is further away. This hierarchy aims to provide fast access to frequently used data while maintaining sufficient storage capacity.

- Registers: The fastest memory, located directly within the CPU. They hold data actively being processed. Access time is typically a single clock cycle.

- Cache: Small, fast memory located between the CPU and main memory. It’s organized into levels (L1, L2, L3), with L1 being the fastest and smallest, and L3 being the slowest and largest. Access times range from a few clock cycles (L1) to tens of clock cycles (L3).

- Main Memory (RAM): Larger and slower than cache, it holds the currently running programs and data. Access times are in the tens to hundreds of nanoseconds.

- Secondary Storage (Hard Disk Drive/Solid State Drive): The slowest and largest level, used for long-term storage. Access times range from tens of microseconds for SSDs to several milliseconds or more for hard disk drives, which is orders of magnitude slower than main memory.

Imagine a library: Registers are like the books you’re actively reading on your desk, cache is your bookshelf with frequently accessed books, main memory is the entire library, and secondary storage is the vast national archive.
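
The benefit of the hierarchy is often summarized by the average memory access time: hit time plus miss rate times miss penalty, applied level by level. The sketch below uses invented hit times and miss rates purely for illustration; the function name is an assumption.

```python
def average_access_time(levels, memory_latency):
    """levels: list of (hit_time_cycles, miss_rate), ordered from L1 outward.

    Returns the average access time in cycles, computed from the last
    cache level back toward the CPU.
    """
    penalty = memory_latency
    for hit_time, miss_rate in reversed(levels):
        # A miss at this level pays the average cost of the next level down.
        penalty = hit_time + miss_rate * penalty
    return penalty

# Illustrative numbers only: L1 = 4 cycles with 12.5% misses,
# L2 = 10 cycles with 50% misses, main memory = 200 cycles.
print(average_access_time([(4, 0.125), (10, 0.5)], 200))
```

With these numbers the average access costs 17.75 cycles rather than 200, even though most of the capacity sits in the slow level.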

Q 9. What are the challenges in designing high-performance memory systems?

Designing high-performance memory systems presents several significant challenges:

- Latency: The time it takes to access data is a major bottleneck. Reducing latency requires faster memory technologies, optimized memory controllers, and efficient memory hierarchy management.

- Bandwidth: The amount of data that can be transferred per unit of time is crucial. Improving bandwidth involves wider memory buses, faster data transfer rates, and parallel access techniques.

- Power Consumption: High-speed memory components consume significant power. Minimizing power consumption requires careful design of memory circuits, low-power memory technologies, and efficient power management techniques.

- Cost: Faster and higher-capacity memory is expensive. Balancing performance, cost, and power consumption is a key design trade-off.

- Scalability: As processors become more powerful, the demand for memory bandwidth and capacity increases. Designing memory systems that can scale to meet future demands is critical.

For example, consider the challenge of designing memory for a high-performance gaming console. The console needs fast access to textures and game data, requiring high bandwidth and low latency, all while keeping power consumption under control to prevent overheating.

Q 10. Explain the concept of virtual memory and its benefits.

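Virtual memory is a memory management technique that gives each program the illusion of a large, private memory, potentially larger than the physical memory (RAM) actually available. It achieves this by mapping virtual addresses onto physical memory and using a portion of secondary storage (a hard drive or SSD) as an extension of RAM. This ‘virtual address space’ can be much larger than the physical address space. Its main benefits are: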

- Larger Address Space: Allows programs larger than available RAM to run.

- Memory Protection: Isolates processes from each other, preventing one program from corrupting another’s memory.

- Efficient Memory Usage: Only loads necessary parts of a program into RAM, freeing up space for other tasks.

Think of it as a massive library catalog. The catalog (virtual memory) lists all the books, even those not currently on the shelves (physical RAM). When you need a book, the librarian (the operating system) retrieves it from storage (hard drive).

Q 11. How does paging work in virtual memory management?

Paging is a memory management scheme used in virtual memory. The virtual address space and physical memory are divided into fixed-size blocks called ‘pages’ and ‘page frames’, respectively. Each page that is resident in memory is mapped to a page frame of the same size in physical memory.

When a program needs to access a specific memory location (a virtual address), the system translates the virtual address into a physical address using a ‘page table’. If the required page is in RAM (in a page frame), the access is fast. If not (a ‘page fault’), the operating system loads the page from the secondary storage into a free page frame in RAM.

Virtual Address -> Page Table -> Physical Address

This process ensures that only the necessary parts of a program reside in RAM at any given time, improving efficiency.
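
The translation step can be sketched as follows. This is a simplified single-level page table held in a dictionary, assuming 4 KiB pages; real systems use multi-level tables and a TLB, and the names here are illustrative.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

class PageFault(Exception):
    """Raised when the requested page is not resident in physical memory."""

def translate(virtual_address, page_table, page_size=PAGE_SIZE):
    """Translate a virtual address using a {page_number: frame_number} table."""
    page_number, offset = divmod(virtual_address, page_size)
    frame_number = page_table.get(page_number)
    if frame_number is None:
        # In a real system the OS would now load the page from secondary storage.
        raise PageFault(page_number)
    # The offset within the page is unchanged by translation.
    return frame_number * page_size + offset

page_table = {0: 5, 1: 2}  # hypothetical mapping
print(hex(translate(0x1234, page_table)))  # page 1, offset 0x234 -> frame 2
```

Only the page number is translated; the offset is carried over unchanged, which is why pages and frames must be the same size.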

Q 12. Describe different cache coherence protocols.

Cache coherence protocols ensure that multiple processors or cores in a multiprocessor system have a consistent view of shared data, even though each may hold its own copy in a private cache. Inconsistencies can lead to data corruption and unpredictable behavior.

- Snooping Protocols: Each cache monitors (snoops) the memory bus for write operations performed by other caches. If a cache detects a write to a data block it also holds, it invalidates its copy or updates it.

- Directory-Based Protocols: A central directory keeps track of which caches hold copies of each cache block. When a write occurs, the directory informs the relevant caches to update or invalidate their copies.

Snooping is simpler to implement but doesn’t scale well to large systems. Directory-based protocols offer better scalability but are more complex.

Imagine a shared whiteboard in a collaborative office. Snooping is like everyone watching each other to see if someone changes something on the board. Directory-based is like having a central coordinator who announces changes.
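
A write-invalidate snooping scheme can be modeled in a few lines. This is a deliberately simplified sketch: caches are write-through, there are no per-line states such as those in MESI, and the class names are assumptions.

```python
class Bus:
    """Shared bus that every cache snoops; also holds main memory for simplicity."""

    def __init__(self):
        self.caches = []
        self.memory = {}

    def broadcast_invalidate(self, source, addr):
        # Every other cache sees the write on the bus and drops its copy.
        for cache in self.caches:
            if cache is not source:
                cache.lines.pop(addr, None)

class SnoopingCache:
    def __init__(self, bus):
        self.lines = {}  # addr -> cached value
        self.bus = bus
        bus.caches.append(self)

    def read(self, addr):
        if addr not in self.lines:  # miss: fetch from memory
            self.lines[addr] = self.bus.memory.get(addr, 0)
        return self.lines[addr]

    def write(self, addr, value):
        self.bus.broadcast_invalidate(self, addr)
        self.lines[addr] = value
        self.bus.memory[addr] = value  # write-through keeps memory current

bus = Bus()
core0, core1 = SnoopingCache(bus), SnoopingCache(bus)
bus.memory[0x10] = 1
core0.read(0x10); core1.read(0x10)   # both caches now hold a copy
core0.write(0x10, 7)                 # core1's copy is invalidated
print(core1.read(0x10))              # core1 misses and re-reads the new value
```

Because every write is broadcast to every cache, bus traffic grows with the number of cores, which is the scaling limit mentioned above.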

Q 13. What are the different types of interrupts and how are they handled?

Interrupts are signals that temporarily halt the normal execution of a program to handle an event requiring immediate attention. They are crucial for handling asynchronous events.

- Hardware Interrupts: Generated by hardware devices like keyboards, mice, or network cards. For example, pressing a key generates a hardware interrupt.

- Software Interrupts: Generated deliberately by software instructions, most often to request operating system services (system calls).

- Exception Interrupts: Triggered by exceptional events detected within the CPU while executing an instruction, like division by zero, arithmetic overflow, or a page fault.

When an interrupt occurs, the CPU saves the current state of the executing program, determines the source of the interrupt, and executes an interrupt handler routine to address the event. Once the handler completes, the CPU restores the saved state and resumes the interrupted program.

Q 14. Explain the concept of pipelining hazards and how they are resolved.

Pipelining in a processor improves instruction execution speed by overlapping the execution of multiple instructions. However, certain situations, called hazards, can disrupt the pipeline’s smooth flow.

- Data Hazards: Occur when an instruction needs data that has not yet been produced by a preceding instruction. For example, an add that reads a register must wait until the earlier instruction that computes that register’s value has produced it.

- Control Hazards: Arise from branch instructions (like ‘if’ statements) where the next instruction to execute isn’t known until the branch condition is evaluated.

- Structural Hazards: Occur when two instructions require the same hardware resource simultaneously.

Hazards are resolved through techniques like:

- Data Forwarding: Moving data directly from one pipeline stage to another to avoid delays.

- Stalling (or Bubble Insertion): Inserting idle cycles (bubbles) into the pipeline to allow dependent instructions to complete.

- Branch Prediction: Speculatively executing instructions along the predicted path of a branch instruction. If the prediction is incorrect, the pipeline needs to be flushed.

Imagine an assembly line. Data hazards are like a worker needing a part that hasn’t been built yet. Control hazards are like a worker needing to wait for instructions on which part to build next. Structural hazards are like two workers trying to use the same tool simultaneously. Techniques to handle hazards would be similar to having alternate tools, having a buffer of parts, or having a plan for which part to build next.
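
The interplay of forwarding and stalling for a data hazard can be sketched numerically. The function below assumes the classic five-stage pipeline (IF, ID, EX, MEM, WB), a register file that is written in the first half of a cycle and read in the second half, and full forwarding paths when forwarding is enabled; the function name is hypothetical.

```python
def raw_stalls(distance, producer_is_load=False, forwarding=True):
    """Stall cycles for a read-after-write dependence.

    distance: how many instructions after the producer the consumer appears
              (1 means immediately after).
    """
    if forwarding:
        # ALU results are forwarded straight into the next EX stage.
        # A load's data only exists after MEM, so an immediate use waits one cycle.
        return 1 if (producer_is_load and distance == 1) else 0
    # Without forwarding the consumer's ID stage must line up with the
    # producer's WB stage, which is three stages later.
    return max(0, 3 - distance)

for d in (1, 2, 3):
    print(d, raw_stalls(d, forwarding=False), raw_stalls(d, producer_is_load=True))
```

Under these assumptions forwarding removes the stalls for ALU-to-ALU dependences entirely and leaves only the single load-use bubble.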

Q 15. What are the different types of instruction sets (e.g., RISC, CISC)?

Instruction set architectures (ISAs) define the set of instructions a processor understands. Two primary types are RISC (Reduced Instruction Set Computing) and CISC (Complex Instruction Set Computing).

- RISC: Emphasizes a smaller, simpler set of instructions, each executed quickly. This leads to efficient pipelining and simpler hardware. Examples include ARM and MIPS architectures commonly found in smartphones and embedded systems.

- CISC: Features a larger, more complex set of instructions, with some instructions performing multiple operations. This can lead to potentially shorter programs but often requires more complex and slower hardware. The x86 architecture used in most personal computers is a classic example of CISC.

Other less common approaches include VLIW (Very Long Instruction Word), which packs multiple independent operations into a single long instruction scheduled by the compiler, and EPIC (Explicitly Parallel Instruction Computing), in which the compiler explicitly marks which instructions can execute in parallel.

Q 16. Discuss the impact of different instruction set architectures on performance.

The impact of ISA on performance is significant. RISC’s simplicity allows for faster clock speeds, efficient pipelining, and simpler hardware, leading to generally faster execution of individual instructions. However, because RISC instructions are simpler, more instructions might be needed to perform a given task compared to CISC.

CISC’s complex instructions can reduce the number of instructions needed for a task, potentially leading to smaller program sizes. But the complexity of the instructions necessitates more complex hardware, leading to potentially slower clock speeds and more difficult pipelining.

The choice between RISC and CISC involves a trade-off between instruction count and clock speed. Modern processors often incorporate techniques from both approaches, for example, using techniques like micro-operations to break down CISC instructions into smaller, simpler RISC-like steps.

Q 17. Explain the concept of Amdahl’s Law and its relevance to parallel processing.

Amdahl’s Law states that the overall performance improvement achievable by applying a specific optimization is limited by the fraction of the system that can benefit from that optimization.

Imagine you have a program where 90% of its execution time is spent on a part that can be parallelized, and 10% is sequential. Even if you could make the parallelizable part infinitely fast, the overall program speedup would be limited to a factor of 10 (1 / (0.1 + 0.9/∞) ≈ 10). This highlights the importance of minimizing the sequential portion of a program to achieve significant performance gains through parallelization.

In parallel processing, Amdahl’s Law emphasizes that even with many cores, the speedup is bounded by the serial portion. To maximize the benefits of parallel processing, the algorithm and application design must minimize the sequential parts.
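
The law is easy to evaluate directly: speedup = 1 / ((1 - p) + p / n), where p is the parallelizable fraction and n the number of workers. The sketch below applies it to the 90% example; the function name is an assumption.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Overall speedup when parallel_fraction of the work is spread over `workers`."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)

# 90% parallelizable work on an increasing number of cores.
for n in (2, 10, 100, float("inf")):
    print(n, round(amdahl_speedup(0.9, n), 2))
```

Even 100 cores yield a speedup of less than 10, because the 10% serial portion dominates once the parallel part becomes small.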

Q 18. What are the challenges in designing multi-core processors?

Designing multi-core processors presents several challenges:

- Inter-core communication: Efficiently sharing data and coordinating operations between cores is crucial. Poor communication can create bottlenecks, negating the benefits of multiple cores. On-chip interconnects like ring buses or mesh networks are critical design considerations.

- Power consumption: Increasing the number of cores significantly increases power consumption, leading to heat dissipation problems and reduced battery life in mobile devices. Power management strategies are essential.

- Cache coherence: Ensuring that all cores see a consistent view of data stored in the cache memory is crucial. Protocols like MESI (Modified, Exclusive, Shared, Invalid) are used to maintain cache coherence, but they add complexity and overhead.

- Thermal management: Higher core counts generate more heat, demanding sophisticated cooling solutions to prevent overheating and performance throttling.

- Software development: Writing parallel programs that effectively utilize multiple cores is significantly more challenging than writing single-threaded programs. Programmers need to be mindful of concurrency issues, synchronization, and data dependencies.

Q 19. Explain different techniques for power management in processors.

Power management in processors is critical for extending battery life and reducing heat generation. Techniques include:

- Clock gating: Turning off clock signals to inactive parts of the processor to reduce power consumption.

- Voltage scaling: Reducing the supply voltage to decrease power consumption, though this usually results in a reduced clock speed.

- Dynamic voltage and frequency scaling (DVFS): Adjusting both voltage and frequency dynamically based on workload demands, balancing performance and power consumption.

- Power gating: Completely shutting down parts of the processor that are not needed.

- Thermal throttling: Reducing clock speed or voltage when the processor temperature exceeds a certain threshold to prevent overheating.

Many modern processors employ a combination of these techniques, often adapting dynamically to changing workloads.
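
Why scaling voltage together with frequency pays off follows from the standard approximation that dynamic power is proportional to capacitance times voltage squared times frequency. The sketch below uses that approximation and ignores static (leakage) power; the function name and the 80% operating point are illustrative assumptions.

```python
def relative_dynamic_power(voltage_scale, frequency_scale):
    """Dynamic power relative to the baseline, using P proportional to V^2 * f.

    Both arguments are fractions of the baseline value (1.0 = unchanged).
    Leakage power is ignored in this simplified model.
    """
    return voltage_scale ** 2 * frequency_scale

# Lowering frequency alone to 80% versus lowering voltage and frequency to 80%.
print(relative_dynamic_power(1.0, 0.8))
print(relative_dynamic_power(0.8, 0.8))
```

Reducing frequency alone saves power roughly linearly, while reducing voltage along with it cuts dynamic power to about half in this example, which is the motivation for DVFS.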

Q 20. Describe different methods for improving processor performance.

Improving processor performance involves various strategies:

- Increasing clock speed: A straightforward approach, but it’s limited by power consumption and heat dissipation.

- Improving instruction-level parallelism (ILP): Techniques like pipelining, superscalar execution, and out-of-order execution can allow multiple instructions to be processed concurrently.

- Using larger caches: Reducing memory access time by storing frequently accessed data in faster on-chip caches.

- Improving branch prediction: Accurately predicting the outcome of branches can avoid pipeline stalls.

- Employing multi-core architectures: Distributing the workload across multiple cores to improve overall throughput.

- Using specialized hardware units: Incorporating dedicated units for specific tasks, like vector processing or floating-point arithmetic, to accelerate performance.

The most effective approach often involves a combination of these techniques.

Q 21. What are the trade-offs between performance and power consumption in processor design?

There’s an inherent trade-off between performance and power consumption in processor design. Higher clock speeds and more complex architectures generally lead to better performance but significantly increase power consumption and heat generation. This trade-off necessitates careful design choices, often involving compromises. For example, a high-performance server processor might prioritize performance even if it consumes more power, while a mobile processor needs to balance performance with battery life, demanding aggressive power-saving techniques.

Designers use various techniques like DVFS to dynamically adjust performance and power consumption according to the workload. The optimal balance depends heavily on the target application and the constraints of the system.

Q 22. Explain the concept of thermal design power (TDP).

Thermal Design Power (TDP) is the amount of heat, in watts, that a processor is expected to dissipate under a sustained, manufacturer-defined workload. It’s a crucial metric for system designers because it determines the necessary cooling solution (e.g., heatsink, fan, liquid cooling). Think of it like the recommended wattage for a light fixture: a cooler rated below the processor’s TDP risks overheating and thermal throttling. A processor with a TDP of 65W needs a cooling system capable of dissipating at least 65 watts of heat. Manufacturers test processors under specific workloads to determine this value, so it is not necessarily the peak power draw, and it influences the overall system design, affecting factors like case size, fan noise, and power supply requirements. For example, a high-end gaming PC with a 200W TDP processor needs a far more robust cooling setup than a low-power embedded system with a 5W TDP processor.

Q 23. How does the design of a processor affect its security?

Processor design significantly impacts its security. Vulnerabilities often arise from design flaws or unintended consequences of architectural choices. For instance, a processor design that lacks robust memory protection mechanisms might allow malicious code to overwrite critical system areas. The infamous Spectre and Meltdown vulnerabilities exploited flaws in branch prediction and speculative execution, functionalities designed for performance optimization but susceptible to attacks. Similarly, a processor’s instruction set and microarchitectural features directly influence what attacks are possible. A processor with weak encryption primitives or insufficient isolation between different processes is more vulnerable to exploits. Secure processor design requires a multi-faceted approach, carefully considering not just performance but also security aspects at every stage of the design process.

Q 24. Discuss various techniques used for security in processor design.

Numerous techniques enhance processor security. These include:

- Memory Protection Units (MPUs): MPUs provide hardware-enforced memory access control, preventing unauthorized code from accessing sensitive memory regions. This is a fundamental aspect of securing operating systems.

- Secure Boot: Ensures that only authorized code is loaded during the boot process, preventing malware from gaining early control of the system.

- Trusted Execution Environments (TEEs): TEEs create isolated, secure areas within the processor where sensitive code can execute without fear of compromise from other processes, even malicious ones (e.g., Intel SGX, AMD SEV).

- Hardware-assisted virtualization: Isolates multiple virtual machines from each other and from the hypervisor, improving security in virtualized environments.

- Advanced encryption capabilities: Integrating dedicated hardware support for cryptographic operations speeds up encryption/decryption, and can safeguard cryptographic keys better than relying on solely software-based implementations.

- Side-channel attack mitigation: Reducing or eliminating information leakage through side channels like power consumption or electromagnetic emissions is crucial in preventing sophisticated attacks.

Many modern processors employ a combination of these techniques to create a layered security approach. No single method provides complete security, but layering defenses significantly increases the difficulty for attackers.

Q 25. Describe the role of a memory controller in a processor system.

The memory controller is a crucial component within or closely coupled with a processor, managing communication between the CPU and main memory (RAM). It acts as a bridge, optimizing data transfer to maximize system performance. Its functions include:

- Managing memory access: The controller arbitrates requests from the CPU for reading or writing data to memory.

- Error detection and correction: It often implements error-checking mechanisms (like parity or ECC) to ensure data integrity.

- Memory timing control: It coordinates the timing of memory operations to achieve optimal performance.

- Memory bandwidth management: The controller works to efficiently utilize available memory bandwidth by scheduling and prioritizing memory requests.

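- Address mapping and refresh: The controller maps physical addresses onto DRAM channels, banks, rows, and columns and issues the periodic refresh commands DRAM requires. Allocating memory to processes is the operating system’s job rather than the controller’s.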

A well-designed memory controller is vital for overall system responsiveness and throughput, particularly in memory-intensive applications like databases or scientific computing. Poor memory controller design can lead to significant performance bottlenecks.

Q 26. Explain the concept of cache replacement policies (LRU, FIFO, etc.).

Cache replacement policies determine which cache line to evict when a new line needs to be brought in and the cache is full. Several common policies exist:

- Least Recently Used (LRU): Evicts the cache line that hasn’t been accessed for the longest time. This generally performs well as it assumes recently accessed data is more likely to be accessed again.

- First-In, First-Out (FIFO): Evicts the oldest cache line regardless of its recent usage. Simple to implement, but it can perform poorly because a frequently reused line is evicted simply because it was loaded early.

- Random Replacement (RR): Evicts a randomly chosen cache line. Simple but often less efficient than LRU or other sophisticated policies.

- Pseudo-LRU (PLRU): Approximates LRU using a smaller, more efficient data structure. This is a common compromise between performance and complexity.

The choice of policy affects cache hit rates and thus impacts overall system performance. LRU is often preferred for its effectiveness, though more complex policies, sometimes incorporating hints from the hardware or software, can offer further improvements.
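
The difference between LRU and FIFO shows up even on a tiny trace. The sketch below simulates a fully associative cache with a hypothetical capacity of two blocks; the function name and the access trace are invented for illustration.

```python
from collections import OrderedDict

def count_hits(accesses, capacity, policy="LRU"):
    """Count cache hits for a fully associative cache under LRU or FIFO."""
    cache = OrderedDict()  # oldest entry first
    hits = 0
    for block in accesses:
        if block in cache:
            hits += 1
            if policy == "LRU":
                cache.move_to_end(block)  # refresh recency; FIFO leaves order alone
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the entry at the front
            cache[block] = True
    return hits

trace = list("ABACADA")  # block A is reused constantly
print(count_hits(trace, 2, "LRU"), count_hits(trace, 2, "FIFO"))
```

On this trace LRU scores three hits and FIFO two: FIFO evicts block A because it was loaded first, even though it is the most frequently reused block.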

Q 27. What are the benefits and drawbacks of using a SIMD instruction set?

Single Instruction, Multiple Data (SIMD) instruction sets allow a single instruction to operate on multiple data elements simultaneously. This significantly speeds up computations involving arrays or vectors. For example, adding two arrays element-by-element can be dramatically faster with SIMD.

Benefits:

- Performance improvement: Significant speedup for parallel computations.

- Improved energy efficiency (often): Performing multiple operations with a single instruction can be more energy-efficient than performing multiple scalar instructions.

Drawbacks:

- Programming complexity: Requires specialized programming techniques to effectively utilize SIMD instructions. Not all algorithms are easily parallelizable.

- Limited applicability: Not all types of computation benefit from SIMD. Sequential operations or those with complex dependencies might not see much improvement.

- Portability concerns: Code optimized for one SIMD architecture might not be easily portable to another.

SIMD is highly beneficial for applications like image processing, video encoding/decoding, scientific simulations, and machine learning where massive parallel computations are common. However, careful consideration is needed to determine its suitability for a given application, weighing performance gains against programming complexity and potential portability issues.

Q 28. Explain the difference between hardware and software solutions for exception handling.

Exception handling, the process of responding to exceptional events (e.g., division by zero, memory access violation), can be implemented in hardware or software.

Hardware solutions: The processor itself directly detects and handles exceptions. When an exception occurs, the hardware immediately interrupts the current execution flow, saves the processor state, and jumps to a predefined exception handler routine. This is usually fast, but the types of exceptions handled are limited to those detected by the hardware. Examples include divide-by-zero exceptions or page faults.

Software solutions: The operating system or application code is responsible for handling exceptions. Software solutions can handle a wider range of exceptions than hardware, but they incur the overhead of context switching and potential performance penalties. They might trap certain conditions and execute user-defined exception handling code. This approach is more flexible but slower than hardware-based exception handling.

In practice, most systems employ a combination of hardware and software. Hardware quickly detects critical exceptions, while software handles less critical or more application-specific exceptions.

Key Topics to Learn for Processor Architecture Interview

- Instruction Set Architectures (ISA): Understanding different ISA types (RISC, CISC), their trade-offs, and how they impact performance and power consumption. Consider exploring specific ISAs like ARM or x86.

- Pipelines and Parallelism: Mastering concepts like instruction pipelining, superscalar execution, and out-of-order execution. Understand how these techniques improve performance and the challenges they present (e.g., hazards).

- Memory Hierarchy: Deep dive into caches (L1, L2, L3), virtual memory, and their impact on program performance. Be prepared to discuss cache replacement policies and memory management techniques.

- Cache Coherence and Consistency: Understand the challenges of maintaining data consistency in multi-core processors and the various protocols used to address these challenges (e.g., MESI protocol).

- Multi-core Architectures: Explore different multi-core designs, inter-core communication mechanisms, and the challenges of parallel programming. Consider concepts like NUMA and SMP architectures.

- Power Management Techniques: Discuss low-power design techniques and their importance in modern processors. Understand the trade-offs between performance and power consumption.

- Input/Output (I/O) Systems: Familiarize yourself with different I/O mechanisms, DMA, and their impact on overall system performance.

- Performance Evaluation and Benchmarking: Understand different performance metrics and how to analyze and interpret benchmark results. This is crucial for practical application of theoretical knowledge.
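As a starting point for the performance metrics mentioned in the last item, the classic CPU performance equation relates execution time to instruction count, cycles per instruction (CPI), and clock rate. The sketch below applies it to two made-up designs; the function name and all the numbers are assumptions chosen for illustration, not measurements of any real processor.

```python
def cpu_time_seconds(instruction_count: float, cpi: float, clock_hz: float) -> float:
    """CPU time = instruction count * cycles per instruction / clock rate."""
    return instruction_count * cpi / clock_hz


# Hypothetical workload and designs (illustrative numbers only).
instructions = 2_000_000_000

time_a = cpu_time_seconds(instructions, cpi=1.5, clock_hz=3.0e9)  # faster clock, higher CPI
time_b = cpu_time_seconds(instructions, cpi=1.0, clock_hz=2.5e9)  # slower clock, lower CPI

print(f"Design A: {time_a:.2f} s")
print(f"Design B: {time_b:.2f} s")
print(f"B is about {time_a / time_b:.2f}x faster than A")
```

With these assumed numbers, the design with the lower clock rate finishes first because its lower CPI more than compensates, which is why clock frequency alone is a poor basis for comparing processors.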

Next Steps

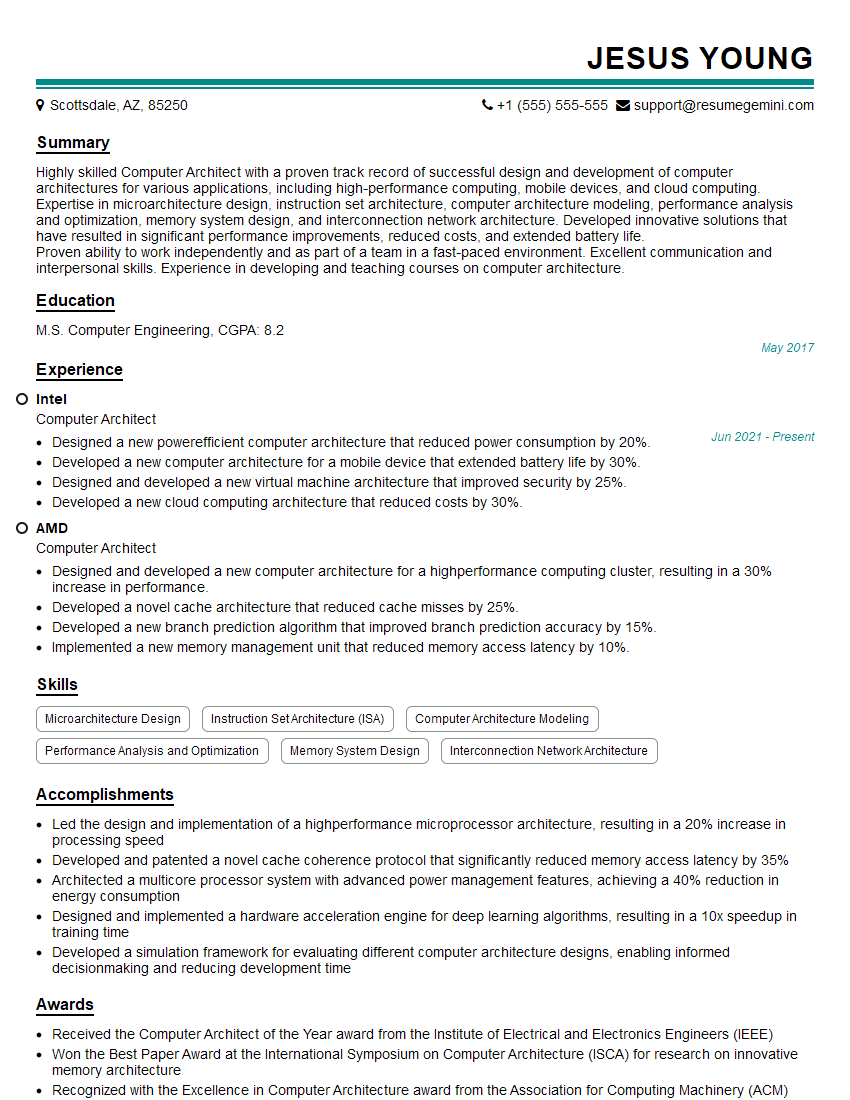

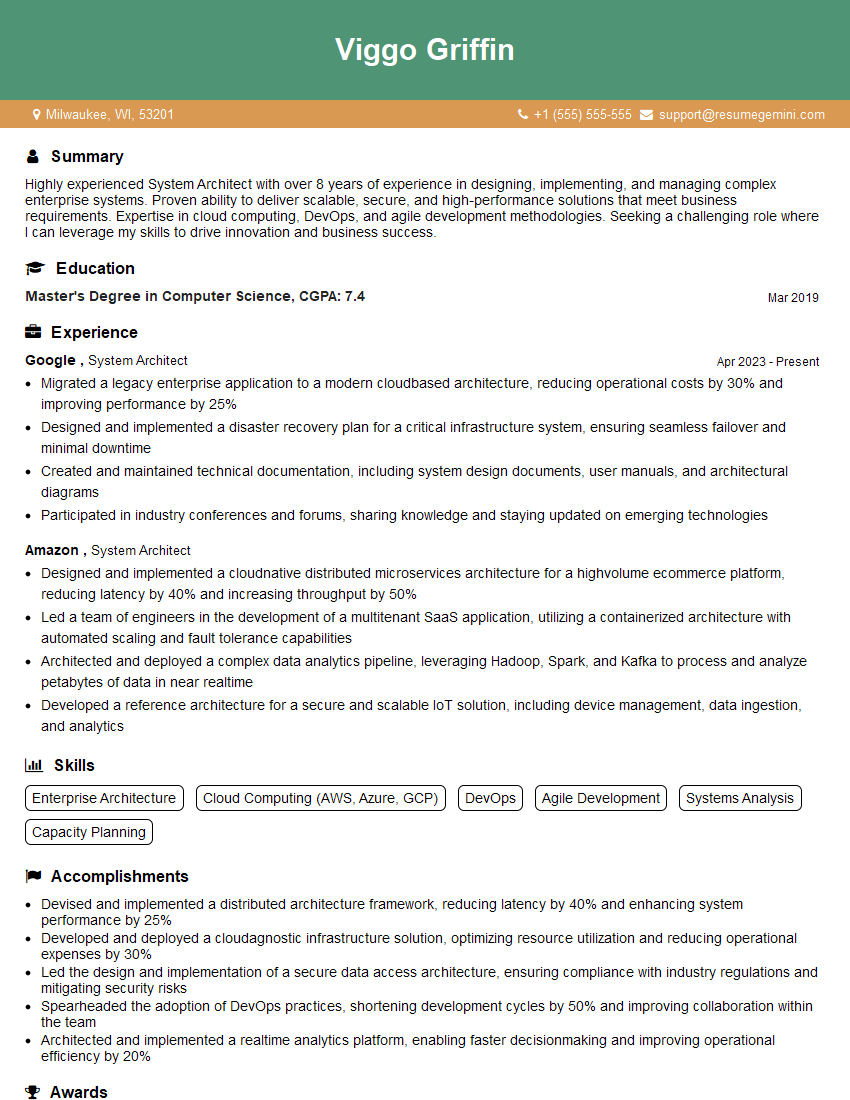

Mastering processor architecture is crucial for a successful career in high-performance computing, embedded systems, and many other related fields. A strong understanding of these concepts will significantly enhance your problem-solving abilities and technical expertise. To increase your chances of landing your dream role, it’s vital to present your skills effectively. Creating an ATS-friendly resume is key. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your expertise in Processor Architecture. Examples of resumes tailored to this specialization are available to help you get started. Take the next step towards your career goals today!
