Every successful interview starts with knowing what to expect. In this blog, we’ll take you through the top interview questions on proficiency in Python and other programming languages for scripting and automation, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in Python and Other Programming Languages for Scripting and Automation Interviews

Q 1. Explain the difference between `==` and `is` in Python.

In Python, both == and is are used for comparison, but they operate differently. == checks for equality in value, while is checks for equality in object identity (memory location).

Think of it like this: == asks, “Do these things have the same contents?” is asks, “Are these things the *exact same thing* in memory?”

Example:

    list1 = [1, 2, 3]
    list2 = [1, 2, 3]
    list3 = list1

    print(list1 == list2)  # Output: True (same values)
    print(list1 is list2)  # Output: False (different objects)
    print(list1 is list3)  # Output: True (same object)

The first comparison using == returns True because both lists contain the same elements. However, is returns False because they are distinct objects residing in separate memory locations. The last comparison shows that list1 and list3, which point to the same object, evaluate to True with is.

In practice, you’ll usually use == for comparing values and reserve is for identity checks: testing against singletons such as None (if x is None), or confirming that two variables refer to the very same object. Avoid is for comparing integers or strings. CPython caches some small integers and strings, so is may appear to work on them, but that is an implementation detail and not something to rely on.
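As a minimal sketch of the identity check described above, the snippet below uses `is None` to detect a missing argument. The function name `describe` is hypothetical and only serves the illustration.

```python
def describe(value=None):
    """Return a label, using an identity check to detect a missing argument."""
    # `is None` asks "is this the None singleton?"; `== None` could be
    # fooled by a class that overrides __eq__.
    if value is None:
        return "no value"
    return f"value: {value!r}"


a = [1, 2, 3]
b = list(a)          # a new list with equal contents

print(a == b)        # True: same contents
print(a is b)        # False: two distinct objects
print(describe())    # no value
print(describe(0))   # value: 0
```

Note that `describe(0)` is not treated as missing: an identity check against None does not confuse falsy values such as 0 or an empty string with "no value".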

Q 2. What are list comprehensions and how are they used?

List comprehensions offer a concise way to create lists in Python. They’re essentially shorthand for a for loop combined with conditional logic, all within a single line of code. This improves readability and reduces code verbosity.

Basic Structure:

    new_list = [expression for item in iterable if condition]

Where:

- expression is what’s applied to each item.

- item represents each element in the iterable.

- iterable is the source sequence (e.g., a list, tuple, or range).

- condition (optional) filters items included in the new list.

Example: Let’s say we want to create a list of the squares of even numbers from 1 to 10:

    even_squares = [x**2 for x in range(1, 11) if x % 2 == 0]
    print(even_squares)  # Output: [4, 16, 36, 64, 100]

This single line replaces a longer, equivalent for loop.

Real-world Application: List comprehensions are incredibly useful in data processing tasks, where you might need to filter, transform, and create new lists from existing datasets quickly and efficiently. For example, you could use them to clean up data, extract specific attributes, or prepare data for visualization or machine learning tasks.
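To make the data-cleaning use case concrete, here is a small sketch that writes the same transformation twice: once as an explicit loop and once as a comprehension. The sample list `raw_names` is made up for the illustration.

```python
raw_names = ["  Alice ", "", "BOB", "  ", "carol"]

# Loop version: strip whitespace, skip blanks, normalise capitalisation.
cleaned_loop = []
for name in raw_names:
    stripped = name.strip()
    if stripped:
        cleaned_loop.append(stripped.title())

# Equivalent list comprehension.
cleaned = [name.strip().title() for name in raw_names if name.strip()]

print(cleaned)  # ['Alice', 'Bob', 'Carol']
```

The comprehension calls strip() twice per item, which is a fair trade for short inputs; for heavier transformations the explicit loop may be the more readable choice.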

Q 3. Describe different ways to handle exceptions in Python.

Python offers robust exception handling mechanisms to gracefully manage errors. The core structure uses try, except, else, and finally blocks.

1. Basic try-except: This handles a specific exception type.

        try:
            # Code that might raise an exception
            result = 10 / 0
        except ZeroDivisionError:
            print("Cannot divide by zero!")

2. Handling Multiple Exceptions: You can handle different exceptions separately.

        try:
            file = open("myfile.txt", "r")
            # ... file operations ...
        except FileNotFoundError:
            print("File not found.")
        except IOError as e:
            print(f"An I/O error occurred: {e}")

3. else Block: The else block executes if no exception occurs in the try block.

        try:
            x = int(input("Enter a number: "))
        except ValueError:
            print("Invalid input.")
        else:
            print(f"You entered: {x}")

4. finally Block: The finally block always executes, regardless of whether an exception occurred. This is ideal for cleanup actions (like closing files). Open the file before the try block so that file is always defined when finally runs.

        file = open("myfile.txt", "r")
        try:
            data = file.read()
            # ... file operations ...
        finally:
            file.close()  # Ensures the file is closed even if an error occurred

5. Custom Exceptions: You can define your own exception classes to handle specific application errors.

        class InvalidInputError(Exception):
            pass

        # ...

Effective exception handling is crucial for writing robust and reliable Python applications. It prevents unexpected crashes, provides informative error messages, and allows the program to recover gracefully or at least log the error for later debugging.
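The custom exception above becomes more useful once something raises and catches it. The following is a sketch under assumptions: `parse_age` is a hypothetical validation helper, and the sample inputs are invented.

```python
class InvalidInputError(Exception):
    """Raised when user-supplied data fails validation."""


def parse_age(text):
    """Convert text to an age, raising InvalidInputError on bad input."""
    try:
        age = int(text)
    except ValueError as exc:
        # Chain the original error so the traceback keeps the root cause.
        raise InvalidInputError(f"not a number: {text!r}") from exc
    if age < 0:
        raise InvalidInputError(f"age cannot be negative: {age}")
    return age


for candidate in ["42", "abc", "-5"]:
    try:
        print(parse_age(candidate))
    except InvalidInputError as err:
        print(f"Rejected: {err}")
```

Callers only need to catch one application-level exception type, while `raise ... from` preserves the underlying ValueError for debugging.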

Q 4. What are generators in Python and how do they improve efficiency?

Generators are a special type of iterator in Python that produces values on-demand, rather than generating them all at once and storing them in memory. This makes them incredibly memory-efficient, especially when dealing with large datasets or infinite sequences.

How they improve efficiency: Generators don’t create the entire sequence upfront; they yield values one at a time as needed. This lazy evaluation prevents memory overload. Imagine processing a terabyte-sized log file; a generator would read and process each line individually, freeing the memory used by the previous line after it’s processed.

Creating Generators: Generators are defined using functions with the yield keyword instead of return.

    def my_generator(n):
        for i in range(n):
            yield i**2

Usage:

    for x in my_generator(5):
        print(x)  # Output (one value per line): 0 1 4 9 16

Each time the yield statement is encountered, the generator pauses, returning a value. When the generator is iterated upon again, execution resumes from where it left off. This makes them ideal for situations like streaming large files or processing infinite data streams where loading everything into memory is not feasible.

Real-world Application: Generators are commonly used in tasks involving large data processing, web scraping, and log file analysis where memory optimization is a critical consideration.
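As an illustration of the log-analysis case, the sketch below filters lines lazily. The generator name `error_lines` and the log contents are hypothetical, and `io.StringIO` stands in for a real file so the snippet runs anywhere.

```python
import io


def error_lines(lines):
    """Yield only the lines that contain 'ERROR', one at a time."""
    for line in lines:
        if "ERROR" in line:
            yield line.rstrip("\n")


# io.StringIO stands in for a real (possibly huge) log file opened with open().
fake_log = io.StringIO("INFO start\nERROR disk full\nINFO retry\nERROR timeout\n")

# File objects are themselves lazy iterators, so only one line is held at a time.
for line in error_lines(fake_log):
    print(line)
```

Because nothing is collected into a list, memory use stays roughly constant regardless of how long the input is.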

Q 5. Explain the concept of decorators in Python.

Decorators provide a way to modify or enhance functions and methods in Python without directly changing their source code. They’re a powerful feature that promotes code reusability and readability.

Basic Structure:

    @my_decorator
    def my_function():
        pass  # ... function code ...

my_decorator is a function that takes another function (my_function) as input and returns a modified version of that function. The @ symbol is syntactic sugar that makes the decorator application concise.

Example: Let’s create a decorator that measures the execution time of a function:

    import functools
    import time

    def measure_time(func):
        @functools.wraps(func)  # Preserve the wrapped function's name and docstring
        def wrapper(*args, **kwargs):
            start_time = time.perf_counter()  # Monotonic clock suited to timing
            result = func(*args, **kwargs)
            end_time = time.perf_counter()
            print(f"Execution time: {end_time - start_time} seconds")
            return result
        return wrapper

    @measure_time
    def my_slow_function():
        time.sleep(2)
        return 10

    my_slow_function()

The measure_time decorator wraps my_slow_function, adding timing functionality without modifying my_slow_function’s code directly. This promotes cleaner and more organized code.

Real-world Applications: Decorators are frequently used for tasks such as logging, access control, input validation, caching, and instrumentation (like adding performance monitoring).
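A logging decorator is one of the simplest of these applications. The sketch below is illustrative only: `log_calls` and `add` are hypothetical names, and the output goes to print for brevity where a real script would likely use the logging module.

```python
import functools


def log_calls(func):
    """Print each call's positional arguments and return value."""
    @functools.wraps(func)  # keep func.__name__ and docstring on the wrapper
    def wrapper(*args, **kwargs):
        result = func(*args, **kwargs)
        print(f"{func.__name__}{args} -> {result!r}")
        return result
    return wrapper


@log_calls
def add(a, b):
    """Return the sum of a and b."""
    return a + b


add(2, 3)             # prints: add(2, 3) -> 5
print(add.__name__)   # add (would be 'wrapper' without functools.wraps)
```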

Q 6. How do you handle asynchronous operations in Python?

Handling asynchronous operations in Python is essential for building responsive and efficient applications, especially those involving I/O-bound tasks (like network requests or file operations). Python’s asyncio library is the primary tool for this.

Key Concepts:

- async and await keywords: These define asynchronous functions (coroutines) and pause execution until an awaited operation completes.

- Event Loop: The core of asyncio; it manages the execution of asynchronous tasks concurrently.

- Tasks and Futures: Represent asynchronous operations; tasks are scheduled on the event loop, while futures hold the results.

Example: Let’s make two asynchronous network requests using aiohttp:

    import asyncio
    import aiohttp

    async def fetch_data(session, url):
        async with session.get(url) as response:
            return await response.text()  # text() is a coroutine method, so call and await it

    async def main():
        async with aiohttp.ClientSession() as session:
            task1 = asyncio.create_task(fetch_data(session, "https://example.com"))
            task2 = asyncio.create_task(fetch_data(session, "https://google.com"))
            result1, result2 = await asyncio.gather(task1, task2)
            print("Data from example.com:", result1)
            print("Data from google.com:", result2)

    asyncio.run(main())

This code concurrently fetches data from two websites without blocking. asyncio.gather waits for both tasks and returns their results in the order the tasks were passed in.

Real-world applications: Asynchronous programming is vital for building web servers, network clients, and applications that need to handle multiple concurrent operations without blocking the main thread.
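The benefit of concurrency can be seen without any network access. In this sketch, `fake_fetch` is a hypothetical stand-in for an I/O call that simply sleeps; the delays are arbitrary.

```python
import asyncio
import time


async def fake_fetch(name, delay):
    """Stand-in for a network call: waits `delay` seconds without blocking."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def main():
    start = time.perf_counter()
    # Both coroutines wait at the same time, so the total is about 0.2 s,
    # not 0.4 s.
    results = await asyncio.gather(fake_fetch("a", 0.2), fake_fetch("b", 0.2))
    elapsed = time.perf_counter() - start
    return results, elapsed


results, elapsed = asyncio.run(main())
print(results)
print(f"elapsed: {elapsed:.2f}s")
```

Run sequentially, the two waits would add up; run through asyncio.gather, they overlap, which is the whole point for I/O-bound automation.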

Q 7. What are some common Python libraries for automation?

Python boasts a rich ecosystem of libraries for automation. The choice depends on the specific task, but here are some popular options:

- requests: For making HTTP requests, crucial for web scraping and interacting with APIs.

- selenium: For automating web browsers; indispensable for tasks requiring interaction with dynamic web content.

- Beautiful Soup: For parsing HTML and XML, commonly used in conjunction with requests for web scraping.

- pytest: A powerful testing framework to help ensure your automation scripts are reliable.

- paramiko: For secure SSH connections, enabling remote server management and automation.

- pyautogui: For controlling the mouse and keyboard, suitable for GUI automation tasks.

- schedule: For scheduling tasks to run at specific times or intervals.

- subprocess: For executing shell commands; essential for integrating with other tools or systems.

Many other specialized libraries exist, catering to tasks like file manipulation, image processing, and database interactions. The right choice depends on the specific automation goals.
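Of the libraries listed, subprocess ships with Python, so it makes a convenient runnable illustration. The sketch below launches a child Python process; the command it runs is arbitrary.

```python
import subprocess
import sys

# Run a child Python process; sys.executable avoids guessing the interpreter path.
completed = subprocess.run(
    [sys.executable, "-c", "print('hello from a child process')"],
    capture_output=True,   # collect stdout/stderr instead of inheriting them
    text=True,             # decode bytes to str
    check=True,            # raise CalledProcessError on a non-zero exit code
)

print(completed.stdout.strip())
```

Passing the command as a list (and not as a single shell string) means no shell is involved, which sidesteps quoting problems and command injection.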

Q 8. Explain the use of regular expressions in Python for automation tasks.

Regular expressions, often shortened to regex or regexp, are powerful tools for pattern matching within text. In Python, the re module provides functions for working with regular expressions, making them invaluable for automation tasks involving text processing. Imagine you need to extract email addresses from a large log file – regex can do that efficiently and accurately.

For example, the pattern r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b' will match many common email formats. (Inside a character class, | is a literal character, so [A-Z|a-z] would also accept a pipe; [A-Za-z] is what is intended.) The re.findall() function would then return a list of all matches found in a given string.

Here’s a simple example:

    import re

    # Placeholder addresses for illustration
    text = "My email is alice@example.com and another is bob@example.org"

    emails = re.findall(r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b', text)

    print(emails)  # Output: ['alice@example.com', 'bob@example.org']

Beyond email extraction, regex is crucial for tasks like data cleaning (removing unwanted characters), data validation (ensuring data conforms to specific patterns), and parsing complex text structures (extracting key information from unstructured data such as logs or web pages).
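For the log-parsing and data-cleaning cases, named groups and re.sub are often enough. This is a sketch assuming a hypothetical log format of date, time, level, and message; real logs will need their own pattern.

```python
import re

# Hypothetical log format: "<date> <time> <LEVEL> <message>"
LOG_LINE = re.compile(
    r"(?P<date>\d{4}-\d{2}-\d{2}) (?P<time>\d{2}:\d{2}:\d{2}) "
    r"(?P<level>[A-Z]+) (?P<message>.*)"
)

line = "2024-01-15 09:30:00 ERROR Disk usage above 90%"
match = LOG_LINE.match(line)
if match:
    print(match.group("level"))    # ERROR
    print(match.group("message"))  # Disk usage above 90%

# re.sub for cleaning: collapse runs of whitespace into single spaces.
print(re.sub(r"\s+", " ", "too    many\t spaces"))  # too many spaces
```

Compiling the pattern once and reusing it keeps the intent readable when the same expression is applied to many lines.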

Q 9. Describe your experience with version control systems like Git.

I have extensive experience using Git for version control in both individual and collaborative projects. I’m proficient in all the core Git commands – from basic operations like git add, git commit, and git push to more advanced features like branching, merging, rebasing, and resolving conflicts.

I’ve used Git extensively on projects involving multiple developers, where it’s been instrumental in managing code changes, tracking revisions, and facilitating seamless collaboration. I’m comfortable using platforms like GitHub, GitLab, and Bitbucket, understanding concepts such as pull requests, code reviews, and issue tracking. I often utilize branching strategies like Gitflow to manage feature development, bug fixes, and releases separately.

For example, in a recent project, we utilized Git’s branching capabilities to develop and test new features concurrently without interfering with the main codebase. This resulted in a more stable and robust final product. My experience includes resolving merge conflicts efficiently and effectively, ensuring that everyone’s contributions are integrated smoothly.

Q 10. How do you debug Python scripts effectively?

Debugging Python scripts effectively involves a multi-pronged approach. I begin by using the built-in print() statements strategically to track variable values and program flow. This is often the quickest way to identify simple errors. For more complex issues, I leverage Python’s interactive debugger, pdb (Python Debugger), which allows me to step through the code line by line, inspect variables, and set breakpoints.

pdb allows me to examine the program’s state at specific points, identify the source of errors, and understand why unexpected behavior occurs. I also frequently use IDE features like breakpoints, stepping through code, and variable inspection. These IDE tools provide a visual way to navigate the code and examine its state during execution, which can significantly improve debugging efficiency.

Beyond these tools, I rely on thoughtful code organization, using meaningful variable names, and writing well-documented code. This proactive approach makes debugging significantly easier. Finally, thorough testing, using frameworks like pytest or unittest (discussed later), helps prevent errors before they even arise.

Q 11. How would you approach automating a repetitive task using Python?

Automating a repetitive task in Python typically involves identifying the steps involved, structuring the code logically, and using appropriate libraries. I would first carefully analyze the task to understand exactly what needs to be automated. This includes identifying the input data, the required transformations, and the desired output.

Once the process is well-defined, I would use Python’s extensive libraries to perform the necessary tasks. For example, if the task involves file manipulation, I might use the os and shutil modules. If it requires interacting with a web service, I might use libraries like requests or BeautifulSoup. For data processing, pandas or NumPy are frequently used.

Let’s say the task is to rename a set of files according to a specific pattern. I would write a script using os.listdir() to get the file list, os.rename() to perform the renaming, potentially incorporating string formatting or regular expressions for the renaming pattern. The resulting script would execute efficiently, performing the repetitive task consistently and without manual intervention.
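The renaming task can be sketched as follows. This version uses pathlib in place of os.listdir() and os.rename(), which is a stylistic choice and not the only option; the function name `add_prefix`, the prefix, and the file names are all hypothetical.

```python
import tempfile
from pathlib import Path


def add_prefix(directory, prefix):
    """Rename every .txt file in `directory` to start with `prefix`."""
    renamed = []
    # sorted() fixes the order and snapshots the listing before we rename.
    for path in sorted(Path(directory).glob("*.txt")):
        if path.name.startswith(prefix):
            continue  # already renamed; keeps the script safe to re-run
        target = path.with_name(prefix + path.name)
        path.rename(target)
        renamed.append(target.name)
    return renamed


# Demonstrate in a throwaway directory so no real files are touched.
with tempfile.TemporaryDirectory() as tmp:
    for name in ["a.txt", "b.txt", "notes.md"]:
        (Path(tmp) / name).touch()
    print(add_prefix(tmp, "2024_"))  # ['2024_a.txt', '2024_b.txt']
```

Skipping files that already carry the prefix makes the script idempotent, which matters for anything that may be run on a schedule.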

Q 12. Explain your experience with testing automation frameworks (e.g., pytest, unittest).

I have significant experience with Python’s testing frameworks, primarily pytest and unittest. unittest is Python’s built-in framework, offering a classic approach to testing with methods for setting up tests, running assertions, and organizing test cases into suites. It’s straightforward and well-integrated with Python.

pytest, on the other hand, is a more modern and feature-rich framework. I prefer pytest for its simplicity, flexibility, and extensive plugin ecosystem. Its concise syntax makes tests easier to write and read. Built-in features such as parametrization and fixtures, together with plugins for needs like mocking, significantly enhance testing capabilities. I often use pytest for unit tests, integration tests, and end-to-end tests, ensuring comprehensive test coverage.

In a recent project, using pytest’s parametrized tests allowed me to efficiently test a function with a wide range of inputs, covering various edge cases and boundary conditions that would have been tedious to manually test. The detailed reports generated by pytest significantly streamlined the debugging process and improved code quality.
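The idea of running one test body over many inputs can be shown with the standard library alone. The sketch below uses unittest's subTest so it runs without extra installs; in pytest the same table of cases would typically be fed through the pytest.mark.parametrize decorator. The function `slugify` is a hypothetical example target.

```python
import unittest


def slugify(title):
    """Lower-case a title and join its words with hyphens."""
    return "-".join(title.lower().split())


class SlugifyTests(unittest.TestCase):
    def test_many_inputs(self):
        cases = [
            ("Hello World", "hello-world"),
            ("  padded  ", "padded"),
            ("", ""),
            ("MiXeD Case Title", "mixed-case-title"),
        ]
        for title, expected in cases:
            # subTest reports each failing case separately.
            with self.subTest(title=title):
                self.assertEqual(slugify(title), expected)


# Run the suite programmatically so the snippet also works outside a test runner.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifyTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Keeping the cases in a plain list makes it cheap to add a new edge case whenever a bug is found.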

Q 13. Describe your experience with CI/CD pipelines.

My experience with CI/CD pipelines involves using tools like Jenkins, GitLab CI, and GitHub Actions to automate the build, testing, and deployment processes. I understand the importance of continuous integration for maintaining a stable codebase and continuous delivery/deployment for rapidly releasing updates and features.

I have built and configured CI/CD pipelines for various projects, automating the process of building software from source code, running automated tests, and deploying the software to different environments (development, staging, production). I am familiar with concepts such as versioning, environment configuration, and rollback strategies.

In one project, implementing a CI/CD pipeline with automated testing reduced deployment time by over 75%, improved code quality through early error detection, and made the release process significantly more reliable. It’s all about automating the process to speed up deployments and reduce human error.

Q 14. How do you manage dependencies in your Python projects?

Managing dependencies in Python projects is crucial for ensuring reproducibility and avoiding conflicts. I primarily use pip, the package installer for Python, to manage dependencies. I meticulously specify dependencies in a requirements.txt file, which lists all the packages required by the project, including specific version numbers. This is best practice as it ensures that everyone working on the project (or even a new deployment) uses the exact same versions.

The requirements.txt file makes it easy to create consistent environments on different machines. Using pip freeze > requirements.txt will list all installed packages and their versions. Then, running pip install -r requirements.txt in a new environment will install the needed packages and versions. I strongly recommend creating virtual environments using venv or conda for isolating project dependencies, preventing conflicts between different projects.

For more complex dependency management, particularly in larger projects, I have used tools like poetry and conda which provide better control over dependency resolution and management than just using pip and requirements.txt. These offer features such as dependency locking, which ensures consistent reproducibility even as packages are updated.

Q 15. What are your preferred methods for deploying automated scripts?

Deploying automated scripts effectively involves choosing the right method based on factors like script complexity, environment, and security requirements. My preferred methods generally fall into these categories:

- Version Control (Git): This is foundational. I use Git (GitHub, GitLab, Bitbucket) to track changes, collaborate, and manage different versions of my scripts. This ensures reproducibility and allows for easy rollback if issues arise.

- Continuous Integration/Continuous Deployment (CI/CD): For larger projects or those requiring frequent updates, CI/CD pipelines (e.g., using Jenkins, GitLab CI, GitHub Actions, Azure DevOps) are crucial. These automate the build, testing, and deployment processes, ensuring consistent and reliable releases. A CI/CD pipeline might involve automated testing, code linting, and deployment to a staging environment before production.

- Containerization (Docker): Packaging scripts and their dependencies within Docker containers ensures consistent execution across different environments. This eliminates the ‘it works on my machine’ problem. Containers are easily deployed to various platforms, including cloud environments.

- Configuration Management Tools (Ansible, Puppet, Chef): These tools are excellent for managing and automating infrastructure and deployments, especially in larger-scale projects. They allow for declarative configuration, making it easier to manage and track changes across multiple servers.

- Scheduling (cron, Task Scheduler): For scripts that need to run regularly, I use system schedulers like cron (Linux/macOS) or the Task Scheduler (Windows) to automate execution at specified intervals.

The choice of deployment method often depends on the project’s scale and complexity. For a small, one-off script, a simple scheduler might suffice. However, for a large, complex project, a robust CI/CD pipeline with containerization and configuration management is essential.

Q 16. Explain your understanding of different design patterns in Python.

Design patterns provide reusable solutions to common problems in software development. In Python, several patterns are particularly useful for automation:

- Singleton: Ensures only one instance of a class exists. Useful for managing resources like database connections or logging objects.

        class Singleton:
            _instance = None

            def __new__(cls):
                if cls._instance is None:
                    cls._instance = super(Singleton, cls).__new__(cls)
                return cls._instance

- Factory: Creates objects without specifying their concrete classes. Useful when you have multiple types of objects that can be created based on configuration or input. For example, creating different types of report generators based on a user’s selection.

- Observer (Publisher-Subscriber): Allows objects to subscribe to events from other objects. Useful for event-driven automation, where actions are triggered by specific events, such as file changes or database updates.

- Template Method: Defines the skeleton of an algorithm in a base class, allowing subclasses to override specific steps. This is helpful for creating flexible automation workflows where some steps might vary based on specific tasks.

- Strategy: Defines a family of algorithms, encapsulates each one, and makes them interchangeable. Useful when you have multiple ways to achieve the same goal and want to switch between them easily. For example, different methods for data extraction from various sources.

Choosing the right design pattern improves code readability, maintainability, and extensibility, making it easier to adapt automation scripts to changing requirements.
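In Python, the Strategy and Factory ideas often reduce to a dictionary of functions. The sketch below is one lightweight way to express them, not a canonical implementation; the exporter names, the `EXPORTERS` registry, and the sample row are hypothetical.

```python
import csv
import io
import json


def to_json(rows):
    """Render rows (a list of dicts) as a JSON string."""
    return json.dumps(rows)


def to_csv(rows):
    """Render rows (a list of dicts) as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# The registry maps a format name to the function (strategy) that handles it.
EXPORTERS = {"json": to_json, "csv": to_csv}


def export(rows, fmt):
    """Factory-style lookup: pick the exporter by name and apply it."""
    try:
        exporter = EXPORTERS[fmt]
    except KeyError:
        raise ValueError(f"unsupported format: {fmt!r}") from None
    return exporter(rows)


rows = [{"host": "web1", "status": "ok"}]
print(export(rows, "json"))  # [{"host": "web1", "status": "ok"}]
print(export(rows, "csv"))
```

Adding a new output format then means writing one function and registering it, without touching the callers.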

Q 17. How do you ensure the scalability and maintainability of your automation scripts?

Scalability and maintainability are critical for long-term success in automation. Here’s how I ensure both:

- Modular Design: Break down complex scripts into smaller, reusable modules. This enhances readability, testability, and allows for easier modifications or updates to specific parts of the automation without affecting others.

- Code Documentation: Thorough documentation is vital. I use docstrings within the code and create separate documentation (e.g., using Sphinx) to explain the purpose, usage, and dependencies of each module and the overall system.

- Version Control (Git): Tracking changes using Git is essential for managing updates, reverting to previous versions if needed, and collaborating with others.

- Consistent Coding Style: Adhering to a consistent style guide (e.g., PEP 8 for Python) makes the code easier to read and understand, simplifying maintenance and collaboration.

- Automated Testing: Writing unit tests, integration tests, and end-to-end tests is critical. This helps identify errors early, improves code quality, and allows for regression testing when making changes.

- Configuration Files: Separating configuration settings (database credentials, API keys, file paths) from the core script makes it easier to adapt the script to different environments without modifying the code.

By following these practices, I create automation scripts that are not only efficient but also easy to understand, maintain, and scale as needed.

Q 18. How would you handle errors and exceptions in a production environment?

Robust error handling is crucial in production environments. My approach involves:

- Try-Except Blocks: Wrapping potentially problematic code within try-except blocks is fundamental. This allows you to catch specific exceptions and handle them gracefully, preventing the script from crashing unexpectedly.

- Specific Exception Handling: Instead of a generic except Exception:, I catch specific exceptions (FileNotFoundError, TypeError, HTTPError, etc.) to provide targeted responses and avoid masking underlying issues.

- Logging: Detailed logging (using Python’s logging module or a more sophisticated logging framework) is crucial. Logs record errors, warnings, and other important events, facilitating debugging and troubleshooting in a production environment. I typically log the error message, traceback, timestamp, and relevant context.

- Alerting: For critical errors, I set up alerts (e.g., via email, SMS, or monitoring tools) to notify relevant personnel immediately. This ensures that problems are addressed promptly.

- Retry Mechanisms: For transient errors (e.g., network issues), I implement retry logic with exponential backoff. This gives the system time to recover before attempting the operation again.

Example:

    import logging

    try:
        # Code that might raise an exception
        ...
    except FileNotFoundError as e:
        logging.error(f"File not found: {e}")
        # Handle the specific error
        ...
    except Exception as e:
        logging.critical(f"An unexpected error occurred: {e}", exc_info=True)
        # Log critical error with traceback
        ...
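The retry-with-exponential-backoff idea from the list above can be sketched like this. The helper `retry`, its parameters, and the `flaky` operation are hypothetical, and the delays are kept tiny so the example finishes quickly; production values would be larger and often include random jitter.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("automation")


def retry(operation, attempts=3, base_delay=0.01, retry_on=(ConnectionError,)):
    """Call operation(); on a retryable error wait base_delay * 2**n and retry."""
    for attempt in range(attempts):
        try:
            return operation()
        except retry_on as exc:
            if attempt == attempts - 1:
                logger.error("giving up after %d attempts", attempts, exc_info=True)
                raise
            delay = base_delay * 2 ** attempt
            logger.warning("attempt %d failed (%s); retrying in %.2fs",
                           attempt + 1, exc, delay)
            time.sleep(delay)


calls = {"count": 0}


def flaky():
    """Hypothetical operation that fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network issue")
    return "ok"


print(retry(flaky))  # ok
```

Only the exception types listed in `retry_on` are retried; anything else propagates immediately, so genuine bugs are not hidden behind repeated attempts.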

Q 19. How do you monitor and log the performance of your automation scripts?

Monitoring and logging script performance is critical for identifying bottlenecks and ensuring reliability. I typically employ these strategies:

- Performance Monitoring Tools: Tools like Prometheus, Grafana, Datadog, or even simple custom scripts can collect metrics such as execution time, resource usage (CPU, memory, network), and error rates. These metrics are essential for tracking script health and identifying performance regressions over time.

- Logging: As mentioned earlier, comprehensive logging is key. Include timestamps, execution durations, and resource usage in your logs. This information is crucial for post-mortem analysis of performance issues.

- Profiling: For performance optimization, I use profiling tools (like cProfile in Python) to identify performance bottlenecks within the script. Profiling helps pinpoint specific code sections that are consuming excessive resources.

- Metrics Dashboard: A centralized dashboard (often integrated with monitoring tools) provides a clear overview of key performance indicators. This allows for quick identification of any performance degradation or unusual patterns.

- Custom Metrics: I often include custom metrics specific to the task at hand. For example, if the script is processing data, metrics might include records processed per second, number of errors encountered, or average processing time per record.

By continuously monitoring and logging performance, I can proactively identify and address issues, maintaining the efficiency and reliability of my automation scripts.
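Recording execution durations does not require a monitoring stack to get started. The sketch below uses a small context manager; the name `timed` and the `durations` dictionary are hypothetical, with the dictionary standing in for whatever metrics backend is actually in use.

```python
import contextlib
import logging
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("metrics")

durations = {}  # step name -> seconds, a stand-in for a real metrics backend


@contextlib.contextmanager
def timed(step):
    """Record and log how long the enclosed block takes."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # The finally clause records the duration even if the block raises.
        durations[step] = time.perf_counter() - start
        logger.info("%s took %.4fs", step, durations[step])


with timed("sum_squares"):
    total = sum(i * i for i in range(100_000))

print(total)
```

For finding which function inside a slow step is responsible, cProfile gives a per-function breakdown that a simple timer cannot.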

Q 20. Describe your experience with cloud platforms (e.g., AWS, Azure, GCP) and their automation tools.

I have extensive experience with major cloud platforms like AWS, Azure, and GCP, leveraging their automation tools for various projects. My experience includes:

- AWS: I’ve used AWS services like EC2, Lambda, S3, and CloudWatch extensively. For automation, I utilize tools like AWS CLI, Boto3 (Python SDK), and CloudFormation for infrastructure-as-code.

- Azure: I’ve worked with Azure virtual machines, Azure Functions, Azure Blob Storage, and Azure Monitor. Azure CLI, Azure SDK for Python, and Azure Resource Manager (ARM) templates are my primary tools for automation.

- GCP: My experience with GCP includes Compute Engine, Cloud Functions, Cloud Storage, and Cloud Monitoring. I use the Google Cloud CLI, the Google Cloud Python client library, and Deployment Manager for automation tasks.

In cloud environments, I leverage serverless computing (Lambda, Azure Functions, Cloud Functions) for event-driven automation, reducing infrastructure management overhead. I also use infrastructure-as-code (IaC) tools to automate the provisioning and management of cloud resources, ensuring consistency and reproducibility. This minimizes manual configuration and allows for easy scalability.

Q 21. How do you secure your automation scripts and prevent unauthorized access?

Securing automation scripts and preventing unauthorized access is paramount. My approach involves several layers of security:

- Least Privilege Principle: Scripts should only have the minimum necessary permissions to perform their tasks. This limits the damage that could be caused by a compromised script.

- Secure Storage of Credentials: Never hardcode sensitive information (API keys, database passwords, etc.) directly into scripts. Instead, use secure methods like environment variables, dedicated secret management services (AWS Secrets Manager, Azure Key Vault, GCP Secret Manager), or configuration files stored securely outside the code repository.

- Input Validation: Always validate user inputs and data from external sources to prevent injection attacks (e.g., SQL injection, command injection). Sanitize inputs to remove potentially harmful characters.

- Access Control: Restrict access to the scripts and their associated resources (e.g., databases, servers) using appropriate permissions and access control lists (ACLs).

- Regular Security Audits: Conduct regular security reviews and penetration testing to identify potential vulnerabilities and ensure the security of the automation scripts and related infrastructure.

- Code Reviews: Code reviews by other developers help identify potential security issues before they reach production.

- Encryption: Encrypt sensitive data both in transit (using HTTPS) and at rest (using encryption at the database or storage level).

By implementing these security measures, I minimize the risk of unauthorized access and data breaches, ensuring the confidentiality, integrity, and availability of the automation systems.
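Two of these measures, keeping credentials out of the code and validating input, can be sketched briefly. The environment variable name `AUTOMATION_API_TOKEN`, both function names, and the hostname rule are assumptions made for the illustration; real validation rules depend on what the input is used for.

```python
import os
import re


def get_api_token(env_var="AUTOMATION_API_TOKEN"):
    """Read a secret from the environment instead of hardcoding it."""
    token = os.environ.get(env_var)
    if not token:
        # Fail fast with a clear message, without printing any secret value.
        raise RuntimeError(f"environment variable {env_var} is not set")
    return token


# Allow-list: letters, digits, dots and hyphens; must start and end alphanumeric.
HOSTNAME = re.compile(r"[A-Za-z0-9]([A-Za-z0-9.-]{0,251}[A-Za-z0-9])?")


def validate_hostname(value):
    """Reject anything that is not a plain hostname before it reaches a command."""
    if not HOSTNAME.fullmatch(value):
        raise ValueError(f"invalid hostname: {value!r}")
    return value


print(validate_hostname("web1.example.com"))
```

An allow-list of known-good characters is generally safer than trying to strip out known-bad ones, because it fails closed on anything unexpected.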

Q 22. What are some common challenges you’ve faced in automation projects and how did you overcome them?

One common challenge in automation is handling unexpected errors and exceptions. Imagine automating a web scraping task – a website’s structure might change unexpectedly, causing your script to fail. To overcome this, I employ robust error handling techniques. This involves using try...except blocks in Python to gracefully catch specific exceptions (e.g., requests.exceptions.RequestException for network issues, ElementClickInterceptedException for Selenium web automation). I also implement logging mechanisms to record errors and their context, facilitating debugging and future improvements. Another challenge is maintaining data consistency across different systems. For example, if I’m automating data transfer between a database and a cloud storage service, inconsistencies might arise due to network issues or data transformation errors. To address this, I use checksum verification or database transactions to ensure data integrity. Furthermore, I always prioritize modular design, breaking down complex tasks into smaller, reusable functions. This makes testing, debugging, and maintaining the automation scripts significantly easier.
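Checksum verification, mentioned above as a guard for data transfers, might look like the following sketch. The helper `sha256_of` and the file names are hypothetical, and a local copy stands in for a real upload or download.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=65536):
    """Hash a file in chunks so large files never have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "source.bin"
    copy = Path(tmp) / "copy.bin"
    source.write_bytes(b"payload" * 1000)
    copy.write_bytes(source.read_bytes())  # stands in for an upload or transfer

    transfer_ok = sha256_of(source) == sha256_of(copy)
    print(transfer_ok)  # True
```

If the digests differ, the script can retry the transfer or raise an alert before any downstream step consumes corrupted data.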

Q 23. Explain your experience with different types of databases and their interaction with automation scripts.

I have extensive experience with various database systems, including relational databases like MySQL and PostgreSQL, and NoSQL databases like MongoDB and Cassandra. My automation scripts often interact with databases for data extraction, transformation, and loading (ETL) processes. For example, I’ve used Python’s psycopg2 library to connect to PostgreSQL, execute SQL queries, and retrieve data for processing in an automation workflow. With NoSQL databases like MongoDB, I utilize the pymongo library for similar operations, leveraging JSON-like documents for flexibility. The choice of database depends heavily on the project’s requirements. Relational databases excel in structured data management with strong consistency guarantees, while NoSQL databases are better suited for large volumes of unstructured or semi-structured data and offer higher scalability. In my scripts, I carefully handle database connections, ensuring efficient resource management by closing connections promptly and using connection pooling where appropriate to improve performance.
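The connection-handling habits described above can be illustrated with the standard library's sqlite3 module, used here as a stand-in for PostgreSQL so the sketch runs without a server. The table and rows are invented for the example.

```python
import sqlite3
from contextlib import closing

# sqlite3 (stdlib) stands in for PostgreSQL here; psycopg2 uses %s placeholders
# instead of ?, but the pattern is the same.
with closing(sqlite3.connect(":memory:")) as conn:
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute("CREATE TABLE jobs (name TEXT, status TEXT)")
        conn.executemany(
            "INSERT INTO jobs (name, status) VALUES (?, ?)",
            [("backup", "ok"), ("report", "failed"), ("cleanup", "ok")],
        )

    # Parameterised query: the driver handles quoting, which avoids SQL injection.
    wanted = "ok"
    rows = conn.execute(
        "SELECT name FROM jobs WHERE status = ? ORDER BY name", (wanted,)
    ).fetchall()

print(rows)  # [('backup',), ('cleanup',)]
```

The outer `closing(...)` guarantees the connection is released, while the inner `with conn:` handles the transaction; the two do different jobs and are both worth keeping.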

Q 24. Describe your experience with working with APIs and integrating them into automation workflows.

Working with APIs is a core part of my automation expertise. I’ve integrated numerous APIs into my workflows, leveraging them for various tasks such as data retrieval, file uploads, user authentication, and triggering actions on external systems. For instance, I’ve used the requests library in Python to interact with RESTful APIs, making HTTP requests (GET, POST, PUT, DELETE) and parsing JSON or XML responses. Authentication often involves OAuth 2.0 or API keys, which I load from environment variables or a secrets manager rather than hardcoding them in scripts. When dealing with rate limits or transient failures, I implement retry mechanisms with exponential backoff to ensure script robustness. One specific example involved automating the deployment of software to a cloud platform using their API. My script handled authentication, deployment configuration upload, and deployment status monitoring, all through API interactions. This greatly streamlined the deployment process, reducing manual effort and improving consistency.
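To make the retry and exponential backoff idea concrete, here is a small sketch built on the standard library only. The function `retry_with_backoff`, its parameters, and the simulated `flaky_request` are all assumptions for illustration; in a real script the callable would wrap an actual HTTP call and `retry_on` would list that client's transient exceptions.

```python
import random
import time


def retry_with_backoff(func, retries=4, base_delay=0.5, max_delay=8.0,
                       retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call func(); on a retryable error wait base_delay * 2**attempt and retry."""
    for attempt in range(retries + 1):
        try:
            return func()
        except retry_on:
            if attempt == retries:
                raise  # out of attempts: surface the last error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Add up to 10% jitter so many clients do not retry in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))


calls = {"count": 0}


def flaky_request():
    """Hypothetical API call that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network failure")
    return {"status": "ok"}


waits = []
# Record the delays instead of actually sleeping, so the demo runs instantly.
result = retry_with_backoff(flaky_request, sleep=waits.append)
```

Passing `sleep` as a parameter is a design choice that keeps the function easy to test; production code would simply use the default `time.sleep`.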

Q 25. How do you ensure your automation scripts are efficient and optimized for performance?

Efficiency and optimization are paramount in automation. I prioritize several key strategies. First, I profile my scripts using tools like cProfile to identify performance bottlenecks. This often reveals inefficient algorithms or I/O operations. Then, I optimize code by using efficient data structures (like NumPy arrays for numerical computation) and algorithms. I also strive to minimize database queries and network requests, often caching frequently accessed data. Asynchronous programming, using libraries like asyncio, can significantly improve performance when dealing with I/O-bound operations such as network requests. For example, rather than waiting for each API request to complete sequentially, I can concurrently make multiple requests, substantially reducing overall execution time. Finally, regular code reviews and testing are crucial for identifying and eliminating performance issues early in the development cycle.
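The concurrent-request point can be sketched with `asyncio.gather`. The example below simulates network latency with `asyncio.sleep` so it runs anywhere; the `fetch` coroutine and the job names are hypothetical stand-ins for real API calls made through an async HTTP client.

```python
import asyncio
import time


async def fetch(name, delay):
    """Stand-in for an I/O-bound call; asyncio.sleep simulates network latency."""
    await asyncio.sleep(delay)
    return f"{name}: done"


async def fetch_all(jobs):
    # gather() runs the coroutines concurrently and returns results in input order.
    return await asyncio.gather(*(fetch(name, delay) for name, delay in jobs))


jobs = [("users", 0.2), ("orders", 0.2), ("invoices", 0.2)]
start = time.perf_counter()
responses = asyncio.run(fetch_all(jobs))
# Roughly the longest single delay, not the sum of all three.
elapsed = time.perf_counter() - start
```

Because the three waits overlap, total time is governed by the slowest call rather than the sum of all calls. This only helps for I/O-bound work; CPU-bound bottlenecks found with cProfile need a different remedy, such as better algorithms or multiprocessing.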

Q 26. How familiar are you with containerization technologies like Docker and Kubernetes?

I’m proficient with Docker and Kubernetes. Docker allows me to package my automation scripts and their dependencies into containers, ensuring consistent execution across different environments. This is invaluable for reproducibility and simplifies deployment. For example, I can create a Docker image containing my Python script, libraries, and database client, guaranteeing that the automation runs reliably on my local machine, a CI/CD server, or a cloud instance. Kubernetes takes containerization a step further, enabling orchestration and management of multiple containers across a cluster. This is crucial for scaling automation workflows and managing complex deployments. I’ve used Kubernetes to deploy and manage large-scale automation tasks, leveraging its features for automatic scaling, health checks, and rolling updates, ensuring high availability and resilience.

Q 27. What is your experience with Infrastructure as Code (IaC) tools like Terraform or Ansible?

I have significant experience with Infrastructure as Code (IaC) tools, primarily Terraform and Ansible. Terraform excels at managing infrastructure provisioning, allowing me to define and manage cloud resources (servers, networks, databases) declaratively through configuration files. This enhances consistency and repeatability in setting up environments for automation. Ansible, on the other hand, is powerful for configuration management and automation of tasks on existing infrastructure. I’ve used Ansible to automate the deployment and configuration of applications and services on servers, managing software installations, user accounts, and system settings. A recent project involved using Terraform to provision a cloud-based infrastructure for a data pipeline and then Ansible to configure the servers, install necessary software, and deploy the pipeline components. This combined approach enabled highly automated and reliable infrastructure management.

Q 28. Describe a complex automation project you have worked on and highlight your contributions.

A complex project I worked on involved automating the entire lifecycle of machine learning models. This encompassed data ingestion from various sources (databases, APIs, cloud storage), data cleaning and transformation using Spark, model training using TensorFlow, model evaluation and selection, and finally, deployment to a production environment using Docker and Kubernetes. My contributions included designing the overall automation pipeline, implementing the data processing and model training stages using Python and Spark, and developing the deployment infrastructure using Docker and Kubernetes. The automation reduced the model deployment time from several days to a few hours, significantly accelerating the iteration cycle and enabling faster feedback. The project also incorporated robust monitoring and logging, providing insights into the pipeline’s performance and helping identify potential issues promptly. This project showcased my ability to combine my skills in scripting, data processing, machine learning, and cloud technologies to deliver a highly automated and efficient solution.

Key Topics to Learn for Proficient in using Python or other programming languages for scripting and automation Interview

- Fundamental Programming Concepts: Master data types, control structures (loops, conditional statements), functions, and object-oriented programming principles. Understanding these core concepts is crucial for writing efficient and maintainable scripts.

- Python Libraries for Automation: Become familiar with libraries like `os`, `shutil`, `subprocess`, `requests`, and `BeautifulSoup` (or equivalents in your chosen language). Practice using these libraries to automate common tasks.

- File I/O and Data Manipulation: Learn how to read, write, and process various file formats (CSV, JSON, XML). Practice manipulating data using appropriate libraries and techniques.

- Regular Expressions (Regex): Mastering regex is invaluable for pattern matching and text processing within scripts. Practice constructing and using regular expressions to extract information from text data.

- Error Handling and Debugging: Learn effective strategies for identifying, diagnosing, and resolving errors in your scripts. This is a critical skill for any programmer.

- Version Control (Git): Demonstrate familiarity with Git for managing code changes and collaborating on projects. This is a highly sought-after skill in the industry.

- Testing and Best Practices: Understand the importance of writing clean, well-documented, and testable code. Familiarize yourself with testing frameworks and methodologies.

- Practical Application and Projects: Develop personal projects that showcase your automation skills. This could involve automating repetitive tasks, web scraping, or data processing.
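As a small practice exercise that touches several of the topics above (file I/O, data manipulation, and regular expressions), the following sketch reads CSV text, extracts fields with regex, and writes JSON. The sample log data is invented for the example, and the email and IPv4 patterns are intentionally simple rather than fully standards-compliant.

```python
import csv
import io
import json
import re

# Hypothetical log export; a real script would read it with open("export.csv").
raw_csv = """timestamp,message
2024-05-01T10:00:00,User alice@example.com logged in from 10.0.0.5
2024-05-01T10:05:00,Backup finished with no errors
2024-05-01T10:07:00,User bob@example.com logged in from 10.0.0.9
"""

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")

logins = []
for row in csv.DictReader(io.StringIO(raw_csv)):
    email = EMAIL.search(row["message"])
    ip = IPV4.search(row["message"])
    if email and ip:  # keep only rows where both patterns match
        logins.append({"time": row["timestamp"], "user": email.group(), "ip": ip.group()})

# Serialize the extracted records as JSON for a downstream step.
report = json.dumps(logins, indent=2)
```

Compiling the patterns once, outside the loop, keeps the per-row work small and makes the patterns easy to unit test on their own.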

Next Steps

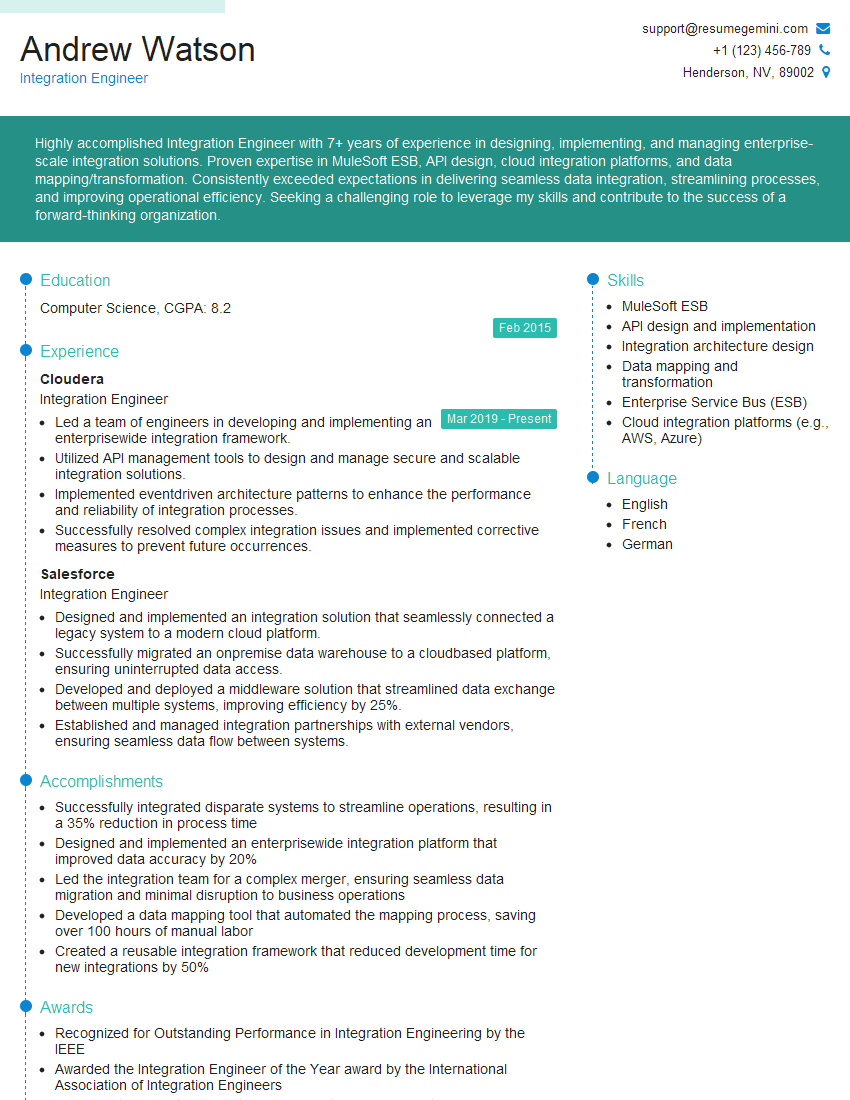

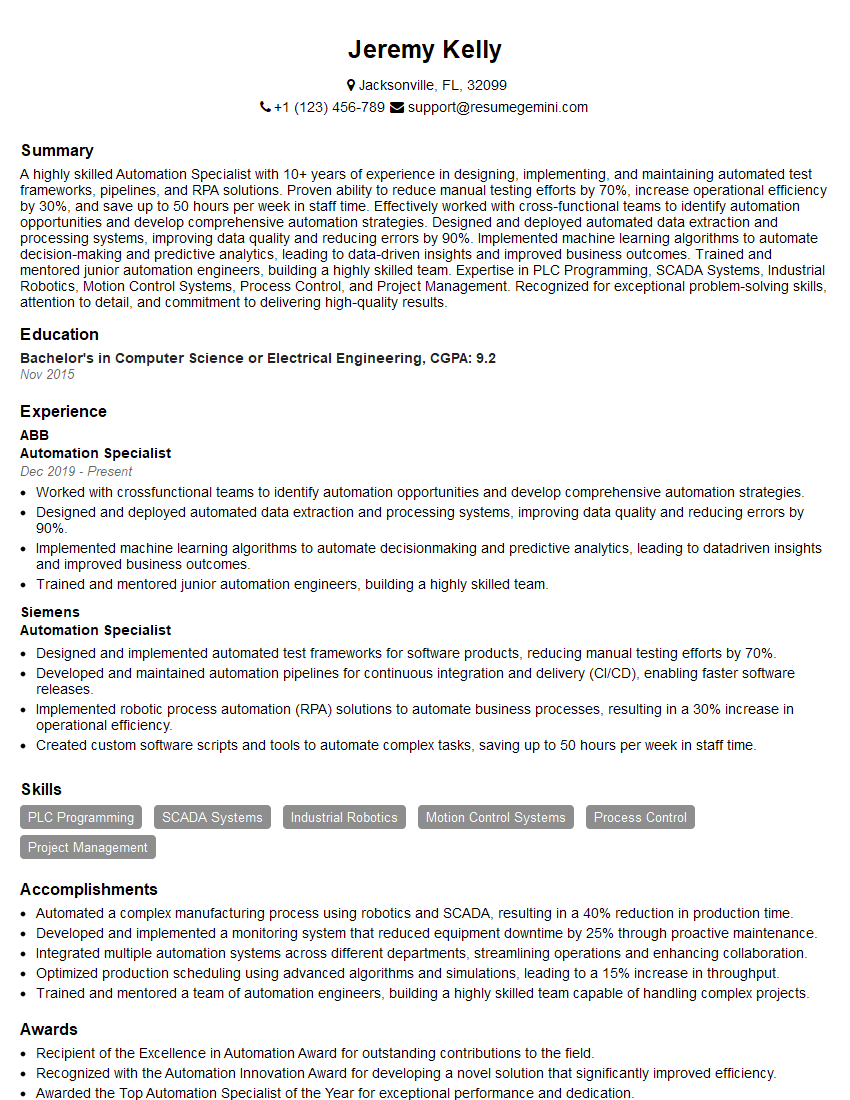

Mastering scripting and automation skills significantly enhances your career prospects, opening doors to roles with greater responsibility and higher earning potential. These skills are highly valued across various industries. To maximize your job search success, it’s vital to create a resume that effectively highlights your abilities. An ATS-friendly resume is crucial for getting past applicant tracking systems and landing interviews. We highly recommend using ResumeGemini to build a professional and impactful resume that showcases your expertise in Python or your chosen language for scripting and automation. ResumeGemini provides examples of resumes tailored to this specific skillset, helping you craft a compelling application that gets noticed.
