Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Distributed Systems Design interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Distributed Systems Design Interview

Q 1. Explain the CAP theorem and its implications for distributed system design.

The CAP theorem, short for Consistency, Availability, and Partition tolerance, describes a fundamental limit of distributed data stores. It is often summarized as choosing two of these three properties. More precisely, it states that when a network partition occurs, a system must give up either consistency or availability:

- Consistency: Every read receives the most recent write or an error, so all nodes appear to hold the same data at the same time (formally, linearizability). Imagine a bank account balance; everyone should see the same amount.

- Availability: Every request received by a non-failing node gets a non-error response, without a guarantee that the response reflects the most recent write. Think of a website that’s always up, even if it might temporarily show stale information.

- Partition tolerance: The system continues to operate even when network partitions occur (nodes can’t communicate). This is crucial in a geographically dispersed environment where network disruptions are common.

The implication for distributed system design is that network partitions cannot be ruled out in practice, so the real decision is how the system behaves during one. CP systems, such as many databases and coordination services built on consensus protocols, preserve consistency by rejecting or delaying requests during a partition, so they may become temporarily unavailable. A highly available system like a social media platform prioritizes Availability and Partition tolerance (AP), sacrificing strong consistency in favor of eventual consistency; a small, temporary data inconsistency is acceptable to keep the system running. A “CA” system is only realistic where partitions cannot happen, such as a single-node database.
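To make the CP/AP choice concrete, here is a toy sketch, not a real database: three in-memory replicas, a partition that leaves a client able to reach only one of them, and a `write` helper whose `mode` flag (a hypothetical parameter) decides whether to reject the write (CP) or accept it locally (AP).

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """A toy replica holding a key-value map (illustration only)."""
    name: str
    data: dict = field(default_factory=dict)


def write(replicas, reachable, key, value, mode):
    """Write to the replicas this client can currently reach.

    mode="CP": reject unless a majority of all replicas is reachable (stay consistent).
    mode="AP": write to whatever is reachable (stay available, replicas may diverge).
    """
    if mode == "CP" and len(reachable) <= len(replicas) // 2:
        raise RuntimeError("no majority reachable: rejecting write")
    for replica in reachable:
        replica.data[key] = value
    return "ok"


a, b, c = Replica("a"), Replica("b"), Replica("c")
cluster = [a, b, c]

# A partition isolates replica c; a client on c's side can only reach c.
try:
    write(cluster, [c], "balance", 100, mode="CP")
except RuntimeError as err:
    print("CP:", err)  # CP: no majority reachable: rejecting write

write(cluster, [c], "balance", 100, mode="AP")
print(a.data, c.data)  # {} {'balance': 100}: the replicas have diverged
```

The CP branch gives up availability on the minority side of the partition; the AP branch gives up consistency until some reconciliation step (not modeled here) repairs the divergence.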

Q 2. Describe different consistency models (e.g., strong, eventual) and their trade-offs.

Consistency models define how data is synchronized across multiple nodes in a distributed system. Different models offer trade-offs between consistency and availability.

- Strong Consistency: All nodes see the same data at the same time. Every read operation returns the most recent write. This is the simplest model to reason about but the most demanding to implement, often leading to performance bottlenecks. Think of a bank transaction; you need strong consistency.

- Sequential Consistency: All nodes observe operations in the same single order, and each node’s own operations appear in the order it issued them. It is weaker than linearizability because that order does not have to match real time.

- Linearizability: A stricter form of strong consistency guaranteeing that operations appear atomic and ordered in real-time.

- Eventual Consistency: Data eventually becomes consistent, but there might be temporary inconsistencies. It’s very common in systems that prioritize availability over immediate consistency. Examples include email systems or cloud storage where you might not see a change immediately.

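- Causal Consistency: If operation A causally precedes operation B (A’s result influenced B), then every node sees A’s effects before B’s. Operations with no causal relationship may be seen in different orders on different nodes. This model balances consistency and availability.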

The choice of consistency model depends on the application’s requirements. A financial system needs strong consistency, while a social media feed can tolerate eventual consistency.
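Causal relationships are commonly tracked with vector clocks. The sketch below assumes each clock is a plain `dict` mapping a node name to a counter; `tick`, `merge`, and `compare` are hypothetical helper names, and a real causally consistent store would also buffer an update until the updates it depends on have arrived.

```python
def tick(clock, node):
    """Return a copy of `clock` with `node`'s counter incremented (local event or send)."""
    new = dict(clock)
    new[node] = new.get(node, 0) + 1
    return new


def merge(local, received, node):
    """On receive: take the component-wise maximum, then tick the receiving node."""
    nodes = local.keys() | received.keys()
    merged = {n: max(local.get(n, 0), received.get(n, 0)) for n in nodes}
    return tick(merged, node)


def compare(a, b):
    """Classify two vector clocks as 'equal', 'before', 'after', or 'concurrent'."""
    nodes = a.keys() | b.keys()
    le = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    ge = all(a.get(n, 0) >= b.get(n, 0) for n in nodes)
    if le and ge:
        return "equal"
    if le:
        return "before"
    if ge:
        return "after"
    return "concurrent"


post = tick({}, "alice")          # Alice writes a post
reply = merge({}, post, "bob")    # Bob reads the post, then replies
other = tick({}, "carol")         # Carol posts independently

print(compare(post, reply))   # before: every node must show the post before the reply
print(compare(reply, other))  # concurrent: nodes may show these in either order
```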

Q 3. How would you design a distributed system for high availability?

Designing a highly available distributed system involves redundancy and fault tolerance at multiple layers.

- Replication: Duplicate data across multiple servers. If one server fails, others can take over. This requires careful consideration of consistency models.

- Load Balancing: Distribute requests across multiple servers to prevent overload on any single server. Techniques include round-robin, least-connections, or more advanced algorithms.

- Redundant Network Infrastructure: Use multiple network paths to prevent single points of failure. This ensures that communication remains possible even if one network segment fails.

- Automated Failover: Implement mechanisms that automatically switch to backup servers when a primary server fails. This minimizes downtime.

- Health Checks and Monitoring: Continuously monitor the health of servers and infrastructure. This allows for proactive intervention and faster recovery from failures.

- Geographic Distribution: Distribute servers across multiple data centers in different geographical locations. This protects against regional outages.

For example, a highly available web application might use a load balancer to distribute traffic across multiple web servers, with each server replicating its data to a separate database cluster. A robust monitoring system would detect failing servers, triggering automatic failover to backup instances.
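Load balancing and automated failover can be sketched together in a few lines. This assumes a separate health checker (not shown) calls `mark()` after each probe; the class and backend names are hypothetical. Round-robin simply skips backends marked unhealthy, so traffic fails over without any client change.

```python
import itertools


class RoundRobinBalancer:
    """Round-robin over backends, skipping any currently marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._cycle = itertools.cycle(self.backends)

    def mark(self, backend, healthy):
        """Record the result of a health check for one backend."""
        if healthy:
            self.healthy.add(backend)
        else:
            self.healthy.discard(backend)

    def pick(self):
        # Try each backend at most once per call before giving up.
        for _ in range(len(self.backends)):
            backend = next(self._cycle)
            if backend in self.healthy:
                return backend
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["web-1", "web-2", "web-3"])
print([lb.pick() for _ in range(3)])  # ['web-1', 'web-2', 'web-3']
lb.mark("web-2", healthy=False)       # health check failed: route around web-2
print([lb.pick() for _ in range(4)])  # ['web-1', 'web-3', 'web-1', 'web-3']
```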

Q 4. Explain different strategies for handling distributed transactions (e.g., two-phase commit, Paxos).

Distributed transactions ensure atomicity (all-or-nothing) across multiple nodes. Several strategies exist:

- Two-Phase Commit (2PC): A classic algorithm in which a coordinator runs the transaction across multiple participants in two phases: a prepare (voting) phase and a commit/abort phase. It is simple, but it adds round trips and is blocking: if the coordinator fails after participants have voted yes, they must hold their locks until it recovers.

- Three-Phase Commit (3PC): Adds a pre-commit phase so participants are not blocked by a coordinator failure, but it relies on bounded network delays and can reach inconsistent outcomes during network partitions.

- Paxos: A consensus algorithm that enables nodes to agree on a single value despite failures and network partitions. It’s used for fault-tolerant distributed state machines, which can be used to implement distributed transactions.

- Raft: A simpler alternative to Paxos, also focused on achieving consensus and ensuring data consistency across a distributed system. It’s easier to understand and implement than Paxos.

- Saga Pattern: This approach decomposes a large transaction into a series of smaller, local transactions. If a local transaction fails, compensating transactions are executed to undo the partial effects of previous transactions.

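The choice depends on the system’s requirements and tolerance for failures. 2PC is simpler but less robust to coordinator failure, Paxos/Raft offer higher fault tolerance at the cost of complexity, and sagas avoid long-held distributed locks but give up isolation, since other transactions can observe intermediate states.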
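The two phases of 2PC can be sketched with in-memory participants. This is a simplified illustration under stated assumptions: no durable logs, no timeouts, no real locks, and the `Participant` class and database names are hypothetical.

```python
from enum import Enum


class Vote(Enum):
    YES = "yes"
    NO = "no"


class Participant:
    """In-memory participant; `can_commit` simulates whether its local prepare succeeds."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit
        self.state = "init"

    def prepare(self):
        # Phase 1: a real participant would durably stage the change and hold locks here.
        self.state = "prepared" if self.can_commit else "aborted"
        return Vote.YES if self.can_commit else Vote.NO

    def commit(self):
        self.state = "committed"

    def abort(self):
        self.state = "aborted"


def two_phase_commit(participants):
    # Phase 1 (prepare): collect every vote; a single NO (or a timeout, not modeled) aborts.
    votes = [p.prepare() for p in participants]
    decision = all(v is Vote.YES for v in votes)
    # Phase 2 (commit/abort): a real coordinator logs the decision durably first.
    # If it crashes at this point, prepared participants block until it recovers.
    for p in participants:
        if decision:
            p.commit()
        else:
            p.abort()
    return "committed" if decision else "aborted"


ok = [Participant("orders-db"), Participant("payments-db")]
print(two_phase_commit(ok))  # committed

mixed = [Participant("orders-db"), Participant("payments-db", can_commit=False)]
print(two_phase_commit(mixed), [p.state for p in mixed])  # aborted ['aborted', 'aborted']
```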

Q 5. How do you ensure data consistency in a distributed environment?

Ensuring data consistency in a distributed environment is a complex challenge. Strategies include:

- Data Replication: Replicating data across multiple servers enables fault tolerance and improved read performance, but complicates maintaining consistency.

- Versioning: Assigning version numbers to data enables tracking changes and resolving conflicts. This is common in collaborative editing systems.

- Conflict Resolution Mechanisms: Defining strategies to handle conflicts when multiple updates occur simultaneously. This could involve last-write-wins, timestamp ordering, or more sophisticated merge algorithms.

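- Consistent Hashing: A technique for distributing data across a cluster of servers in a way that minimizes data movement when the cluster size changes. It does not provide consistency by itself, but it gives every node that shares the same membership view the same deterministic answer to which servers own a key.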

- Transactions: Using distributed transactions (like 2PC or saga pattern) to guarantee atomicity and consistency in complex operations.

The best approach is usually a combination of these techniques, tailored to the specific application’s needs and tolerance for inconsistencies.
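Versioning and conflict detection can be combined as optimistic concurrency control: every write states the version it was based on, and stale writes are rejected. This is a single-process sketch with a hypothetical `VersionedStore` class; in a real distributed store the check and the write must happen atomically on the node that owns the key (for example, via a conditional write).

```python
class VersionedStore:
    """In-memory key-value store where every write must name the version it read."""

    def __init__(self):
        self._data = {}  # key -> (version, value)

    def get(self, key):
        return self._data.get(key, (0, None))

    def put_if_version(self, key, value, expected_version):
        """Compare-and-set: succeed only if nobody has written since the caller read."""
        current_version, _ = self.get(key)
        if current_version != expected_version:
            return False  # conflict: the caller must re-read, then retry or merge
        self._data[key] = (current_version + 1, value)
        return True


store = VersionedStore()
version, _ = store.get("doc")                                 # both editors read version 0
print(store.put_if_version("doc", "edit by alice", version))  # True: now version 1
print(store.put_if_version("doc", "edit by bob", version))    # False: bob's version is stale
print(store.get("doc"))                                       # (1, 'edit by alice')
```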

Q 6. Discuss different approaches to distributed consensus.

Distributed consensus aims to achieve agreement among a set of nodes despite failures and network partitions. Several approaches exist:

- Paxos: A family of consensus algorithms known for their fault tolerance. They are complex but very robust.

- Raft: A simpler and easier-to-understand alternative to Paxos, focusing on leader election and log replication.

- Zab (ZooKeeper Atomic Broadcast): A high-performance consensus protocol used in ZooKeeper, a distributed coordination service.

- Viewstamped Replication: Another fault-tolerant consensus algorithm focusing on state machine replication.

Each algorithm has its own strengths and weaknesses. Paxos is highly robust but complex, while Raft is more intuitive but might be less efficient in some scenarios. The choice depends on the specific needs of the distributed system.
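As a taste of Raft’s leader election, here is a simplified sketch of how a node handles a vote request. It follows Raft’s voting rule (at most one vote per term, and only for a candidate whose log is at least as up-to-date), but omits persistence, election timers, and RPC transport; `VoterState` and `handle_request_vote` are hypothetical names. A candidate becomes leader once a majority grants its vote.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VoterState:
    current_term: int = 0
    voted_for: Optional[str] = None
    last_log_term: int = 0   # term of the last entry in this node's log
    last_log_index: int = 0  # index of the last entry in this node's log


def handle_request_vote(state, term, candidate, cand_last_log_term, cand_last_log_index):
    """Simplified Raft vote handler: returns (current_term, vote_granted)."""
    if term < state.current_term:
        return state.current_term, False  # stale candidate from an old term
    if term > state.current_term:
        # A newer term always wins: adopt it (stepping down) and forget the old vote.
        state.current_term, state.voted_for = term, None
    # The candidate's log must be at least as up-to-date: compare last term, then last index.
    up_to_date = (cand_last_log_term, cand_last_log_index) >= (state.last_log_term, state.last_log_index)
    if state.voted_for in (None, candidate) and up_to_date:
        state.voted_for = candidate
        return state.current_term, True
    return state.current_term, False


follower = VoterState(current_term=2, last_log_term=2, last_log_index=5)
print(handle_request_vote(follower, 3, "n1", 2, 5))  # (3, True)
print(handle_request_vote(follower, 3, "n2", 2, 7))  # (3, False): already voted for n1 in term 3
print(handle_request_vote(follower, 4, "n3", 1, 9))  # (4, False): n3's log is behind
```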

Q 7. What are the challenges of building a globally distributed system?

Building globally distributed systems presents unique challenges:

- Network Latency and Partition Tolerance: High latency and network partitions are common issues, requiring careful consideration of consistency models and fault tolerance mechanisms.

- Data Synchronization: Maintaining data consistency across geographically dispersed nodes requires sophisticated techniques and strategies for handling conflicts.

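- Global Clock Synchronization: Physical clocks on different machines drift apart, and protocols such as NTP reduce but cannot eliminate the skew. (Time zones are not the issue, since timestamps are normally stored in UTC.) Ordering events by wall-clock time can therefore lead to inconsistencies, which is why many systems rely on logical clocks instead.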

- Data Sovereignty and Compliance: Storing data in different countries requires compliance with local data privacy and security regulations.

- Language and Cultural Differences: Internationalization and localization considerations become crucial for user interface design and content management.

- Cost and Complexity: Maintaining and operating a globally distributed system is significantly more expensive and complex than a localized one.

Careful planning, design, and robust error handling are vital for building successful globally distributed systems. It often involves choosing the right tools and technologies such as cloud services with built-in global distribution capabilities and fault tolerance.
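The clock-synchronization challenge is often sidestepped with logical clocks. Below is a minimal Lamport clock sketch; the region names are hypothetical. It guarantees that if event A happened before event B, A gets a smaller timestamp. The converse does not hold, which is why vector clocks are used when concurrency must be detected.

```python
class LamportClock:
    """Logical clock: orders causally related events without synchronized physical clocks."""

    def __init__(self):
        self.time = 0

    def tick(self):
        """Local event or message send."""
        self.time += 1
        return self.time

    def receive(self, msg_time):
        """On receive, jump past the sender's timestamp."""
        self.time = max(self.time, msg_time) + 1
        return self.time


tokyo, frankfurt = LamportClock(), LamportClock()
for _ in range(5):
    frankfurt.tick()            # frankfurt handles 5 local events: time 5
sent = tokyo.tick()             # tokyo sends a message at time 1
print(frankfurt.receive(sent))  # 6: the receive is ordered after the send
reply = frankfurt.tick()        # frankfurt replies at time 7
print(tokyo.receive(reply))     # 8: the reply's receipt is ordered after everything it depends on
```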

Q 8. How would you handle failures in a distributed system?

Handling failures in a distributed system is paramount. It’s not a matter of if a failure will occur, but when. Our strategy needs to be proactive, encompassing prevention, detection, and recovery. Think of it like building a bridge: you wouldn’t just hope it stays up; you’d design it with redundancies and safety mechanisms.

- Prevention: This involves robust design choices from the outset. We use technologies like message queues with acknowledgements to ensure data delivery, and we design our services to be stateless or easily replicated.

- Detection: Heartbeat monitoring, health checks, and exception handling are crucial. Imagine a network of sensors; we need to constantly check that each sensor is functioning and reporting correctly. Tools like Nagios or Prometheus can help.

- Recovery: This is where fault tolerance truly shines. Strategies include automatic failover (switching to backup resources), retries (re-attempting failed operations), and circuit breakers (preventing cascading failures). For example, if a database node goes down, we should seamlessly switch to a replica.

A real-world example: Consider an e-commerce website. If the payment gateway fails, the entire site shouldn’t crash. Instead, we might display an error message, queue the transaction, and retry later, ensuring the user experience isn’t severely impacted.
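A circuit breaker around the payment gateway is one way to get that behavior. The following is a minimal, single-threaded sketch: the thresholds are arbitrary, the injectable `clock` exists only so the example can simulate time, and `payment_gateway_down` is a hypothetical stand-in for the real call. After repeated failures it fails fast instead of waiting on a dead dependency, then allows one trial call after a cooldown.

```python
import time


class CircuitBreaker:
    """CLOSED -> OPEN after `failure_threshold` consecutive failures;
    OPEN -> HALF_OPEN after `reset_timeout` seconds; one success closes it again."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None
        self.state = "closed"

    def call(self, fn, *args, **kwargs):
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_timeout:
                raise RuntimeError("circuit open: failing fast")
            self.state = "half_open"  # cooldown elapsed: let one trial call through
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.state == "half_open" or self.failures >= self.failure_threshold:
                self.state, self.opened_at = "open", self.clock()
            raise
        self.failures, self.state = 0, "closed"
        return result


now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])


def payment_gateway_down():
    # Hypothetical downstream call that is currently failing.
    raise ConnectionError("gateway down")


for _ in range(2):
    try:
        breaker.call(payment_gateway_down)
    except ConnectionError:
        pass
print(breaker.state)  # open: further calls fail fast without touching the gateway

now[0] = 11.0  # cooldown elapsed: the next call is a half-open trial
print(breaker.call(lambda: "charged"), breaker.state)  # charged closed
```

While the breaker is open, the caller can queue the transaction for a later retry instead of blocking the user.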

Q 9. Explain the concept of fault tolerance and its importance in distributed systems.

Fault tolerance is the ability of a system to continue operating even when parts of it fail. In distributed systems, this is crucial because the very nature of distribution introduces multiple points of potential failure. Imagine a large airline reservation system; if one server goes down, the entire system shouldn’t collapse. That’s where fault tolerance comes in.

Its importance stems from the need for high availability and reliability. Downtime in a distributed system can be very costly – lost revenue, damaged reputation, and even legal repercussions. Fault tolerance mitigates this risk.

Techniques for achieving fault tolerance include redundancy (multiple instances of components), replication (multiple copies of data), and error detection/correction mechanisms. For instance, using RAID for data storage ensures data survivability even if a disk fails.
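The RAID example can be illustrated with single-parity XOR, the idea behind RAID 4 (dedicated parity disk) and RAID 5 (rotated parity). This sketch assumes equal-sized in-memory blocks standing in for disks; single parity tolerates the loss of exactly one block.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


data = [b"ACCT", b"BAL=", b"0100"]  # three data "disks"
parity = xor_blocks(data)           # stored on a fourth disk

# Disk 1 fails: XOR-ing the surviving data blocks with the parity rebuilds it.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt)  # b'BAL='
```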

Q 10. Describe different strategies for data replication and their trade-offs.

Data replication is the process of making multiple copies of data and storing them in different locations. This improves availability, but introduces complexities. Several strategies exist:

- Master-Slave Replication: One master node handles writes; slaves replicate data. Simple, but the master is a single point of failure. Think of it as having one original document and several photocopies.

- Multi-Master Replication: Multiple nodes can accept writes, requiring conflict resolution strategies. More complex to manage but highly available. Imagine multiple authors collaboratively editing a document.

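- Active-Active Replication: All replicas are active and handle both reads and writes; in practice the term is often used interchangeably with multi-master. It is highly available and scalable, but complex to implement and manage. Think of a multi-region deployment in which every region accepts writes.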

Trade-offs: Master-slave is simpler but less available; multi-master is more available but requires complex conflict resolution; active-active is the most complex but provides the highest availability. The best choice depends on the specific needs and constraints of the system.
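A minimal sketch of master-slave (leader-follower) replication with asynchronous log shipping shows the main trade-off: the leader acknowledges a write before followers have it. `Node`, `Leader`, and the key names are hypothetical; there is no networking, failover, or durability here.

```python
class Node:
    """Applies an ordered log of (key, value) writes to a local map."""

    def __init__(self, name):
        self.name = name
        self.log = []
        self.data = {}

    def append(self, entries):
        for key, value in entries:
            self.log.append((key, value))
            self.data[key] = value


class Leader(Node):
    def __init__(self, name, followers):
        super().__init__(name)
        self.followers = followers

    def write(self, key, value):
        # Apply locally and acknowledge immediately: this is asynchronous replication.
        self.append([(key, value)])

    def replicate(self):
        """Ship each follower the log entries it is missing (would run later, e.g. on a timer)."""
        for follower in self.followers:
            follower.append(self.log[len(follower.log):])


follower = Node("replica-1")
leader = Leader("primary", [follower])
leader.write("stock:42", 7)
print(leader.data.get("stock:42"), follower.data.get("stock:42"))  # 7 None (replication lag)
leader.replicate()
print(follower.data.get("stock:42"))  # 7
```

Reads served by the follower before `replicate()` runs are stale, and if the leader failed at that moment the acknowledged write would be lost; synchronous replication closes that gap at the cost of write latency.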

Q 11. What are the benefits and drawbacks of microservices architecture?

Microservices architecture involves breaking down a large application into smaller, independent services that communicate with each other. Think of it like assembling a LEGO castle – each piece is a microservice, and they connect to create the whole.

- Benefits: Increased agility (faster development cycles), improved scalability (individual services can scale independently), better fault isolation (failure of one service doesn’t bring down the whole system), technology diversity (different services can use different technologies).

- Drawbacks: Increased complexity (managing many services is harder), harder debugging (a single request can span many services, so you need distributed tracing), data consistency (maintaining consistency across services requires careful design), and operational overhead (more services mean more monitoring and management).

For example, a large e-commerce platform might have separate microservices for user accounts, product catalog, shopping cart, and payment processing. Each can be developed, deployed, and scaled independently.

Q 12. How do you design a scalable distributed system?

Designing a scalable distributed system requires careful consideration of several factors. Scalability means the system can handle increasing load without significant performance degradation. It’s not just about adding more servers; it’s about designing for growth from the beginning.

- Horizontal Scaling: Adding more machines to the system. This is generally preferred over vertical scaling (upgrading individual machines).

- Statelessness: Designing services to be stateless (keeping no session or client data in a service instance’s memory) lets any instance handle any request, which simplifies scaling and improves fault tolerance. Sessions and data should be stored externally.

- Load Balancing: Distributing requests evenly across multiple servers to prevent overload on any single machine.

- Asynchronous Communication: Using message queues or other asynchronous communication methods prevents blocking and improves responsiveness.

- Caching: Storing frequently accessed data in a cache to reduce database load.

For instance, a social media platform needs to handle millions of users. Scaling horizontally with load balancing and caching allows it to serve these users without performance issues.

Q 13. Explain the concept of sharding and its benefits.

Sharding is a technique for horizontally partitioning a large database across multiple machines. Imagine slicing a pizza into several pieces – each slice is a shard. This distributes the database load across multiple servers, improving scalability and performance.

Benefits: Improved scalability (capacity grows by adding shards, and each shard can be scaled independently), potentially reduced latency (if shards are placed by region, data can sit closer to its users), and improved availability (the failure of one shard makes only that shard’s data unavailable rather than the whole database). A well-designed sharding strategy is crucial for large-scale applications handling massive data volumes.

However, sharding introduces complexities like data consistency and managing the distribution of data across shards. Choosing a good sharding key is crucial to minimize data skew and ensure even distribution.
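A minimal sketch of hash-based shard routing, assuming the shard key is a string and shards are numbered `0..num_shards-1`; `shard_for` is a hypothetical helper. Hashing spreads keys evenly, but plain modulo shows why resharding is painful.

```python
import hashlib
from collections import Counter


def shard_for(key: str, num_shards: int) -> int:
    """Map a shard key to a shard index with a stable hash.

    Python's built-in hash() is randomized per process for strings, so a
    cryptographic digest is used to get the same answer on every server.
    """
    digest = hashlib.sha256(key.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


keys = [f"user:{i}" for i in range(10_000)]
counts = Counter(shard_for(k, 4) for k in keys)
print(sorted(counts.items()))  # about 2,500 keys per shard

# The catch with plain modulo: growing from 4 to 5 shards remaps most keys.
moved = sum(shard_for(k, 4) != shard_for(k, 5) for k in keys) / len(keys)
print(moved)  # about 0.8
```

That large remapping is the motivation for consistent hashing, which moves only a small fraction of keys when a shard is added.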

Q 14. How would you design a system to handle high-volume traffic?

Handling high-volume traffic requires a multi-pronged approach focusing on both infrastructure and application design.

- Load Balancing: Distribute traffic evenly across multiple servers using a load balancer. Consider techniques like round-robin, least connections, or IP hash.

- Caching: Use various caching layers (CDN, server-side cache, database cache) to reduce the load on backend systems. Caching frequently accessed data significantly improves response times.

- Asynchronous Processing: Offload non-critical tasks to message queues or background processes to avoid blocking the main request thread.

- Database Optimization: Optimize database queries, schema design, and indexing to improve query performance. Consider using read replicas to reduce the load on the primary database.

- Horizontal Scaling: Scale out by adding more servers to handle increased load. Utilize auto-scaling capabilities to dynamically adjust the number of servers based on demand.

Consider a news website during a breaking news event. Load balancing distributes the traffic across web servers, caching reduces database load, and asynchronous processing handles tasks like social media updates in the background, ensuring the site remains responsive.
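Least-connections balancing, one of the techniques listed above, routes each request to the backend with the fewest in-flight requests, which helps when request durations vary a lot. A minimal single-threaded sketch with hypothetical backend names:

```python
class LeastConnectionsBalancer:
    def __init__(self, backends):
        self.active = {b: 0 for b in backends}  # backend -> in-flight request count

    def acquire(self):
        """Pick the backend with the fewest in-flight requests (ties: first listed)."""
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        """Call when a request to `backend` completes."""
        self.active[backend] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
slow = lb.acquire()                  # app-1 takes a long-running request
fast_a, fast_b = lb.acquire(), lb.acquire()  # app-2 and app-3
lb.release(fast_a)
lb.release(fast_b)                   # the short requests finish
print(lb.acquire())                  # app-2 (app-1 is still busy)
```

Round-robin would have sent that last request back to the busy `app-1`.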

Q 15. What are some common design patterns used in distributed systems?

Distributed systems rely on several design patterns to manage complexity and achieve scalability. Let’s explore some common ones:

- Microservices: Breaking down a large application into smaller, independent services. This improves maintainability, scalability, and allows for independent deployments. Imagine an e-commerce site – separate services could handle user accounts, product catalogs, and order processing.

- Message Queues (e.g., Kafka, RabbitMQ): Decoupling services through asynchronous communication. Instead of direct calls, services exchange messages through a queue, improving resilience and allowing for varying service speeds. Think of it as a mailbox – services leave messages, and others pick them up when ready.

- Publish-Subscribe Pattern: One service publishes events, and other interested services subscribe to receive them. This allows for flexible communication and loose coupling. Imagine a stock ticker – services subscribe to receive updates on stock prices.

- Leader Election: In distributed systems requiring a single point of control (e.g., a distributed database), a leader election algorithm ensures one node is designated as the leader. This avoids conflicts and ensures consistency. Think of it like a team choosing a captain.

- Consistent Hashing: Distributes data across a cluster of nodes in a way that minimizes data movement when nodes are added or removed. It ensures load balancing and improves system stability. Imagine distributing website traffic across multiple servers – consistent hashing minimizes disruptions as servers are added or removed.

The choice of pattern depends on the specific needs of the system, balancing factors like consistency requirements, scalability needs, and fault tolerance.
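Consistent hashing, described above, can be sketched as a sorted ring of hashed positions. This version assumes SHA-256 for hashing and 100 virtual nodes per server, both arbitrary choices; `HashRing` and the server names are hypothetical.

```python
import bisect
import hashlib


def _hash(s: str) -> int:
    return int.from_bytes(hashlib.sha256(s.encode()).digest()[:8], "big")


class HashRing:
    """Consistent hashing ring with virtual nodes to smooth out the load."""

    def __init__(self, nodes=(), vnodes=100):
        self.vnodes = vnodes
        self._ring = []  # sorted list of (position, node)
        for node in nodes:
            self.add(node)

    def add(self, node):
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove(self, node):
        self._ring = [(h, n) for h, n in self._ring if n != node]

    def lookup(self, key):
        """The first virtual node clockwise from the key's position owns the key."""
        i = bisect.bisect(self._ring, (_hash(key),))
        return self._ring[i % len(self._ring)][1]


keys = [f"session:{i}" for i in range(10_000)]
ring = HashRing(["cache-a", "cache-b", "cache-c", "cache-d"])
before = {k: ring.lookup(k) for k in keys}
ring.add("cache-e")
moved = sum(before[k] != ring.lookup(k) for k in keys) / len(keys)
print(moved)  # roughly 0.2: only the keys the new node takes over move
```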

Q 16. Describe your experience with distributed tracing and logging.

Distributed tracing and logging are crucial for understanding the behavior of a distributed system. My experience involves using tools like Jaeger and Zipkin for tracing, and ELK stack (Elasticsearch, Logstash, Kibana) for centralized log management.

Tracing provides a view of requests as they propagate through multiple services, showing latency at each step. This allows for quick identification of bottlenecks. I’ve used this to pinpoint slow database queries affecting overall system performance. Logging, on the other hand, provides detailed information about the state of individual services, including errors and exceptions. Properly structured logs with contextual information (e.g., timestamps, request IDs, user IDs) are essential for effective debugging.

A key aspect is correlating logs and traces using unique request IDs, allowing us to follow the path of a request across multiple services and connect related log entries. In one project, this approach helped us quickly pinpoint the source of an intermittent error affecting a specific user, which was buried in a sea of logs from many different services.
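Correlating logs by request ID can be sketched with Python’s standard `contextvars` and `logging` modules. This is a hand-rolled illustration: the `X-Request-ID` header name is a common convention assumed here, and tracing tools such as Jaeger or Zipkin propagate their own trace context instead.

```python
import contextvars
import logging
import uuid

# Holds the ID of the request currently being handled ("-" outside any request).
request_id = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Copy the current request ID onto every log record so formatters can print it."""

    def filter(self, record):
        record.request_id = request_id.get()
        return True


handler = logging.StreamHandler()
handler.addFilter(RequestIdFilter())
handler.setFormatter(logging.Formatter("%(levelname)s [req=%(request_id)s] %(name)s: %(message)s"))
log = logging.getLogger("checkout")
log.addHandler(handler)
log.setLevel(logging.INFO)


def handle_request(headers):
    # Reuse the caller's ID if one was propagated, otherwise mint a new one.
    rid = headers.get("X-Request-ID") or uuid.uuid4().hex
    token = request_id.set(rid)
    try:
        log.info("charging card")
        # Any downstream call would forward the same ID in its own headers.
        return {"X-Request-ID": rid}
    finally:
        request_id.reset(token)


handle_request({"X-Request-ID": "abc123"})  # logs: INFO [req=abc123] checkout: charging card
```

Because every service logs the same ID, a single search in the centralized log store returns the request’s full path.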

Q 17. How do you monitor and manage a distributed system?

Monitoring and managing a distributed system requires a multi-faceted approach. Key aspects include:

- Metrics Collection: Gathering performance metrics (CPU usage, memory consumption, request latency, error rates) from each service using tools like Prometheus or Datadog.

- Alerting: Setting up automated alerts for critical thresholds, ensuring timely notification of issues. I’ve implemented alert systems that trigger notifications via email and Slack.

- Centralized Logging: Aggregating logs from all services for centralized analysis and troubleshooting. The ELK stack is a powerful tool for this.

- Distributed Tracing: As mentioned earlier, tracing is essential for understanding request flows and identifying bottlenecks.

- Health Checks: Regular health checks to ensure each service is running correctly. These checks are often incorporated into service discovery mechanisms.

- Dashboards: Creating dashboards visualizing key metrics, providing a holistic view of the system’s health. These dashboards need to be custom-built or tailored based on system requirements.

Proactive monitoring and a well-defined incident management process are crucial for maintaining system uptime and minimizing the impact of failures. This often involves regularly reviewing logs and metrics, performing capacity planning, and conducting regular stress tests.
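The logic behind a typical error-rate alert can be sketched as a sliding window over recent request outcomes. The window size, threshold, and minimum sample count are illustrative assumptions; in production this would usually be expressed as an alerting rule in a tool like Prometheus rather than hand-written code.

```python
from collections import deque


class ErrorRateAlert:
    """Fire when the error rate over the last `window` requests exceeds `threshold`."""

    def __init__(self, window=100, threshold=0.05, min_samples=20):
        self.results = deque(maxlen=window)  # True = success, False = error
        self.threshold = threshold
        self.min_samples = min_samples       # avoid alerting on a handful of requests

    def record(self, ok: bool) -> bool:
        self.results.append(ok)
        if len(self.results) < self.min_samples:
            return False
        error_rate = self.results.count(False) / len(self.results)
        return error_rate > self.threshold


alert = ErrorRateAlert(window=50, threshold=0.1, min_samples=10)
fired = [alert.record(ok=(i % 5 != 0)) for i in range(50)]  # every 5th request fails: 20% errors
print(fired.index(True))  # 9: the first evaluation with enough samples already exceeds 10%
```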

Q 18. Explain different methods for achieving data consistency in a distributed database.

Achieving data consistency in a distributed database is challenging due to the inherent complexities of distributed environments. Several methods exist, each with trade-offs:

- Strong Consistency (typically built on consensus protocols such as Paxos or Raft): Guarantees that all nodes see the same data at the same time. This provides a simple programming model but can impact performance and availability. It’s typically used in situations where consistency is paramount, such as financial transactions.

- Weak Consistency: Makes no guarantee that a read returns the latest write, trading immediate consistency for availability and performance. This approach is suitable for applications where stale reads are acceptable (e.g., social media updates); eventual consistency is its most common form.

- Sequential Consistency: Ensures that all nodes observe operations in a single total order that respects each client’s own order of operations, even if that order differs from the real-time order of execution. This is much stronger than weak consistency but still less stringent than strong consistency (linearizability).

- Eventual Consistency: A relaxed guarantee that, once updates stop, all replicas converge to the same value after some period of time. It’s often used in systems with high write loads, where strong consistency would significantly impact performance. Example: Many cloud storage services use this approach, where eventually all replicas will have the same data.

The choice of consistency model depends heavily on the application’s requirements. Strong consistency provides simplicity but may limit scalability, while weaker models provide greater scalability but require careful consideration of potential inconsistencies.
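Eventual consistency needs a merge rule that every replica applies the same way. One simple choice, shown here as a sketch, is a last-write-wins (LWW) register; it assumes each write carries a timestamp plus a node ID as a deterministic tie-breaker, and the replica names are hypothetical. LWW converges, but it silently discards the losing write and is only as trustworthy as the clocks behind the timestamps.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LWWRegister:
    value: object
    timestamp: float
    node_id: str  # tie-breaker so every replica picks the same winner

    def merge(self, other: "LWWRegister") -> "LWWRegister":
        """Keep the write with the larger (timestamp, node_id). Commutative and idempotent."""
        return max(self, other, key=lambda r: (r.timestamp, r.node_id))


us = LWWRegister("v1", timestamp=100.0, node_id="us-east")
eu = LWWRegister("v2", timestamp=101.0, node_id="eu-west")
print(us.merge(eu).value, eu.merge(us).value)  # v2 v2: replicas converge regardless of merge order
```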

Q 19. What are the challenges of debugging a distributed system?

Debugging distributed systems presents unique challenges compared to monolithic applications. The key difficulties include:

- Non-determinism: The order of operations in a distributed system can vary unpredictably, making it hard to reproduce issues.

- Lack of centralized state: Data and state are distributed across multiple nodes, making it difficult to obtain a complete view of the system’s state at a particular point in time.

- Network issues: Network latency, packet loss, and network partitions can significantly impact system behavior and complicate debugging.

- Partial failures: Failures can be partial and transient, making diagnosis difficult. Services might be intermittently unavailable, producing inconsistent results.

- Time synchronization: Ensuring consistent time across all nodes is crucial for accurate logging and tracing, but challenging to maintain in a distributed environment.

Effective debugging strategies include: using comprehensive logging and tracing, implementing robust monitoring and alerting, utilizing distributed debugging tools, and careful design choices that reduce complexity.

In practice, recreating the issue in a controlled environment (e.g., using a local cluster) can be useful. Analyzing logs and traces correlated with timestamps and unique identifiers helps pinpoint the source of problems. Step-by-step tracing through the system is crucial for identifying the chain of events leading to a bug.

Q 20. Discuss your experience with message queues (e.g., Kafka, RabbitMQ).

I have extensive experience with message queues, primarily Kafka and RabbitMQ. Both are powerful tools for asynchronous communication, but they cater to different needs:

Kafka: Is a high-throughput, distributed streaming platform. It excels at handling massive volumes of data and is ideal for real-time data pipelines and event streaming. I’ve used Kafka to build systems for processing logs, tracking user activity, and handling real-time analytics. Its durability and fault tolerance are key advantages.

RabbitMQ: Is a more general-purpose message broker that supports various messaging patterns (point-to-point, publish-subscribe). It’s suitable for a wider range of use cases, including task queues and complex routing scenarios. In past projects, I used RabbitMQ for asynchronous task processing and inter-service communication in applications where message ordering or delivery guarantees are critical.

The choice between Kafka and RabbitMQ depends on the specific use case. Kafka shines with high throughput, durable and replayable logs, and real-time processing, while RabbitMQ offers more flexible routing and per-message acknowledgement semantics. Considerations include message volume, message ordering requirements, and desired level of fault tolerance.

Q 21. How would you design a distributed caching system?

Designing a distributed caching system requires careful consideration of several factors. Here’s a possible approach:

- Caching Layer: A distributed cache like Redis or Memcached sits in front of your backend data sources.

- Caching and Invalidation Strategy: Determining how writes reach the cache and when cached data is invalidated. Common patterns are cache-aside, write-through, and write-back, often combined with a time-to-live (TTL) on each entry. Write-through keeps the cache and the backing store in sync but adds latency to every write. Write-back offers better write performance but can serve stale data, or lose writes if a cache node fails before flushing, unless you add logic to handle this. Cache-aside balances the two.

- Data Partitioning: Distributing data across multiple cache nodes to improve scalability and availability (e.g., consistent hashing). This approach prevents overload of a single cache node.

- Cache Replication: Replicating cached data across multiple nodes to provide fault tolerance. This ensures data availability even if a cache node fails.

- Data Serialization/Deserialization: Choosing appropriate serialization formats (e.g., JSON, Protobuf) for efficient storage and retrieval of cached data.

- Monitoring and Management: Implementing monitoring tools to track cache performance (hit ratio, eviction rate) and proactively manage the system’s health. Monitoring the number of items in the cache and adjusting the cache size based on usage is important.

A crucial aspect is selecting a suitable caching technology. Redis and Memcached offer different features and performance characteristics. Redis is more versatile, supporting various data structures, while Memcached is simpler and can be faster for basic key-value storage. The choice depends on the data structures you need and the application’s requirements.
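The cache-aside pattern with a TTL and invalidate-on-write fits in a short sketch. Here an in-process dict stands in for Redis or Memcached, `load_fn` is a hypothetical loader for the backing store, and the injectable `clock` only exists so the example can control time.

```python
import time


class CacheAside:
    """Read from the cache; on a miss or expired entry, load from the store and populate."""

    def __init__(self, load_fn, ttl_seconds=60.0, clock=time.monotonic):
        self.load_fn = load_fn
        self.ttl = ttl_seconds
        self.clock = clock
        self._cache = {}  # key -> (expires_at, value); stands in for Redis/Memcached
        self.hits = self.misses = 0

    def get(self, key):
        entry = self._cache.get(key)
        if entry and entry[0] > self.clock():
            self.hits += 1
            return entry[1]
        self.misses += 1
        value = self.load_fn(key)
        self._cache[key] = (self.clock() + self.ttl, value)
        return value

    def invalidate(self, key):
        """Call after writing to the database so the next read reloads fresh data."""
        self._cache.pop(key, None)


db = {"user:1": "Ada"}
now = [0.0]
cache = CacheAside(db.get, ttl_seconds=30, clock=lambda: now[0])
cache.get("user:1")
cache.get("user:1")
print(cache.hits, cache.misses)  # 1 1

db["user:1"] = "Ada L."          # write to the database first...
cache.invalidate("user:1")       # ...then invalidate the cached copy
print(cache.get("user:1"))       # Ada L.
```

The hit and miss counters are the raw inputs for the hit-ratio monitoring described above.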

Q 22. What are some common performance bottlenecks in distributed systems?

Performance bottlenecks in distributed systems are multifaceted and often stem from interactions between various components. Think of it like a highway system – if one section is congested, the entire flow suffers.

- Network Latency: Communication delays between nodes are a major culprit. This is particularly problematic with geographically dispersed systems. Imagine trying to have a real-time conversation with someone across the globe – the lag can be significant.

- I/O Bottlenecks: Slow disk access or inefficient data retrieval from databases can cripple performance. It’s like trying to build a skyscraper on a weak foundation.

- Concurrency Issues: Improperly managed concurrent requests can lead to contention for resources, slowing things down considerably. Picture a busy restaurant with only a few waiters – each order takes longer to process.

- Data Transfer Overhead: The sheer volume of data transferred between nodes can be overwhelming. This is like trying to send a massive file over a slow internet connection.

- Inefficient Algorithms: Poorly designed algorithms or inefficient data structures can lead to unnecessary computation, especially in large-scale systems. It’s like using a bicycle to transport a mountain of goods instead of a truck.

Identifying these bottlenecks requires careful monitoring, profiling, and analysis of system logs and metrics. Tools like distributed tracing systems are crucial for understanding the flow of requests and pinpointing problematic areas.

Q 23. How do you handle network partitions in a distributed system?

Network partitions are a fact of life in distributed systems. They occur when communication between parts of the system is severed. Imagine a power outage splitting a city in two – communication between the separated parts is impossible until the outage is resolved. Handling them requires robust strategies, usually involving techniques like:

- Quorum-based Approaches: Requiring a minimum number of nodes to acknowledge an operation before it succeeds. This helps ensure data consistency even with some nodes unavailable. If we need at least 3 out of 5 servers to confirm a transaction and only 2 are reachable on our side of the partition, the transaction cannot complete until the partition heals.

- State Replication: Replicating data across multiple nodes to tolerate failures. If one node becomes unreachable due to partition, data can still be accessed from the replicas.

- Conflict Resolution Mechanisms: Implementing strategies to deal with conflicting updates made by different parts of the system during a partition. This may involve versioning, timestamps, or conflict resolution algorithms.

- Fault Tolerance Design: Designing systems that can continue operating with degraded performance even with parts of the network unavailable. This includes using techniques like circuit breakers to prevent cascading failures.

The specific approach depends heavily on the application’s needs for consistency and availability. Choosing between eventual consistency and strong consistency is a crucial design decision.
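The quorum arithmetic behind the 3-of-5 example is small enough to write out. These helper names are hypothetical; the rules themselves are standard: a majority of N is ⌊N/2⌋ + 1, so at most one side of a partition can hold one, and read and write quorums of sizes R and W are guaranteed to overlap when R + W > N.

```python
def majority(n: int) -> int:
    """Smallest number of nodes that forms a majority of n."""
    return n // 2 + 1


def can_commit(reachable: int, n: int) -> bool:
    """A side of a partition can make progress only if it holds a majority."""
    return reachable >= majority(n)


def quorums_overlap(n: int, r: int, w: int) -> bool:
    """With R + W > N, every read quorum intersects every write quorum."""
    return r + w > n


n = 5
# A partition splits the cluster into sides of 3 and 2 nodes.
print(can_commit(3, n), can_commit(2, n))                          # True False
print(quorums_overlap(n, r=3, w=3), quorums_overlap(n, r=2, w=2))  # True False
```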

Q 24. Explain the difference between synchronous and asynchronous communication in a distributed system.

Synchronous and asynchronous communication are two fundamental paradigms in distributed systems. Think of it as the difference between making a phone call (synchronous) and sending an email (asynchronous).

- Synchronous Communication: Involves a direct, real-time interaction between two components. The sender waits for a response from the receiver before proceeding. This is like a conversation – you have to wait for your turn to speak and listen for the other person’s response. Examples include RPC (Remote Procedure Call) and RESTful APIs with blocking calls.

- Asynchronous Communication: The sender doesn’t wait for a response. It sends a message and continues processing other tasks. The receiver processes the message at its own pace. This is like sending an email – you don’t wait for the recipient to respond immediately. Examples include message queues (e.g., RabbitMQ, Kafka), event buses, and publish-subscribe systems.

The choice between synchronous and asynchronous communication depends on the specific requirements of the application. Synchronous communication is often simpler to implement and reason about. However, the caller is blocked until the callee responds, so a slow or failed downstream service can stall the caller and cascade into wider outages. Asynchronous communication provides better scalability, fault tolerance, and decoupling. In exchange, it requires careful handling of message ordering, retries, duplicate delivery, and errors.
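To make the contrast concrete, here is a minimal single-process sketch using only Python's standard library. The `queue.Queue` and worker thread stand in for a message broker such as RabbitMQ or Kafka. This is an assumption for illustration: a real broker adds persistence, acknowledgements, and network transport. `slow_service` is a hypothetical downstream call with simulated latency.

```python
import queue
import threading
import time


def slow_service(x: int) -> int:
    time.sleep(0.1)  # simulated network + processing latency
    return x * 2


# Synchronous: the caller blocks until the callee returns a result.
def call_sync(x: int) -> int:
    return slow_service(x)


# Asynchronous: the caller enqueues a message and moves on;
# a separate consumer processes messages at its own pace.
inbox = queue.Queue()
results = []


def consumer():
    while True:
        msg = inbox.get()
        if msg is None:  # sentinel value: shut the consumer down
            inbox.task_done()
            break
        results.append(slow_service(msg))
        inbox.task_done()


worker = threading.Thread(target=consumer, daemon=True)
worker.start()

start = time.perf_counter()
print(call_sync(21))  # blocks ~0.1 s, then prints 42
sync_elapsed = time.perf_counter() - start

start = time.perf_counter()
for i in range(3):
    inbox.put(i)  # returns immediately; no reply is awaited
send_elapsed = time.perf_counter() - start

inbox.put(None)
inbox.join()  # wait here only so the demo can inspect the results
print(results)  # [0, 2, 4]
```

Sending three asynchronous messages takes far less time than one synchronous call. The work still happens, just off the caller's critical path.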

Q 25. Discuss your experience with various distributed consensus algorithms (e.g., Raft, Paxos).

I have extensive experience with various distributed consensus algorithms. Raft and Paxos are the most prominent examples. Both aim to get a group of nodes to agree on a value (or a sequence of values) even when some nodes fail, much like a committee reaching a decision by majority vote.

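- Paxos: Known for its theoretical elegance and rigorous safety guarantees, but notoriously hard to implement. It is really a family of algorithms (e.g., Basic Paxos and Multi-Paxos), and many details a production system needs are left unspecified, which makes it even more challenging in practice.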

- Raft: Designed to be more practical and easier to understand than Paxos. It achieves similar guarantees using a simpler, more intuitive leader-based approach. Its clear structure makes it easier to debug and maintain.
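Raft's leader election hinges on a small vote-granting rule. The sketch below covers only that rule as described in the Raft paper:

- reject candidates from stale terms;
- cast at most one vote per term;
- vote only for a candidate whose log is at least as up-to-date as the voter's.

Timers, RPC transport, log replication, and persisting state to disk are omitted. `NodeState`, `handle_request_vote`, and `wins_election` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeState:
    current_term: int
    voted_for: Optional[str]
    last_log_term: int
    last_log_index: int


def handle_request_vote(node: NodeState, cand_id: str, cand_term: int,
                        cand_last_log_term: int, cand_last_log_index: int) -> bool:
    # A candidate from an older term is rejected outright.
    if cand_term < node.current_term:
        return False
    # Seeing a newer term: adopt it and forget any vote cast in the old term.
    if cand_term > node.current_term:
        node.current_term = cand_term
        node.voted_for = None
    # At most one vote per term (re-voting for the same candidate is allowed).
    if node.voted_for not in (None, cand_id):
        return False
    # Election restriction: compare the last entry's term first, then log length.
    # Python's tuple comparison is lexicographic, which matches this rule.
    if (cand_last_log_term, cand_last_log_index) < (node.last_log_term, node.last_log_index):
        return False
    node.voted_for = cand_id
    return True


def wins_election(votes_granted: int, cluster_size: int) -> bool:
    # Votes include the candidate's own; a strict majority is required.
    return votes_granted > cluster_size // 2


follower = NodeState(current_term=3, voted_for=None, last_log_term=3, last_log_index=7)
print(handle_request_vote(follower, "B", 4, 3, 7))  # True: newer term, log as up-to-date
print(handle_request_vote(follower, "C", 4, 3, 9))  # False: already voted for B in term 4
print(wins_election(3, 5))  # True
```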

In my previous role, we used Raft to build a highly available distributed database. We chose Raft due to its relative simplicity and the availability of well-documented libraries. The simplicity made it easier for the team to understand, debug, and maintain the system.

Understanding the trade-offs between these algorithms (and others, such as Zab, used in ZooKeeper) is crucial for selecting the right tool for the job. Factors like the system’s size, complexity, and required consistency guarantees all play a role.

Q 26. How do you ensure security in a distributed system?

Ensuring security in a distributed system is paramount. It’s like guarding a sprawling castle – you need multiple layers of defense. Key strategies include:

- Authentication and Authorization: Verify the identity of users and processes attempting to access the system, and control their access rights. This is akin to using passwords and keycards to control access to the castle.

- Data Encryption: Protect data both in transit and at rest using encryption algorithms. This is like using strong locks and vaults to secure valuables.

- Secure Communication Channels: Use TLS (e.g., HTTPS, or mutual TLS so that both sides authenticate each other) to encrypt communication between nodes. This prevents eavesdropping on conversations within the castle.

- Input Validation: Validate and sanitize all user inputs, and use parameterized queries and output encoding, to prevent injection attacks such as SQL injection and cross-site scripting. This prevents sabotage or infiltration from outside.

- Regular Security Audits and Penetration Testing: Regularly assess the security posture of the system to identify vulnerabilities and strengthen defenses. This is like routinely inspecting the castle walls for weaknesses.

- Secure Configuration Management: Implement secure configurations for all components of the distributed system. This is like setting up and enforcing strict security protocols and procedures.
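Parameterized queries are the standard defense against the SQL injection mentioned in the input-validation point. In the sketch below, the in-memory `users` table and both lookup functions are hypothetical. The only library used is Python's built-in `sqlite3` module. Its `?` placeholders pass values to the database separately from the SQL text.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")])


def find_user_unsafe(name: str):
    # VULNERABLE: user input is spliced directly into the SQL text.
    return conn.execute(f"SELECT name, role FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(name: str):
    # Parameterized: the driver passes `name` as data, never as SQL.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


attack = "x' OR '1'='1"
print(len(find_user_unsafe(attack)))  # 2: the injected OR clause matches every row
print(find_user_safe(attack))  # []: no user is literally named "x' OR '1'='1"
```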

Security is an ongoing process, not a one-time fix. Continuous monitoring, patching, and updates are essential to maintain a secure distributed system.

Q 27. Describe your experience with containerization technologies (e.g., Docker, Kubernetes) in a distributed environment.

Containerization (Docker) and container orchestration (Kubernetes) are widely used for building and managing distributed systems. They provide a consistent and efficient way to package, deploy, and scale applications.

- Docker: Provides a lightweight, portable way to package applications and their dependencies into containers. Think of it like creating a self-contained apartment for each application.

- Kubernetes: An orchestration platform for managing containers across a cluster of machines. This is like having a property management company that handles the distribution and maintenance of these apartments.

In my previous projects, we leveraged Docker and Kubernetes to manage hundreds of microservices across a large cloud infrastructure. Kubernetes’s features like automatic scaling, self-healing, and rolling updates were crucial for ensuring system stability and resilience. Using containerization allowed for faster deployment, simplified management, and improved resource utilization, making the overall system far more efficient and manageable.

Q 28. Explain how you would approach designing a system for eventual consistency.

Designing a system for eventual consistency means accepting that data may not be immediately consistent across all nodes, but it will eventually become consistent. It’s like accepting that while different offices of a large company may have slightly different versions of a report initially, they will eventually converge on the same version.

The approach involves:

- Choosing the right data replication strategy: Strategies like gossip protocols or asynchronous replication are well-suited for eventual consistency. In a gossip protocol, for instance, each node periodically exchanges updates with randomly chosen peers, so changes spread through the cluster without central coordination.

- Defining conflict resolution strategies: Decide how to handle conflicting updates. Last-write-wins, conflict-free replicated data types (CRDTs), and custom conflict resolution logic are common approaches.

- Managing consistency guarantees: Clearly define the level of consistency acceptable for the application. Understanding the trade-offs between consistency and availability is crucial.

- Using appropriate data models: Certain data models are better suited for eventual consistency than others. NoSQL databases often fit this model more naturally than traditional relational databases.
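A grow-only counter (G-Counter) is one of the simplest CRDTs, and it shows why CRDTs converge without coordination. Each replica increments only its own entry, and merging takes the element-wise maximum. This is a minimal sketch: the `GCounter` class is hypothetical. Real CRDT implementations also handle decrements (e.g., with PN-Counters), serialization, and message delivery.

```python
from typing import Dict


class GCounter:
    """Grow-only counter CRDT: each replica increments only its own slot."""

    def __init__(self, replica_id: str):
        self.replica_id = replica_id
        self.counts: Dict[str, int] = {}

    def increment(self, amount: int = 1) -> None:
        if amount < 0:
            raise ValueError("G-Counter only supports non-negative increments")
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + amount

    def value(self) -> int:
        return sum(self.counts.values())

    def merge(self, other: "GCounter") -> None:
        # Element-wise max is commutative, associative, and idempotent,
        # so replicas converge regardless of merge order or repetition.
        for rid, n in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), n)


a, b = GCounter("a"), GCounter("b")
a.increment(3)  # concurrent updates, e.g. on opposite sides of a partition
b.increment(2)
a.merge(b)  # replicas exchange state once connectivity returns
b.merge(a)
print(a.value(), b.value())  # 5 5
```

Because merging is idempotent, repeated gossip rounds that deliver the same state twice do not inflate the count.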

Eventual consistency is a powerful tool when high availability and scalability are paramount, as seen in systems like email, social media, and large-scale data processing pipelines. It’s important to remember that carefully defining consistency expectations is key to avoiding surprises in the application’s behavior.

Key Topics to Learn for Distributed Systems Design Interview

- Consistency and Fault Tolerance: Understand different consistency models (e.g., strong, eventual) and how to design systems that handle failures gracefully. Explore techniques like replication, consensus algorithms (Paxos, Raft), and distributed transactions.

- Data Partitioning and Replication: Learn how to effectively partition large datasets across multiple nodes, manage replication strategies for high availability and scalability, and understand the trade-offs between consistency, availability, and partition tolerance (CAP theorem).

- Distributed Consensus and Coordination: Grasp the challenges of achieving agreement in a distributed environment. Explore leader election algorithms and techniques for managing distributed locks and semaphores.

- Microservices Architecture: Understand the principles of microservices, including service discovery, inter-service communication (REST, gRPC), and API gateways. Be prepared to discuss design patterns for building robust and scalable microservices.

- Networking and Communication: Familiarize yourself with different network protocols and communication patterns used in distributed systems. Understand concepts like TCP/IP, UDP, and message queues.

- Security in Distributed Systems: Explore security considerations relevant to distributed systems, including authentication, authorization, encryption, and data protection.

- Practical Application: Consider real-world examples like designing a distributed database, a high-throughput messaging system, or a cloud-based application. Think about scalability, performance, and maintainability.

- Problem-Solving Approach: Practice breaking down complex problems into smaller, manageable components. Develop a structured approach to designing and implementing distributed systems, considering various trade-offs and constraints.

Next Steps

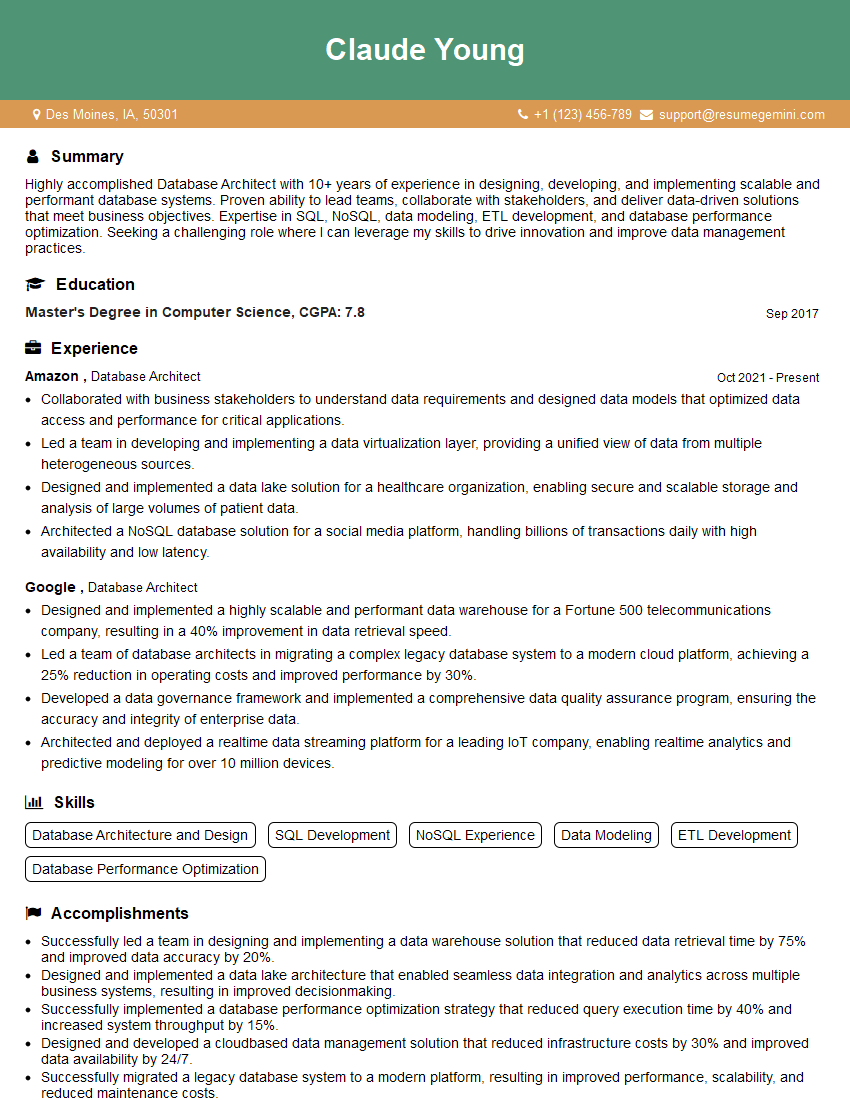

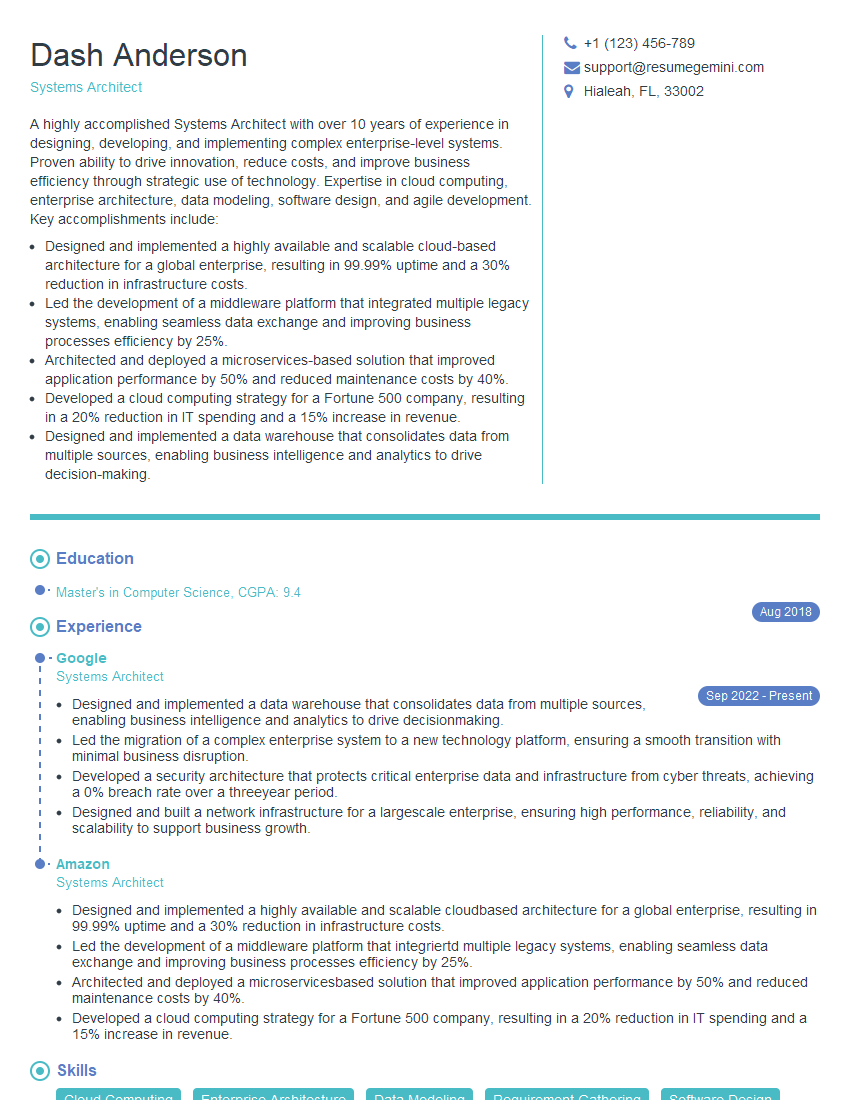

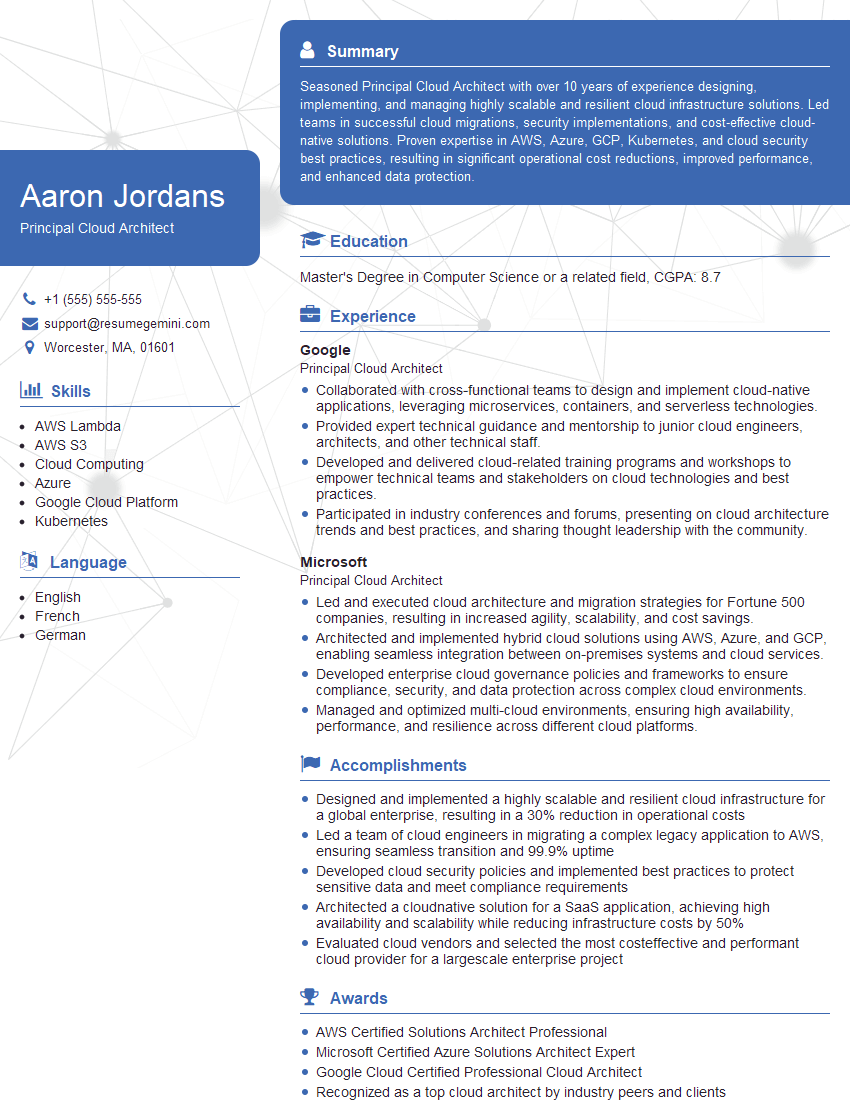

Mastering Distributed Systems Design is crucial for career advancement in today’s technology landscape. It opens doors to exciting opportunities in high-growth companies and positions you as a sought-after expert in building scalable and resilient applications. To maximize your job prospects, crafting an ATS-friendly resume is essential. ResumeGemini can significantly enhance your resume-building experience, helping you present your skills and experience effectively to potential employers. ResumeGemini provides examples of resumes tailored to Distributed Systems Design, giving you a head start in creating a compelling application.
